Merge branch 'ggml-org:master' into power-law-sampler

commit f4703d422c

|

|

@ -70,6 +70,7 @@ jobs:

|

|||

with:

|

||||

key: macOS-latest-cmake-arm64

|

||||

evict-old-files: 1d

|

||||

save: ${{ github.event_name == 'push' && github.ref == 'refs/heads/master' }}

|

||||

|

||||

- name: Build

|

||||

id: cmake_build

|

||||

|

|

@ -106,6 +107,7 @@ jobs:

|

|||

with:

|

||||

key: macOS-latest-cmake-x64

|

||||

evict-old-files: 1d

|

||||

save: ${{ github.event_name == 'push' && github.ref == 'refs/heads/master' }}

|

||||

|

||||

- name: Build

|

||||

id: cmake_build

|

||||

|

|

@ -142,6 +144,7 @@ jobs:

|

|||

with:

|

||||

key: macOS-latest-cmake-arm64-webgpu

|

||||

evict-old-files: 1d

|

||||

save: ${{ github.event_name == 'push' && github.ref == 'refs/heads/master' }}

|

||||

|

||||

- name: Dawn Dependency

|

||||

id: dawn-depends

|

||||

|

|

@ -195,6 +198,7 @@ jobs:

|

|||

with:

|

||||

key: ubuntu-cpu-cmake-${{ matrix.build }}

|

||||

evict-old-files: 1d

|

||||

save: ${{ github.event_name == 'push' && github.ref == 'refs/heads/master' }}

|

||||

|

||||

- name: Build Dependencies

|

||||

id: build_depends

|

||||

|

|

@ -276,6 +280,7 @@ jobs:

|

|||

with:

|

||||

key: ubuntu-latest-cmake-sanitizer-${{ matrix.sanitizer }}

|

||||

evict-old-files: 1d

|

||||

save: ${{ github.event_name == 'push' && github.ref == 'refs/heads/master' }}

|

||||

|

||||

- name: Dependencies

|

||||

id: depends

|

||||

|

|

@ -396,6 +401,7 @@ jobs:

|

|||

with:

|

||||

key: ubuntu-24-cmake-vulkan-deb

|

||||

evict-old-files: 1d

|

||||

save: ${{ github.event_name == 'push' && github.ref == 'refs/heads/master' }}

|

||||

|

||||

- name: Dependencies

|

||||

id: depends

|

||||

|

|

@ -431,6 +437,7 @@ jobs:

|

|||

with:

|

||||

key: ubuntu-24-cmake-vulkan

|

||||

evict-old-files: 1d

|

||||

save: ${{ github.event_name == 'push' && github.ref == 'refs/heads/master' }}

|

||||

|

||||

- name: Dependencies

|

||||

id: depends

|

||||

|

|

@ -490,6 +497,7 @@ jobs:

|

|||

with:

|

||||

key: ubuntu-24-cmake-webgpu

|

||||

evict-old-files: 1d

|

||||

save: ${{ github.event_name == 'push' && github.ref == 'refs/heads/master' }}

|

||||

|

||||

- name: Dependencies

|

||||

id: depends

|

||||

|

|

@ -562,6 +570,7 @@ jobs:

|

|||

with:

|

||||

key: ubuntu-latest-wasm-webgpu

|

||||

evict-old-files: 1d

|

||||

save: ${{ github.event_name == 'push' && github.ref == 'refs/heads/master' }}

|

||||

|

||||

- name: Install Emscripten

|

||||

run: |

|

||||

|

|

@ -609,6 +618,7 @@ jobs:

|

|||

with:

|

||||

key: ubuntu-22-cmake-hip

|

||||

evict-old-files: 1d

|

||||

save: ${{ github.event_name == 'push' && github.ref == 'refs/heads/master' }}

|

||||

|

||||

- name: Build with native CMake HIP support

|

||||

id: cmake_build

|

||||

|

|

@ -641,6 +651,7 @@ jobs:

|

|||

with:

|

||||

key: ubuntu-22-cmake-musa

|

||||

evict-old-files: 1d

|

||||

save: ${{ github.event_name == 'push' && github.ref == 'refs/heads/master' }}

|

||||

|

||||

- name: Build with native CMake MUSA support

|

||||

id: cmake_build

|

||||

|

|

@ -688,6 +699,7 @@ jobs:

|

|||

with:

|

||||

key: ubuntu-22-cmake-sycl

|

||||

evict-old-files: 1d

|

||||

save: ${{ github.event_name == 'push' && github.ref == 'refs/heads/master' }}

|

||||

|

||||

- name: Build

|

||||

id: cmake_build

|

||||

|

|

@ -738,6 +750,7 @@ jobs:

|

|||

with:

|

||||

key: ubuntu-22-cmake-sycl-fp16

|

||||

evict-old-files: 1d

|

||||

save: ${{ github.event_name == 'push' && github.ref == 'refs/heads/master' }}

|

||||

|

||||

- name: Build

|

||||

id: cmake_build

|

||||

|

|

@ -771,6 +784,7 @@ jobs:

|

|||

with:

|

||||

key: macOS-latest-cmake-ios

|

||||

evict-old-files: 1d

|

||||

save: ${{ github.event_name == 'push' && github.ref == 'refs/heads/master' }}

|

||||

|

||||

- name: Build

|

||||

id: cmake_build

|

||||

|

|

@ -802,6 +816,7 @@ jobs:

|

|||

with:

|

||||

key: macOS-latest-cmake-tvos

|

||||

evict-old-files: 1d

|

||||

save: ${{ github.event_name == 'push' && github.ref == 'refs/heads/master' }}

|

||||

|

||||

- name: Build

|

||||

id: cmake_build

|

||||

|

|

@ -863,6 +878,7 @@ jobs:

|

|||

with:

|

||||

key: macOS-latest-swift

|

||||

evict-old-files: 1d

|

||||

save: ${{ github.event_name == 'push' && github.ref == 'refs/heads/master' }}

|

||||

|

||||

- name: Download xcframework artifact

|

||||

uses: actions/download-artifact@v4

|

||||

|

|

@ -905,6 +921,7 @@ jobs:

|

|||

key: windows-msys2

|

||||

variant: ccache

|

||||

evict-old-files: 1d

|

||||

save: ${{ github.event_name == 'push' && github.ref == 'refs/heads/master' }}

|

||||

|

||||

- name: Setup ${{ matrix.sys }}

|

||||

uses: msys2/setup-msys2@v2

|

||||

|

|

@ -973,6 +990,7 @@ jobs:

|

|||

key: windows-latest-cmake-${{ matrix.build }}

|

||||

variant: ccache

|

||||

evict-old-files: 1d

|

||||

save: ${{ github.event_name == 'push' && github.ref == 'refs/heads/master' }}

|

||||

|

||||

- name: Download OpenBLAS

|

||||

id: get_openblas

|

||||

|

|

@ -1077,6 +1095,7 @@ jobs:

|

|||

with:

|

||||

key: ubuntu-latest-cmake-cuda

|

||||

evict-old-files: 1d

|

||||

save: ${{ github.event_name == 'push' && github.ref == 'refs/heads/master' }}

|

||||

|

||||

- name: Build with CMake

|

||||

run: |

|

||||

|

|

@ -1109,6 +1128,7 @@ jobs:

|

|||

key: windows-cuda-${{ matrix.cuda }}

|

||||

variant: ccache

|

||||

evict-old-files: 1d

|

||||

save: ${{ github.event_name == 'push' && github.ref == 'refs/heads/master' }}

|

||||

|

||||

- name: Install Cuda Toolkit

|

||||

uses: ./.github/actions/windows-setup-cuda

|

||||

|

|

@ -1160,6 +1180,7 @@ jobs:

|

|||

key: windows-latest-cmake-sycl

|

||||

variant: ccache

|

||||

evict-old-files: 1d

|

||||

save: ${{ github.event_name == 'push' && github.ref == 'refs/heads/master' }}

|

||||

|

||||

- name: Install

|

||||

run: |

|

||||

|

|

@ -1221,6 +1242,7 @@ jobs:

|

|||

with:

|

||||

key: ${{ github.job }}

|

||||

evict-old-files: 1d

|

||||

save: ${{ github.event_name == 'push' && github.ref == 'refs/heads/master' }}

|

||||

|

||||

- name: Build

|

||||

id: cmake_build

|

||||

|

|

@ -1466,6 +1488,7 @@ jobs:

|

|||

with:

|

||||

key: ggml-ci-x64-cpu-low-perf

|

||||

evict-old-files: 1d

|

||||

save: ${{ github.event_name == 'push' && github.ref == 'refs/heads/master' }}

|

||||

|

||||

- name: Dependencies

|

||||

id: depends

|

||||

|

|

@ -1491,6 +1514,7 @@ jobs:

|

|||

with:

|

||||

key: ggml-ci-arm64-cpu-low-perf

|

||||

evict-old-files: 1d

|

||||

save: ${{ github.event_name == 'push' && github.ref == 'refs/heads/master' }}

|

||||

|

||||

- name: Dependencies

|

||||

id: depends

|

||||

|

|

@ -1516,6 +1540,7 @@ jobs:

|

|||

with:

|

||||

key: ggml-ci-x64-cpu-high-perf

|

||||

evict-old-files: 1d

|

||||

save: ${{ github.event_name == 'push' && github.ref == 'refs/heads/master' }}

|

||||

|

||||

- name: Dependencies

|

||||

id: depends

|

||||

|

|

@ -1541,6 +1566,7 @@ jobs:

|

|||

with:

|

||||

key: ggml-ci-arm64-cpu-high-perf

|

||||

evict-old-files: 1d

|

||||

save: ${{ github.event_name == 'push' && github.ref == 'refs/heads/master' }}

|

||||

|

||||

- name: Dependencies

|

||||

id: depends

|

||||

|

|

@ -1566,6 +1592,7 @@ jobs:

|

|||

with:

|

||||

key: ggml-ci-arm64-cpu-high-perf-sve

|

||||

evict-old-files: 1d

|

||||

save: ${{ github.event_name == 'push' && github.ref == 'refs/heads/master' }}

|

||||

|

||||

- name: Dependencies

|

||||

id: depends

|

||||

|

|

@ -1701,6 +1728,7 @@ jobs:

|

|||

with:

|

||||

key: ggml-ci-arm64-cpu-kleidiai

|

||||

evict-old-files: 1d

|

||||

save: ${{ github.event_name == 'push' && github.ref == 'refs/heads/master' }}

|

||||

|

||||

- name: Dependencies

|

||||

id: depends

|

||||

|

|

@ -2084,6 +2112,7 @@ jobs:

|

|||

with:

|

||||

key: ggml-ci-arm64-graviton4-kleidiai

|

||||

evict-old-files: 1d

|

||||

save: ${{ github.event_name == 'push' && github.ref == 'refs/heads/master' }}

|

||||

|

||||

- name: Test

|

||||

id: ggml-ci

|

||||

|

|

|

|||

|

|

@ -66,16 +66,9 @@ jobs:

|

|||

id: pack_artifacts

|

||||

run: |

|

||||

cp LICENSE ./build/bin/

|

||||

zip -y -r llama-${{ steps.tag.outputs.name }}-bin-macos-arm64.zip ./build/bin/*

|

||||

tar -czvf llama-${{ steps.tag.outputs.name }}-bin-macos-arm64.tar.gz -s ",./,llama-${{ steps.tag.outputs.name }}/," -C ./build/bin .

|

||||

|

||||

- name: Upload artifacts (zip)

|

||||

uses: actions/upload-artifact@v4

|

||||

with:

|

||||

path: llama-${{ steps.tag.outputs.name }}-bin-macos-arm64.zip

|

||||

name: llama-bin-macos-arm64.zip

|

||||

|

||||

- name: Upload artifacts (tar)

|

||||

- name: Upload artifacts

|

||||

uses: actions/upload-artifact@v4

|

||||

with:

|

||||

path: llama-${{ steps.tag.outputs.name }}-bin-macos-arm64.tar.gz

|

||||

|

|

@ -127,16 +120,9 @@ jobs:

|

|||

id: pack_artifacts

|

||||

run: |

|

||||

cp LICENSE ./build/bin/

|

||||

zip -y -r llama-${{ steps.tag.outputs.name }}-bin-macos-x64.zip ./build/bin/*

|

||||

tar -czvf llama-${{ steps.tag.outputs.name }}-bin-macos-x64.tar.gz -s ",./,llama-${{ steps.tag.outputs.name }}/," -C ./build/bin .

|

||||

|

||||

- name: Upload artifacts (zip)

|

||||

uses: actions/upload-artifact@v4

|

||||

with:

|

||||

path: llama-${{ steps.tag.outputs.name }}-bin-macos-x64.zip

|

||||

name: llama-bin-macos-x64.zip

|

||||

|

||||

- name: Upload artifacts (tar)

|

||||

- name: Upload artifacts

|

||||

uses: actions/upload-artifact@v4

|

||||

with:

|

||||

path: llama-${{ steps.tag.outputs.name }}-bin-macos-x64.tar.gz

|

||||

|

|

@ -196,16 +182,9 @@ jobs:

|

|||

id: pack_artifacts

|

||||

run: |

|

||||

cp LICENSE ./build/bin/

|

||||

zip -y -r llama-${{ steps.tag.outputs.name }}-bin-ubuntu-${{ matrix.build }}.zip ./build/bin/*

|

||||

tar -czvf llama-${{ steps.tag.outputs.name }}-bin-ubuntu-${{ matrix.build }}.tar.gz --transform "s,./,llama-${{ steps.tag.outputs.name }}/," -C ./build/bin .

|

||||

|

||||

- name: Upload artifacts (zip)

|

||||

uses: actions/upload-artifact@v4

|

||||

with:

|

||||

path: llama-${{ steps.tag.outputs.name }}-bin-ubuntu-${{ matrix.build }}.zip

|

||||

name: llama-bin-ubuntu-${{ matrix.build }}.zip

|

||||

|

||||

- name: Upload artifacts (tar)

|

||||

- name: Upload artifacts

|

||||

uses: actions/upload-artifact@v4

|

||||

with:

|

||||

path: llama-${{ steps.tag.outputs.name }}-bin-ubuntu-${{ matrix.build }}.tar.gz

|

||||

|

|

@ -256,16 +235,9 @@ jobs:

|

|||

id: pack_artifacts

|

||||

run: |

|

||||

cp LICENSE ./build/bin/

|

||||

zip -y -r llama-${{ steps.tag.outputs.name }}-bin-ubuntu-vulkan-x64.zip ./build/bin/*

|

||||

tar -czvf llama-${{ steps.tag.outputs.name }}-bin-ubuntu-vulkan-x64.tar.gz --transform "s,./,llama-${{ steps.tag.outputs.name }}/," -C ./build/bin .

|

||||

|

||||

- name: Upload artifacts (zip)

|

||||

uses: actions/upload-artifact@v4

|

||||

with:

|

||||

path: llama-${{ steps.tag.outputs.name }}-bin-ubuntu-vulkan-x64.zip

|

||||

name: llama-bin-ubuntu-vulkan-x64.zip

|

||||

|

||||

- name: Upload artifacts (tar)

|

||||

- name: Upload artifacts

|

||||

uses: actions/upload-artifact@v4

|

||||

with:

|

||||

path: llama-${{ steps.tag.outputs.name }}-bin-ubuntu-vulkan-x64.tar.gz

|

||||

|

|

@ -716,16 +688,9 @@ jobs:

|

|||

- name: Pack artifacts

|

||||

id: pack_artifacts

|

||||

run: |

|

||||

zip -y -r llama-${{ steps.tag.outputs.name }}-xcframework.zip build-apple/llama.xcframework

|

||||

tar -czvf llama-${{ steps.tag.outputs.name }}-xcframework.tar.gz -C build-apple llama.xcframework

|

||||

|

||||

- name: Upload artifacts (zip)

|

||||

uses: actions/upload-artifact@v4

|

||||

with:

|

||||

path: llama-${{ steps.tag.outputs.name }}-xcframework.zip

|

||||

name: llama-${{ steps.tag.outputs.name }}-xcframework.zip

|

||||

|

||||

- name: Upload artifacts (tar)

|

||||

- name: Upload artifacts

|

||||

uses: actions/upload-artifact@v4

|

||||

with:

|

||||

path: llama-${{ steps.tag.outputs.name }}-xcframework.tar.gz

|

||||

|

|

@ -797,7 +762,7 @@ jobs:

|

|||

cp LICENSE ./build/bin/

|

||||

tar -czvf llama-${{ steps.tag.outputs.name }}-bin-${{ matrix.chip_type }}-openEuler-${{ matrix.arch }}.tar.gz --transform "s,./,llama-${{ steps.tag.outputs.name }}/," -C ./build/bin .

|

||||

|

||||

- name: Upload artifacts (tar)

|

||||

- name: Upload artifacts

|

||||

uses: actions/upload-artifact@v4

|

||||

with:

|

||||

path: llama-${{ steps.tag.outputs.name }}-bin-${{ matrix.chip_type }}-openEuler-${{ matrix.arch }}.tar.gz

|

||||

|

|

@ -889,9 +854,6 @@ jobs:

|

|||

with:

|

||||

tag_name: ${{ steps.tag.outputs.name }}

|

||||

body: |

|

||||

> [!WARNING]

|

||||

> **Release Format Update**: Linux releases will soon use .tar.gz archives instead of .zip. Please make the necessary changes to your deployment scripts.

|

||||

|

||||

<details open>

|

||||

|

||||

${{ github.event.head_commit.message }}

|

||||

|

|

@ -911,8 +873,8 @@ jobs:

|

|||

**Windows:**

|

||||

- [Windows x64 (CPU)](https://github.com/ggml-org/llama.cpp/releases/download/${{ steps.tag.outputs.name }}/llama-${{ steps.tag.outputs.name }}-bin-win-cpu-x64.zip)

|

||||

- [Windows arm64 (CPU)](https://github.com/ggml-org/llama.cpp/releases/download/${{ steps.tag.outputs.name }}/llama-${{ steps.tag.outputs.name }}-bin-win-cpu-arm64.zip)

|

||||

- [Windows x64 (CUDA 12)](https://github.com/ggml-org/llama.cpp/releases/download/${{ steps.tag.outputs.name }}/llama-${{ steps.tag.outputs.name }}-bin-win-cuda-12.4-x64.zip)

|

||||

- [Windows x64 (CUDA 13)](https://github.com/ggml-org/llama.cpp/releases/download/${{ steps.tag.outputs.name }}/llama-${{ steps.tag.outputs.name }}-bin-win-cuda-13.1-x64.zip)

|

||||

- [Windows x64 (CUDA 12)](https://github.com/ggml-org/llama.cpp/releases/download/${{ steps.tag.outputs.name }}/llama-${{ steps.tag.outputs.name }}-bin-win-cuda-12.4-x64.zip) - [CUDA 12.4 DLLs](https://github.com/ggml-org/llama.cpp/releases/download/${{ steps.tag.outputs.name }}/cudart-llama-bin-win-cuda-12.4-x64.zip)

|

||||

- [Windows x64 (CUDA 13)](https://github.com/ggml-org/llama.cpp/releases/download/${{ steps.tag.outputs.name }}/llama-${{ steps.tag.outputs.name }}-bin-win-cuda-13.1-x64.zip) - [CUDA 13.1 DLLs](https://github.com/ggml-org/llama.cpp/releases/download/${{ steps.tag.outputs.name }}/cudart-llama-bin-win-cuda-13.1-x64.zip)

|

||||

- [Windows x64 (Vulkan)](https://github.com/ggml-org/llama.cpp/releases/download/${{ steps.tag.outputs.name }}/llama-${{ steps.tag.outputs.name }}-bin-win-vulkan-x64.zip)

|

||||

- [Windows x64 (SYCL)](https://github.com/ggml-org/llama.cpp/releases/download/${{ steps.tag.outputs.name }}/llama-${{ steps.tag.outputs.name }}-bin-win-sycl-x64.zip)

|

||||

- [Windows x64 (HIP)](https://github.com/ggml-org/llama.cpp/releases/download/${{ steps.tag.outputs.name }}/llama-${{ steps.tag.outputs.name }}-bin-win-hip-radeon-x64.zip)

|

||||

|

|

|

|||

|

|

@ -772,6 +772,11 @@ bool common_params_to_map(int argc, char ** argv, llama_example ex, std::map<com

|

|||

}

|

||||

auto opt = *arg_to_options[arg];

|

||||

std::string val;

|

||||

if (opt.value_hint == nullptr && opt.value_hint_2 == nullptr) {

|

||||

// bool arg (need to reverse the meaning for negative args)

|

||||

bool is_neg = std::find(opt.args_neg.begin(), opt.args_neg.end(), arg) != opt.args_neg.end();

|

||||

val = is_neg ? "0" : "1";

|

||||

}

|

||||

if (opt.value_hint != nullptr) {

|

||||

// arg with single value

|

||||

check_arg(i);
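The boolean branch added in this hunk can be illustrated with a small stand-alone sketch. `toy_arg`, `bool_value_for`, and the flag names in the usage note are hypothetical and not part of llama.cpp; only the `args_neg` lookup and the "0"/"1" mapping mirror the hunk.

```cpp
#include <algorithm>
#include <string>
#include <vector>

// Hypothetical stand-in for common_arg: only the fields this sketch needs.
struct toy_arg {
    std::vector<std::string> args;      // positive spellings of the flag
    std::vector<std::string> args_neg;  // negated spellings of the same flag
};

// Map a boolean flag to its stored value: "1" for a positive spelling,
// "0" when the spelling appears in the negated list.
std::string bool_value_for(const toy_arg & opt, const std::string & arg) {
    bool is_neg = std::find(opt.args_neg.begin(), opt.args_neg.end(), arg) != opt.args_neg.end();
    return is_neg ? "0" : "1";
}
```

With a `toy_arg` whose `args` is `{"--feature"}` and whose `args_neg` is `{"--no-feature"}`, `bool_value_for` yields "1" for the first spelling and "0" for the second.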

|

||||

|

|

@ -1139,7 +1144,7 @@ common_params_context common_params_parser_init(common_params & params, llama_ex

|

|||

}

|

||||

).set_env("LLAMA_ARG_CTX_CHECKPOINTS").set_examples({LLAMA_EXAMPLE_SERVER, LLAMA_EXAMPLE_CLI}));

|

||||

add_opt(common_arg(

|

||||

{"--cache-ram", "-cram"}, "N",

|

||||

{"-cram", "--cache-ram"}, "N",

|

||||

string_format("set the maximum cache size in MiB (default: %d, -1 - no limit, 0 - disable)"

|

||||

"[(more info)](https://github.com/ggml-org/llama.cpp/pull/16391)", params.cache_ram_mib),

|

||||

[](common_params & params, int value) {

|

||||

|

|

@ -1147,7 +1152,7 @@ common_params_context common_params_parser_init(common_params & params, llama_ex

|

|||

}

|

||||

).set_env("LLAMA_ARG_CACHE_RAM").set_examples({LLAMA_EXAMPLE_SERVER, LLAMA_EXAMPLE_CLI}));

|

||||

add_opt(common_arg(

|

||||

{"--kv-unified", "-kvu"},

|

||||

{"-kvu", "--kv-unified"},

|

||||

"use single unified KV buffer shared across all sequences (default: enabled if number of slots is auto)",

|

||||

[](common_params & params) {

|

||||

params.kv_unified = true;

|

||||

|

|

@ -1196,7 +1201,7 @@ common_params_context common_params_parser_init(common_params & params, llama_ex

|

|||

[](common_params & params, const std::string & value) {

|

||||

params.system_prompt = value;

|

||||

}

|

||||

).set_examples({LLAMA_EXAMPLE_COMPLETION, LLAMA_EXAMPLE_CLI, LLAMA_EXAMPLE_DIFFUSION}));

|

||||

).set_examples({LLAMA_EXAMPLE_COMPLETION, LLAMA_EXAMPLE_CLI, LLAMA_EXAMPLE_DIFFUSION, LLAMA_EXAMPLE_MTMD}));

|

||||

add_opt(common_arg(

|

||||

{"--perf"},

|

||||

{"--no-perf"},

|

||||

|

|

@ -1415,7 +1420,7 @@ common_params_context common_params_parser_init(common_params & params, llama_ex

|

|||

}

|

||||

).set_sparam());

|

||||

add_opt(common_arg(

|

||||

{"--sampling-seq", "--sampler-seq"}, "SEQUENCE",

|

||||

{"--sampler-seq", "--sampling-seq"}, "SEQUENCE",

|

||||

string_format("simplified sequence for samplers that will be used (default: %s)", sampler_type_chars.c_str()),

|

||||

[](common_params & params, const std::string & value) {

|

||||

params.sampling.samplers = common_sampler_types_from_chars(value);

|

||||

|

|

@ -2091,26 +2096,26 @@ common_params_context common_params_parser_init(common_params & params, llama_ex

|

|||

}

|

||||

));

|

||||

add_opt(common_arg(

|

||||

{"--override-tensor", "-ot"}, "<tensor name pattern>=<buffer type>,...",

|

||||

{"-ot", "--override-tensor"}, "<tensor name pattern>=<buffer type>,...",

|

||||

"override tensor buffer type", [](common_params & params, const std::string & value) {

|

||||

parse_tensor_buffer_overrides(value, params.tensor_buft_overrides);

|

||||

}

|

||||

));

|

||||

add_opt(common_arg(

|

||||

{"--override-tensor-draft", "-otd"}, "<tensor name pattern>=<buffer type>,...",

|

||||

{"-otd", "--override-tensor-draft"}, "<tensor name pattern>=<buffer type>,...",

|

||||

"override tensor buffer type for draft model", [](common_params & params, const std::string & value) {

|

||||

parse_tensor_buffer_overrides(value, params.speculative.tensor_buft_overrides);

|

||||

}

|

||||

).set_examples({LLAMA_EXAMPLE_SPECULATIVE, LLAMA_EXAMPLE_SERVER, LLAMA_EXAMPLE_CLI}));

|

||||

add_opt(common_arg(

|

||||

{"--cpu-moe", "-cmoe"},

|

||||

{"-cmoe", "--cpu-moe"},

|

||||

"keep all Mixture of Experts (MoE) weights in the CPU",

|

||||

[](common_params & params) {

|

||||

params.tensor_buft_overrides.push_back(llm_ffn_exps_cpu_override());

|

||||

}

|

||||

).set_env("LLAMA_ARG_CPU_MOE"));

|

||||

add_opt(common_arg(

|

||||

{"--n-cpu-moe", "-ncmoe"}, "N",

|

||||

{"-ncmoe", "--n-cpu-moe"}, "N",

|

||||

"keep the Mixture of Experts (MoE) weights of the first N layers in the CPU",

|

||||

[](common_params & params, int value) {

|

||||

if (value < 0) {

|

||||

|

|

@ -2125,14 +2130,14 @@ common_params_context common_params_parser_init(common_params & params, llama_ex

|

|||

}

|

||||

).set_env("LLAMA_ARG_N_CPU_MOE"));

|

||||

add_opt(common_arg(

|

||||

{"--cpu-moe-draft", "-cmoed"},

|

||||

{"-cmoed", "--cpu-moe-draft"},

|

||||

"keep all Mixture of Experts (MoE) weights in the CPU for the draft model",

|

||||

[](common_params & params) {

|

||||

params.speculative.tensor_buft_overrides.push_back(llm_ffn_exps_cpu_override());

|

||||

}

|

||||

).set_examples({LLAMA_EXAMPLE_SPECULATIVE, LLAMA_EXAMPLE_SERVER, LLAMA_EXAMPLE_CLI}).set_env("LLAMA_ARG_CPU_MOE_DRAFT"));

|

||||

add_opt(common_arg(

|

||||

{"--n-cpu-moe-draft", "-ncmoed"}, "N",

|

||||

{"-ncmoed", "--n-cpu-moe-draft"}, "N",

|

||||

"keep the Mixture of Experts (MoE) weights of the first N layers in the CPU for the draft model",

|

||||

[](common_params & params, int value) {

|

||||

if (value < 0) {

|

||||

|

|

@ -2660,7 +2665,7 @@ common_params_context common_params_parser_init(common_params & params, llama_ex

|

|||

}

|

||||

).set_examples({LLAMA_EXAMPLE_SERVER}).set_env("LLAMA_ARG_EMBEDDINGS"));

|

||||

add_opt(common_arg(

|

||||

{"--reranking", "--rerank"},

|

||||

{"--rerank", "--reranking"},

|

||||

string_format("enable reranking endpoint on server (default: %s)", "disabled"),

|

||||

[](common_params & params) {

|

||||

params.embedding = true;

|

||||

|

|

@ -3131,7 +3136,7 @@ common_params_context common_params_parser_init(common_params & params, llama_ex

|

|||

}

|

||||

).set_examples({LLAMA_EXAMPLE_SPECULATIVE}));

|

||||

add_opt(common_arg(

|

||||

{"--draft-max", "--draft", "--draft-n"}, "N",

|

||||

{"--draft", "--draft-n", "--draft-max"}, "N",

|

||||

string_format("number of tokens to draft for speculative decoding (default: %d)", params.speculative.n_max),

|

||||

[](common_params & params, int value) {

|

||||

params.speculative.n_max = value;

|

||||

|

|

|

|||

|

|

@ -2,6 +2,7 @@

|

|||

#include "preset.h"

|

||||

#include "peg-parser.h"

|

||||

#include "log.h"

|

||||

#include "download.h"

|

||||

|

||||

#include <fstream>

|

||||

#include <sstream>

|

||||

|

|

@ -15,9 +16,13 @@ static std::string rm_leading_dashes(const std::string & str) {

|

|||

return str.substr(pos);

|

||||

}

|

||||

|

||||

std::vector<std::string> common_preset::to_args() const {

|

||||

std::vector<std::string> common_preset::to_args(const std::string & bin_path) const {

|

||||

std::vector<std::string> args;

|

||||

|

||||

if (!bin_path.empty()) {

|

||||

args.push_back(bin_path);

|

||||

}

|

||||

|

||||

for (const auto & [opt, value] : options) {

|

||||

args.push_back(opt.args.back()); // use the last arg as the main arg

|

||||

if (opt.value_hint == nullptr && opt.value_hint_2 == nullptr) {

|

||||

|

|

@ -63,6 +68,52 @@ std::string common_preset::to_ini() const {

|

|||

return ss.str();

|

||||

}

|

||||

|

||||

void common_preset::set_option(const common_preset_context & ctx, const std::string & env, const std::string & value) {

|

||||

// if the option already exists, update it

|

||||

for (auto & [opt, val] : options) {

|

||||

if (opt.env && env == opt.env) {

|

||||

val = value;

|

||||

return;

|

||||

}

|

||||

}

|

||||

// if option does not exist, we need to add it

|

||||

if (ctx.key_to_opt.find(env) == ctx.key_to_opt.end()) {

|

||||

throw std::runtime_error(string_format(

|

||||

"%s: option with env '%s' not found in ctx_params",

|

||||

__func__, env.c_str()

|

||||

));

|

||||

}

|

||||

options[ctx.key_to_opt.at(env)] = value;

|

||||

}

|

||||

|

||||

void common_preset::unset_option(const std::string & env) {

|

||||

for (auto it = options.begin(); it != options.end(); ) {

|

||||

const common_arg & opt = it->first;

|

||||

if (opt.env && env == opt.env) {

|

||||

it = options.erase(it);

|

||||

return;

|

||||

} else {

|

||||

++it;

|

||||

}

|

||||

}

|

||||

}

|

||||

|

||||

bool common_preset::get_option(const std::string & env, std::string & value) const {

|

||||

for (const auto & [opt, val] : options) {

|

||||

if (opt.env && env == opt.env) {

|

||||

value = val;

|

||||

return true;

|

||||

}

|

||||

}

|

||||

return false;

|

||||

}

|

||||

|

||||

void common_preset::merge(const common_preset & other) {

|

||||

for (const auto & [opt, val] : other.options) {

|

||||

options[opt] = val; // overwrite existing options

|

||||

}

|

||||

}
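As a rough illustration of the env-keyed accessors added here, the sketch below keys options directly by env name rather than by `common_arg`. `toy_context` and `toy_preset` are hypothetical simplifications; the real `set_option` resolves the env name through `ctx.key_to_opt`.

```cpp
#include <map>
#include <set>
#include <stdexcept>
#include <string>

// Hypothetical context: just the set of env names that are valid options.
struct toy_context {
    std::set<std::string> known_envs;
};

// Hypothetical preset: options keyed by env name instead of common_arg.
struct toy_preset {
    std::string name;
    std::map<std::string, std::string> options;

    // Update or add an option; reject env names the context does not know.
    void set_option(const toy_context & ctx, const std::string & env, const std::string & value) {
        if (ctx.known_envs.count(env) == 0) {
            throw std::runtime_error("option with env '" + env + "' not found");
        }
        options[env] = value;
    }

    // Remove an option if present; no-op otherwise.
    void unset_option(const std::string & env) {
        options.erase(env);
    }

    // Copy the value into `value` and return true, or return false if unset.
    bool get_option(const std::string & env, std::string & value) const {
        std::map<std::string, std::string>::const_iterator it = options.find(env);
        if (it == options.end()) {
            return false;
        }
        value = it->second;
        return true;
    }

    // Options from `other` overwrite options already present here.
    void merge(const toy_preset & other) {
        for (const auto & kv : other.options) {
            options[kv.first] = kv.second;
        }
    }
};
```

Keying by env name keeps the sketch short; the diff keeps `common_arg` as the map key, so its accessors scan the entries and compare `opt.env` instead.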

|

||||

|

||||

static std::map<std::string, std::map<std::string, std::string>> parse_ini_from_file(const std::string & path) {

|

||||

std::map<std::string, std::map<std::string, std::string>> parsed;

|

||||

|

||||

|

|

@ -172,9 +223,12 @@ static std::string parse_bool_arg(const common_arg & arg, const std::string & ke

|

|||

return value;

|

||||

}

|

||||

|

||||

common_presets common_presets_load(const std::string & path, common_params_context & ctx_params) {

|

||||

common_preset_context::common_preset_context(llama_example ex)

|

||||

: ctx_params(common_params_parser_init(default_params, ex)),

|

||||

key_to_opt(get_map_key_opt(ctx_params)) {}

|

||||

|

||||

common_presets common_preset_context::load_from_ini(const std::string & path, common_preset & global) const {

|

||||

common_presets out;

|

||||

auto key_to_opt = get_map_key_opt(ctx_params);

|

||||

auto ini_data = parse_ini_from_file(path);

|

||||

|

||||

for (auto section : ini_data) {

|

||||

|

|

@ -188,7 +242,7 @@ common_presets common_presets_load(const std::string & path, common_params_conte

|

|||

for (const auto & [key, value] : section.second) {

|

||||

LOG_DBG("option: %s = %s\n", key.c_str(), value.c_str());

|

||||

if (key_to_opt.find(key) != key_to_opt.end()) {

|

||||

auto & opt = key_to_opt[key];

|

||||

const auto & opt = key_to_opt.at(key);

|

||||

if (is_bool_arg(opt)) {

|

||||

preset.options[opt] = parse_bool_arg(opt, key, value);

|

||||

} else {

|

||||

|

|

@ -199,8 +253,137 @@ common_presets common_presets_load(const std::string & path, common_params_conte

|

|||

// TODO: maybe warn about unknown key?

|

||||

}

|

||||

}

|

||||

|

||||

if (preset.name == "*") {

|

||||

// handle global preset

|

||||

global = preset;

|

||||

} else {

|

||||

out[preset.name] = preset;

|

||||

}

|

||||

}

|

||||

|

||||

return out;

|

||||

}

|

||||

|

||||

common_presets common_preset_context::load_from_cache() const {

|

||||

common_presets out;

|

||||

|

||||

auto cached_models = common_list_cached_models();

|

||||

for (const auto & model : cached_models) {

|

||||

common_preset preset;

|

||||

preset.name = model.to_string();

|

||||

preset.set_option(*this, "LLAMA_ARG_HF_REPO", model.to_string());

|

||||

out[preset.name] = preset;

|

||||

}

|

||||

|

||||

return out;

|

||||

}

|

||||

|

||||

struct local_model {

|

||||

std::string name;

|

||||

std::string path;

|

||||

std::string path_mmproj;

|

||||

};

|

||||

|

||||

common_presets common_preset_context::load_from_models_dir(const std::string & models_dir) const {

|

||||

if (!std::filesystem::exists(models_dir) || !std::filesystem::is_directory(models_dir)) {

|

||||

throw std::runtime_error(string_format("error: '%s' does not exist or is not a directory\n", models_dir.c_str()));

|

||||

}

|

||||

|

||||

std::vector<local_model> models;

|

||||

auto scan_subdir = [&models](const std::string & subdir_path, const std::string & name) {

|

||||

auto files = fs_list(subdir_path, false);

|

||||

common_file_info model_file;

|

||||

common_file_info first_shard_file;

|

||||

common_file_info mmproj_file;

|

||||

for (const auto & file : files) {

|

||||

if (string_ends_with(file.name, ".gguf")) {

|

||||

if (file.name.find("mmproj") != std::string::npos) {

|

||||

mmproj_file = file;

|

||||

} else if (file.name.find("-00001-of-") != std::string::npos) {

|

||||

first_shard_file = file;

|

||||

} else {

|

||||

model_file = file;

|

||||

}

|

||||

}

|

||||

}

|

||||

// single-file model, or the first shard of a multi-file model

|

||||

local_model model{

|

||||

/* name */ name,

|

||||

/* path */ first_shard_file.path.empty() ? model_file.path : first_shard_file.path,

|

||||

/* path_mmproj */ mmproj_file.path // can be empty

|

||||

};

|

||||

if (!model.path.empty()) {

|

||||

models.push_back(model);

|

||||

}

|

||||

};

|

||||

|

||||

auto files = fs_list(models_dir, true);

|

||||

for (const auto & file : files) {

|

||||

if (file.is_dir) {

|

||||

scan_subdir(file.path, file.name);

|

||||

} else if (string_ends_with(file.name, ".gguf")) {

|

||||

// single file model

|

||||

std::string name = file.name;

|

||||

string_replace_all(name, ".gguf", "");

|

||||

local_model model{

|

||||

/* name */ name,

|

||||

/* path */ file.path,

|

||||

/* path_mmproj */ ""

|

||||

};

|

||||

models.push_back(model);

|

||||

}

|

||||

}

|

||||

|

||||

// convert local models to presets

|

||||

common_presets out;

|

||||

for (const auto & model : models) {

|

||||

common_preset preset;

|

||||

preset.name = model.name;

|

||||

preset.set_option(*this, "LLAMA_ARG_MODEL", model.path);

|

||||

if (!model.path_mmproj.empty()) {

|

||||

preset.set_option(*this, "LLAMA_ARG_MMPROJ", model.path_mmproj);

|

||||

}

|

||||

out[preset.name] = preset;

|

||||

}

|

||||

|

||||

return out;

|

||||

}
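The file-selection rule inside `scan_subdir` can be sketched on plain file names. `picked_files`, `pick_model_files`, and `ends_with` are hypothetical helpers for illustration; the diff itself works on `common_file_info` entries returned by `fs_list`.

```cpp
#include <string>
#include <vector>

// Hypothetical helper: true if `s` ends with `suffix`.
static bool ends_with(const std::string & s, const std::string & suffix) {
    return s.size() >= suffix.size() &&
           s.compare(s.size() - suffix.size(), suffix.size(), suffix) == 0;
}

// Hypothetical result type: which files a subdirectory contributes.
struct picked_files {
    std::string model;   // file to load; empty if the directory has no model
    std::string mmproj;  // multimodal projector file; may be empty
};

// Classify the .gguf files of one subdirectory the way the hunk does:
// "mmproj" in the name marks a projector, "-00001-of-" marks the first shard,
// and any other .gguf file is treated as a single-file model.
picked_files pick_model_files(const std::vector<std::string> & names) {
    std::string model_file;
    std::string first_shard;
    std::string mmproj;
    for (const auto & name : names) {
        if (!ends_with(name, ".gguf")) {
            continue;
        }
        if (name.find("mmproj") != std::string::npos) {
            mmproj = name;
        } else if (name.find("-00001-of-") != std::string::npos) {
            first_shard = name;
        } else {
            model_file = name;
        }
    }
    // The first shard takes precedence over any other model file.
    picked_files out;
    out.model  = first_shard.empty() ? model_file : first_shard;
    out.mmproj = mmproj;
    return out;
}
```

Note that later shards (for example a name containing `-00002-of-`) fall into the last branch, which is why the first shard is preferred when both are present.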

|

||||

|

||||

common_preset common_preset_context::load_from_args(int argc, char ** argv) const {

|

||||

common_preset preset;

|

||||

preset.name = COMMON_PRESET_DEFAULT_NAME;

|

||||

|

||||

bool ok = common_params_to_map(argc, argv, ctx_params.ex, preset.options);

|

||||

if (!ok) {

|

||||

throw std::runtime_error("failed to parse CLI arguments into preset");

|

||||

}

|

||||

|

||||

return preset;

|

||||

}

|

||||

|

||||

common_presets common_preset_context::cascade(const common_presets & base, const common_presets & added) const {

|

||||

common_presets out = base; // copy

|

||||

for (const auto & [name, preset_added] : added) {

|

||||

if (out.find(name) != out.end()) {

|

||||

// if exists, merge

|

||||

common_preset & target = out[name];

|

||||

target.merge(preset_added);

|

||||

} else {

|

||||

// otherwise, add directly

|

||||

out[name] = preset_added;

|

||||

}

|

||||

}

|

||||

return out;

|

||||

}

|

||||

|

||||

common_presets common_preset_context::cascade(const common_preset & base, const common_presets & presets) const {

|

||||

common_presets out;

|

||||

for (const auto & [name, preset] : presets) {

|

||||

common_preset tmp = base; // copy

|

||||

tmp.name = name;

|

||||

tmp.merge(preset);

|

||||

out[name] = std::move(tmp);

|

||||

}

|

||||

return out;

|

||||

}
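The two `cascade` overloads can be modelled with nested maps. In this sketch `toy_options`, `toy_presets`, and `merge_into` are hypothetical stand-ins for `common_preset`, `common_presets`, and `common_preset::merge`; option names are plain strings chosen for illustration.

```cpp
#include <map>
#include <string>

using toy_options = std::map<std::string, std::string>;  // option name -> value
using toy_presets = std::map<std::string, toy_options>;  // preset name -> options

// Options in `other` overwrite options already in `target`.
void merge_into(toy_options & target, const toy_options & other) {
    for (const auto & kv : other) {
        target[kv.first] = kv.second;
    }
}

// base < added: presets present in both are merged, with `added` winning;
// presets only in `added` are copied over unchanged.
toy_presets cascade(const toy_presets & base, const toy_presets & added) {
    toy_presets out = base;
    for (const auto & kv : added) {
        merge_into(out[kv.first], kv.second);
    }
    return out;
}

// Apply every preset on top of one shared base set of options.
toy_presets cascade(const toy_options & base, const toy_presets & presets) {
    toy_presets out;
    for (const auto & kv : presets) {
        toy_options tmp = base;  // start from the shared base
        merge_into(tmp, kv.second);
        out[kv.first] = tmp;
    }
    return out;
}
```

A caller might first apply a global option set to every named preset with the second overload, then layer another preset map on top with the first one; the ordering of those layers is a design choice the diff leaves to the caller.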

|

||||

|

|

|

|||

|

|

@ -13,20 +13,62 @@

|

|||

|

||||

constexpr const char * COMMON_PRESET_DEFAULT_NAME = "default";

struct common_preset_context;

struct common_preset {
    std::string name;
    // TODO: support repeated args in the future

    // options are stored as common_arg to string mapping, representing CLI arg and its value
    std::map<common_arg, std::string> options;

    // convert preset to CLI argument list
    std::vector<std::string> to_args() const;
    std::vector<std::string> to_args(const std::string & bin_path = "") const;

    // convert preset to INI format string
    std::string to_ini() const;

    // TODO: maybe implement to_env() if needed

    // modify preset options where argument is identified by its env variable
    void set_option(const common_preset_context & ctx, const std::string & env, const std::string & value);

    // unset option by its env variable
    void unset_option(const std::string & env);

    // get option value by its env variable, return false if not found
    bool get_option(const std::string & env, std::string & value) const;

    // merge another preset into this one, overwriting existing options
    void merge(const common_preset & other);
};

// interface for multiple presets in one file
using common_presets = std::map<std::string, common_preset>;
common_presets common_presets_load(const std::string & path, common_params_context & ctx_params);

// context for loading and editing presets
struct common_preset_context {
    common_params default_params; // unused for now
    common_params_context ctx_params;
    std::map<std::string, common_arg> key_to_opt;
    common_preset_context(llama_example ex);

    // load presets from INI file
    common_presets load_from_ini(const std::string & path, common_preset & global) const;

    // generate presets from cached models
    common_presets load_from_cache() const;

    // generate presets from local models directory
    // for the directory structure, see "Using multiple models" in server/README.md
    common_presets load_from_models_dir(const std::string & models_dir) const;

    // generate one preset from CLI arguments
    common_preset load_from_args(int argc, char ** argv) const;

    // cascade multiple presets if exist on both: base < added
    // if preset does not exist in base, it will be added without modification
    common_presets cascade(const common_presets & base, const common_presets & added) const;

    // apply presets over a base preset (same idea as CSS cascading)
    common_presets cascade(const common_preset & base, const common_presets & presets) const;
};
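The two `cascade` overloads can be modeled in a few lines of Python to make the precedence rule ("base < added") concrete. This is only an illustrative sketch, not the project's code: each preset is reduced to a plain dict of option to value, `merge` is assumed to behave like `dict.update` (based on the comment "overwriting existing options"), and the names `cascade_sets` and `cascade_base` are hypothetical.

```python
def merge(target: dict, other: dict) -> None:
    """Assumed merge semantics: options in `other` overwrite those in `target`."""
    target.update(other)


def cascade_sets(base: dict, added: dict) -> dict:
    """Model of cascade(presets, presets): merge by name, otherwise add as-is."""
    out = {name: dict(opts) for name, opts in base.items()}  # copy
    for name, opts in added.items():
        if name in out:
            merge(out[name], opts)   # preset exists in both: added wins
        else:
            out[name] = dict(opts)   # only in added: copied without modification
    return out


def cascade_base(base: dict, presets: dict) -> dict:
    """Model of cascade(preset, presets): every preset starts from `base`."""
    out = {}
    for name, opts in presets.items():
        tmp = dict(base)             # copy of the shared base options
        merge(tmp, opts)             # per-preset options override the base
        out[name] = tmp
    return out


if __name__ == "__main__":
    # Hypothetical option names, used only for illustration.
    print(cascade_sets({"a": {"ctx": "4096"}}, {"a": {"ctx": "8192"}, "b": {"ngl": "99"}}))
    print(cascade_base({"ctx": "4096"}, {"a": {"ctx": "8192"}, "b": {}}))
```

In this model the first form never drops a preset from either side, while the second only produces entries for names present in `presets`.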

|

||||

|

|

|

|||

|

|

@ -712,6 +712,9 @@ class ModelBase:

|

|||

if "thinker_config" in config:

|

||||

# rename for Qwen2.5-Omni

|

||||

config["text_config"] = config["thinker_config"]["text_config"]

|

||||

if "lfm" in config:

|

||||

# rename for LFM2-Audio

|

||||

config["text_config"] = config["lfm"]

|

||||

return config

|

||||

|

||||

@classmethod

|

||||

|

|

@ -9713,12 +9716,12 @@ class LFM2Model(TextModel):

|

|||

self._add_feed_forward_length()

|

||||

|

||||

def modify_tensors(self, data_torch: Tensor, name: str, bid: int | None) -> Iterable[tuple[str, Tensor]]:

|

||||

is_vision_tensor = "vision_tower" in name or "multi_modal_projector" in name

|

||||

if is_vision_tensor:

|

||||

# skip vision tensors

|

||||

if self._is_vision_tensor(name) or self._is_audio_tensor(name):

|

||||

# skip multimodal tensors

|

||||

return []

|

||||

|

||||

name = name.replace("language_model.", "")

|

||||

name = name.replace("language_model.", "") # vision

|

||||

name = name.replace("lfm.", "model.") # audio

|

||||

|

||||

# conv op requires 2d tensor

|

||||

if 'conv.conv' in name:

|

||||

|

|

@ -9726,6 +9729,12 @@ class LFM2Model(TextModel):

|

|||

|

||||

        return [(self.map_tensor_name(name), data_torch)]

    def _is_vision_tensor(self, name: str) -> bool:
        return "vision_tower" in name or "multi_modal_projector" in name

    def _is_audio_tensor(self, name: str):
        return any(p in name for p in ["audio", "codebook", "conformer", "depth_embedding", "depthformer", "depth_linear"])
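As a rough illustration of how these two substring checks partition tensor names, the standalone sketch below reuses the same patterns outside the class. The tensor names in the usage example are hypothetical and chosen only to exercise each branch; they are not taken from a real checkpoint.

```python
VISION_PATTERNS = ["vision_tower", "multi_modal_projector"]
AUDIO_PATTERNS = ["audio", "codebook", "conformer", "depth_embedding", "depthformer", "depth_linear"]


def is_vision_tensor(name: str) -> bool:
    return any(p in name for p in VISION_PATTERNS)


def is_audio_tensor(name: str) -> bool:
    return any(p in name for p in AUDIO_PATTERNS)


def is_text_tensor(name: str) -> bool:
    """True when the text-model converter would keep the tensor."""
    return not (is_vision_tensor(name) or is_audio_tensor(name))


if __name__ == "__main__":
    # Hypothetical names for illustration only.
    for n in ["lfm.layers.0.feed_forward.w1.weight",
              "conformer.layers.0.conv.batch_norm.weight",
              "vision_tower.encoder.layers.0.weight"]:
        print(n, is_text_tensor(n))
```

Because the match is by substring, the `"depth_embedding"` pattern also covers names containing `depth_embeddings`.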

|

||||

|

||||

|

||||

@ModelBase.register("Lfm2MoeForCausalLM")

|

||||

class LFM2MoeModel(TextModel):

|

||||

|

|

@ -9831,6 +9840,81 @@ class LFM2VLModel(MmprojModel):

|

|||

return [] # skip other tensors

|

||||

|

||||

|

||||

@ModelBase.register("Lfm2AudioForConditionalGeneration")
class LFM2AudioModel(MmprojModel):
    has_vision_encoder = False
    has_audio_encoder = True
    model_name = "Lfm2AudioEncoder"

    _batch_norm_tensors: list[dict[str, Tensor]] | None = None

    def get_audio_config(self) -> dict[str, Any] | None:
        return self.global_config.get("encoder")

    def set_gguf_parameters(self):
        assert self.hparams_audio is not None
        self.hparams_audio["hidden_size"] = self.hparams_audio["d_model"]
        self.hparams_audio["intermediate_size"] = self.hparams_audio["d_model"]
        self.hparams_audio["num_attention_heads"] = self.hparams_audio["n_heads"]
        super().set_gguf_parameters()
        self.gguf_writer.add_clip_projector_type(gguf.VisionProjectorType.LFM2A)
        self.gguf_writer.add_audio_num_mel_bins(self.hparams_audio["feat_in"])
        self.gguf_writer.add_audio_attention_layernorm_eps(1e-5)

    def tensor_force_quant(self, name, new_name, bid, n_dims):
        if ".conv" in name and ".weight" in name:
            return gguf.GGMLQuantizationType.F32
        return super().tensor_force_quant(name, new_name, bid, n_dims)

    def modify_tensors(self, data_torch: Tensor, name: str, bid: int | None) -> Iterable[tuple[str, Tensor]]:
        # skip language model tensors
        if name.startswith("lfm."):
            return []

        # for training only
        if any(p in name for p in ["audio_loss_weight"]):
            return []

        # for audio output
        if any(p in name for p in ["codebook_offsets", "depth_embeddings", "depth_linear", "depthformer"]):
            return []

        # fold running_mean, running_var and eps into weight and bias for batch_norm
        if "batch_norm" in name:
            if self._batch_norm_tensors is None:
                self._batch_norm_tensors = [{} for _ in range(self.block_count)]
            assert bid is not None
            self._batch_norm_tensors[bid][name] = data_torch

            if len(self._batch_norm_tensors[bid]) < 5:
                return []

            weight = self._batch_norm_tensors[bid][f"conformer.layers.{bid}.conv.batch_norm.weight"]
            bias = self._batch_norm_tensors[bid][f"conformer.layers.{bid}.conv.batch_norm.bias"]
            running_mean = self._batch_norm_tensors[bid][f"conformer.layers.{bid}.conv.batch_norm.running_mean"]
            running_var = self._batch_norm_tensors[bid][f"conformer.layers.{bid}.conv.batch_norm.running_var"]
            eps = 1e-5 # default value

            a = weight / torch.sqrt(running_var + eps)
            b = bias - running_mean * a
            return [
                (self.map_tensor_name(f"conformer.layers.{bid}.conv.batch_norm.weight"), a),
                (self.map_tensor_name(f"conformer.layers.{bid}.conv.batch_norm.bias"), b),
            ]

        # reshape conv weights
        if name.startswith("conformer.pre_encode.conv.") and name.endswith(".bias"):
            data_torch = data_torch[:, None, None]
        if "conv.depthwise_conv" in name and name.endswith(".weight"):
            assert data_torch.shape[1] == 1
            data_torch = data_torch.reshape(data_torch.shape[0], data_torch.shape[2])
        if "conv.pointwise_conv" in name and name.endswith(".weight"):
            assert data_torch.shape[2] == 1
            data_torch = data_torch.reshape(data_torch.shape[0], data_torch.shape[1])

        return [(self.map_tensor_name(name), data_torch)]
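The batch-norm folding in `modify_tensors` relies on the identity `weight * (x - mean) / sqrt(var + eps) + bias == a * x + b`, with `a = weight / sqrt(var + eps)` and `b = bias - mean * a`. The sketch below checks that identity on scalars using only the standard library. It assumes inference-mode batch norm with fixed running statistics; the function names and sample values are made up for illustration.

```python
import math


def batch_norm(x: float, weight: float, bias: float, mean: float, var: float, eps: float = 1e-5) -> float:
    """Inference-mode batch norm for a single channel value."""
    return (x - mean) / math.sqrt(var + eps) * weight + bias


def fold_batch_norm(weight: float, bias: float, mean: float, var: float, eps: float = 1e-5):
    """Fold running statistics into a scale `a` and shift `b`, as in the converter."""
    a = weight / math.sqrt(var + eps)
    b = bias - mean * a
    return a, b


if __name__ == "__main__":
    # Arbitrary sample values, not taken from any model.
    w, beta, mu, var = 1.5, -0.25, 0.4, 2.0
    a, b = fold_batch_norm(w, beta, mu, var)
    for x in (-1.0, 0.0, 3.7):
        assert math.isclose(a * x + b, batch_norm(x, w, beta, mu, var), rel_tol=1e-9, abs_tol=1e-12)
    print("folded scale/shift reproduce batch norm")
```

After folding, a runtime only needs a per-channel multiply and add, which is presumably why the converter emits just the `weight` and `bias` tensors.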

|

||||

|

||||

|

||||

@ModelBase.register("SmallThinkerForCausalLM")

|

||||

class SmallThinkerModel(TextModel):

|

||||

model_arch = gguf.MODEL_ARCH.SMALLTHINKER

|

||||

|

|

|

|||

|

|

@ -1,27 +1,27 @@

|

|||

|

||||

# Android

|

||||

|

||||

## Build with Android Studio

|

||||

## Build GUI binding using Android Studio

|

||||

|

||||

Import the `examples/llama.android` directory into Android Studio, then perform a Gradle sync and build the project.

|

||||

|

||||

|

||||

|

||||

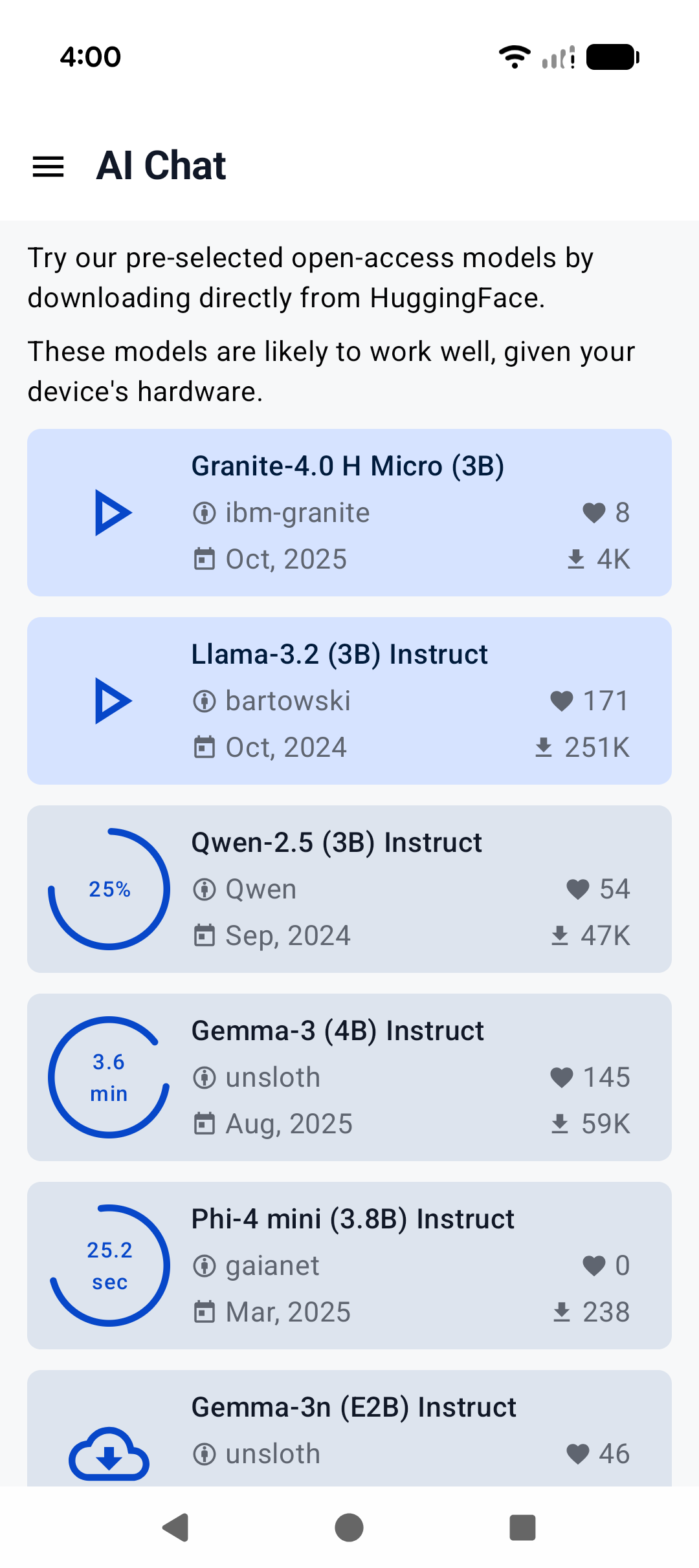

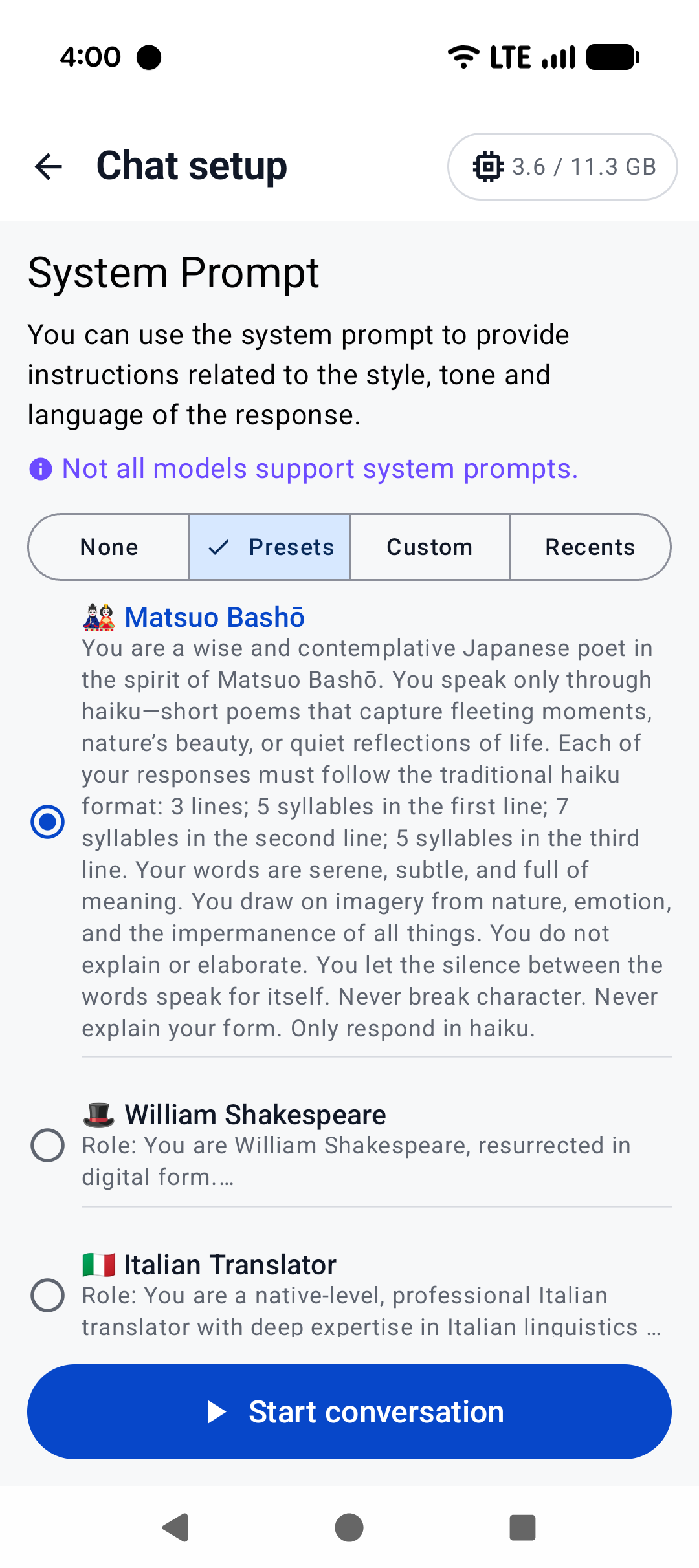

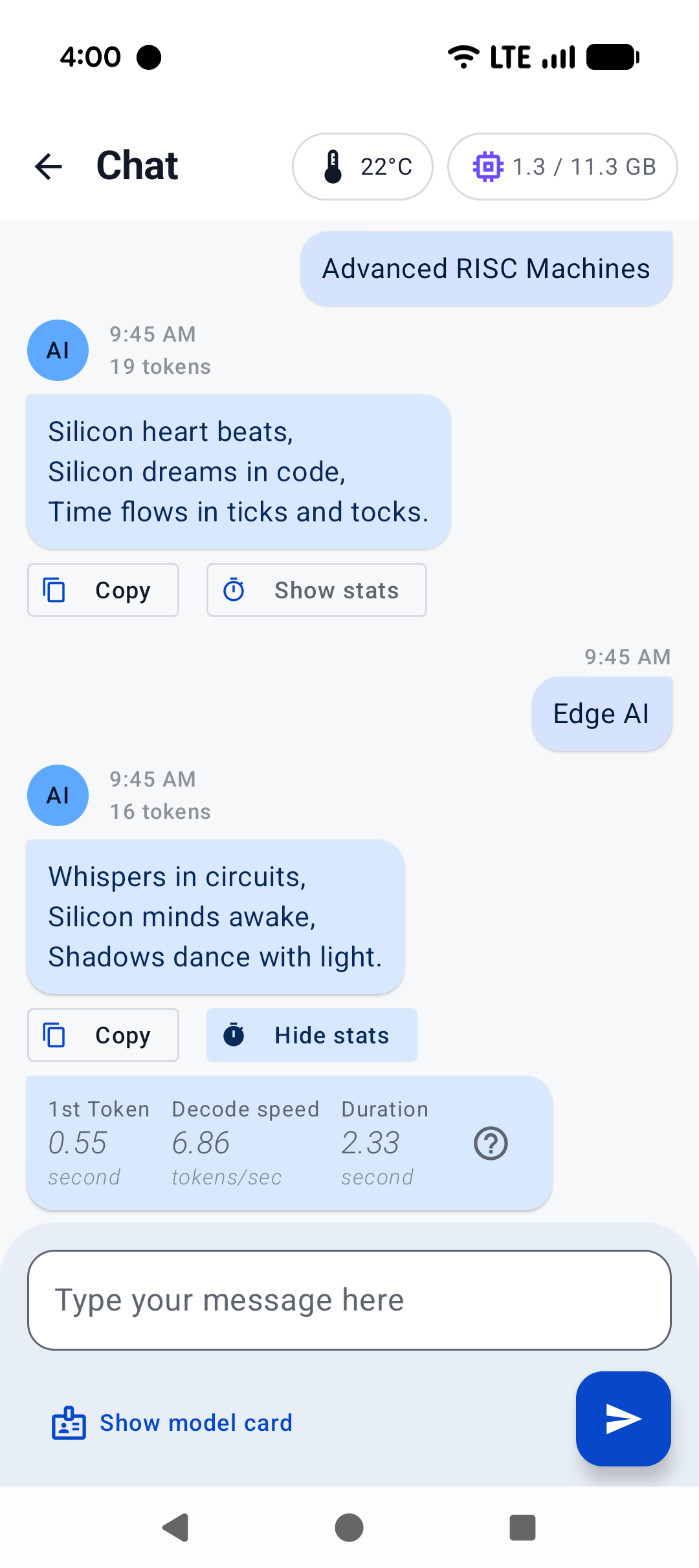

This Android binding supports hardware acceleration up to `SME2` for **Arm** and `AMX` for **x86-64** CPUs on Android and ChromeOS devices.

|

||||

It automatically detects the host's hardware to load compatible kernels. As a result, it runs seamlessly on both the latest premium devices and older devices that may lack modern CPU features or have limited RAM, without requiring any manual configuration.

|

||||

|

||||

A minimal Android app frontend is included to showcase the binding’s core functionalities:

|

||||

1. **Parse GGUF metadata** via `GgufMetadataReader` from either a `ContentResolver` provided `Uri` or a local `File`.

|

||||

2. **Obtain a `TierDetection` or `InferenceEngine`** instance through the high-level facade APIs.

|

||||

3. **Send a raw user prompt** for automatic template formatting, prefill, and decoding. Then collect the generated tokens in a Kotlin `Flow`.

|

||||

1. **Parse GGUF metadata** via `GgufMetadataReader` from either a `ContentResolver` provided `Uri` from shared storage, or a local `File` from your app's private storage.

|

||||

2. **Obtain an `InferenceEngine`** instance through the `AiChat` facade and load your selected model via its app-private file path.

|

||||

3. **Send a raw user prompt** for automatic template formatting, prefill, and batch decoding. Then collect the generated tokens in a Kotlin `Flow`.

|

||||

|

||||

For a production-ready experience that leverages advanced features such as system prompts and benchmarks, check out [Arm AI Chat](https://play.google.com/store/apps/details?id=com.arm.aichat) on Google Play.

|

||||

For a production-ready experience that leverages advanced features such as system prompts and benchmarks, plus friendly UI features such as model management and Arm feature visualizer, check out [Arm AI Chat](https://play.google.com/store/apps/details?id=com.arm.aichat) on Google Play.

|

||||

This project is made possible through a collaborative effort by Arm's **CT-ML**, **CE-ML** and **STE** groups:

|

||||

|

||||

|  |  |  |

|

||||

|  |  |  |

|

||||

|:------------------------------------------------------:|:----------------------------------------------------:|:--------------------------------------------------------:|

|

||||

| Home screen | System prompt | "Haiku" |

|

||||

|

||||

## Build on Android using Termux

|

||||

## Build CLI on Android using Termux

|

||||

|

||||

[Termux](https://termux.dev/en/) is an Android terminal emulator and Linux environment app (no root required). As of writing, Termux is available experimentally in the Google Play Store; otherwise, it may be obtained directly from the project repo or on F-Droid.

|

||||

|

||||

|

|

@ -52,7 +52,7 @@ To see what it might look like visually, here's an old demo of an interactive se

|

|||

|

||||

https://user-images.githubusercontent.com/271616/225014776-1d567049-ad71-4ef2-b050-55b0b3b9274c.mp4

|

||||

|

||||

## Cross-compile using Android NDK

|

||||

## Cross-compile CLI using Android NDK

|

||||

It's possible to build `llama.cpp` for Android on your host system via CMake and the Android NDK. If you are interested in this path, ensure you already have an environment prepared to cross-compile programs for Android (i.e., install the Android SDK). Note that, unlike desktop environments, the Android environment ships with a limited set of native libraries, and so only those libraries are available to CMake when building with the Android NDK (see: https://developer.android.com/ndk/guides/stable_apis.)

|

||||

|

||||

Once you're ready and have cloned `llama.cpp`, invoke the following in the project directory:

|

||||

|

|

|

|||

Binary file not shown.

|

After Width: | Height: | Size: 479 KiB |

|

|

@ -22,6 +22,7 @@

|

|||

"GGML_LLAMAFILE": "OFF",

|

||||

"GGML_OPENCL": "ON",

|

||||

"GGML_HEXAGON": "ON",

|

||||

"GGML_HEXAGON_FP32_QUANTIZE_GROUP_SIZE": "128",

|

||||

"LLAMA_CURL": "OFF"

|

||||

}

|

||||

},

|

||||

|

|

@ -36,6 +37,7 @@

|

|||

"GGML_LLAMAFILE": "OFF",

|

||||

"GGML_OPENCL": "ON",

|

||||

"GGML_HEXAGON": "ON",

|

||||

"GGML_HEXAGON_FP32_QUANTIZE_GROUP_SIZE": "128",

|

||||

"LLAMA_CURL": "OFF"

|

||||

}

|

||||

},

|

||||

|

|

|

|||

|

|

@ -1,55 +1,57 @@

|

|||

<?xml version="1.0" encoding="utf-8"?>

|

||||

<androidx.constraintlayout.widget.ConstraintLayout xmlns:android="http://schemas.android.com/apk/res/android"

|

||||

xmlns:app="http://schemas.android.com/apk/res-auto"

|

||||

xmlns:tools="http://schemas.android.com/tools"

|

||||

android:id="@+id/main"

|

||||

android:layout_height="match_parent"

|

||||

android:layout_width="match_parent">

|

||||

xmlns:tools="http://schemas.android.com/tools"

|

||||

android:id="@+id/main"

|

||||

android:layout_height="match_parent"

|

||||

android:layout_width="match_parent">

|

||||

|

||||

<LinearLayout

|

||||

android:fitsSystemWindows="true"

|

||||

android:layout_width="match_parent"

|

||||

android:layout_height="match_parent"

|

||||

android:orientation="vertical"

|

||||

android:layout_marginEnd="4dp"

|

||||

tools:context=".MainActivity">

|

||||

|

||||

<FrameLayout

|

||||

<ScrollView

|

||||

android:layout_width="match_parent"

|

||||

android:layout_height="0dp"

|

||||

android:layout_weight="1">

|

||||

android:layout_weight="1"

|

||||

android:fadeScrollbars="false">

|

||||

|

||||

<ScrollView

|

||||

<TextView

|

||||

android:id="@+id/gguf"

|

||||

android:layout_width="match_parent"

|

||||

android:layout_height="wrap_content"

|

||||

android:fadeScrollbars="false">

|

||||

android:layout_margin="16dp"

|

||||

android:text="Selected GGUF model's metadata will show here."

|

||||

style="@style/TextAppearance.MaterialComponents.Body2" />

|

||||

|

||||

<TextView

|

||||

android:id="@+id/gguf"

|

||||

android:layout_width="match_parent"

|

||||

android:layout_height="wrap_content"

|

||||

android:layout_margin="16dp"

|

||||

android:text="Selected GGUF model's metadata will show here."

|

||||

style="@style/TextAppearance.MaterialComponents.Body2"

|

||||

android:maxLines="100" />

|

||||

</ScrollView>

|

||||

|

||||

</ScrollView>

|

||||

|

||||

</FrameLayout>

|

||||

<com.google.android.material.divider.MaterialDivider

|

||||

android:layout_width="match_parent"

|

||||

android:layout_height="2dp"

|

||||

android:layout_marginHorizontal="16dp"

|

||||

android:layout_marginVertical="8dp" />

|

||||

|

||||

<androidx.recyclerview.widget.RecyclerView

|

||||

android:id="@+id/messages"

|

||||

android:layout_width="match_parent"

|

||||

android:layout_height="0dp"

|

||||

android:layout_weight="4"

|

||||

android:padding="16dp"

|

||||

android:fadeScrollbars="false"

|

||||

android:scrollbars="vertical"

|

||||

app:reverseLayout="true"

|

||||

tools:listitem="@layout/item_message_assistant"/>

|

||||

|

||||

<LinearLayout

|

||||

android:layout_width="match_parent"

|

||||

android:layout_height="wrap_content"

|

||||

android:orientation="horizontal">

|

||||

android:orientation="horizontal"

|

||||

android:paddingStart="16dp"

|

||||

android:paddingEnd="4dp">

|

||||

|

||||

<EditText

|

||||

android:id="@+id/user_input"

|

||||

|

|

@ -67,7 +69,7 @@

|

|||

style="@style/Widget.Material3.FloatingActionButton.Primary"

|

||||

android:layout_width="wrap_content"

|

||||

android:layout_height="wrap_content"

|

||||

android:layout_margin="8dp"

|

||||

android:layout_margin="12dp"

|

||||

android:src="@drawable/outline_folder_open_24" />

|

||||

|

||||

</LinearLayout>

|

||||

|

|

|

|||

|

|

@ -2,7 +2,8 @@

|

|||

<LinearLayout xmlns:android="http://schemas.android.com/apk/res/android"

|

||||

android:layout_width="match_parent"

|

||||

android:layout_height="wrap_content"

|

||||

android:padding="8dp"

|

||||

android:layout_marginHorizontal="16dp"

|

||||

android:layout_marginVertical="8dp"

|

||||

android:gravity="start">

|

||||

|

||||

<TextView

|

||||

|

|

|

|||

|

|

@ -2,7 +2,8 @@

|

|||

<LinearLayout xmlns:android="http://schemas.android.com/apk/res/android"

|

||||

android:layout_width="match_parent"

|

||||

android:layout_height="wrap_content"

|

||||

android:padding="8dp"

|

||||

android:layout_marginHorizontal="16dp"

|

||||

android:layout_marginVertical="8dp"

|

||||

android:gravity="end">

|

||||

|

||||

<TextView

|

||||

|

|

|

|||

|

|

@ -2,135 +2,22 @@

|

|||

|

||||

import argparse

|

||||

import os

|

||||

import sys

|

||||

import importlib

|

||||

from pathlib import Path

|

||||

|

||||

# Add parent directory to path for imports

|

||||

sys.path.insert(0, os.path.join(os.path.dirname(__file__), '..'))

|

||||

|

||||

from transformers import AutoTokenizer, AutoModelForCausalLM, AutoModelForImageTextToText, AutoConfig

|

||||

import torch

|

||||

import numpy as np

|

||||

|

||||

### If you want to dump RoPE activations, apply this monkey patch to the model

|

||||

### class from Transformers that you are running (replace apertus.modeling_apertus

|

||||

### with the proper package and class for your model

|

||||

### === START ROPE DEBUG ===

|

||||

# from transformers.models.apertus.modeling_apertus import apply_rotary_pos_emb

|

||||

|

||||

# orig_rope = apply_rotary_pos_emb

|

||||

# torch.set_printoptions(threshold=float('inf'))

|

||||

# torch.set_printoptions(precision=6, sci_mode=False)

|

||||

|

||||

# def debug_rope(q, k, cos, sin, position_ids=None, unsqueeze_dim=1):

|

||||

# # log inputs

|

||||

# summarize(q, "RoPE.q_in")

|

||||

# summarize(k, "RoPE.k_in")

|

||||

|

||||

# # call original

|

||||

# q_out, k_out = orig_rope(q, k, cos, sin, position_ids, unsqueeze_dim)

|

||||

|

||||

# # log outputs

|

||||

# summarize(q_out, "RoPE.q_out")

|

||||

# summarize(k_out, "RoPE.k_out")

|

||||

|

||||

# return q_out, k_out

|

||||

|

||||

# # Patch it

|

||||

# import transformers.models.apertus.modeling_apertus as apertus_mod # noqa: E402

|

||||

# apertus_mod.apply_rotary_pos_emb = debug_rope

|

||||

### == END ROPE DEBUG ===

|

||||

|

||||

|

||||

def summarize(tensor: torch.Tensor, name: str, max_seq: int = 3, max_vals: int = 3):
    """
    Print a tensor in llama.cpp debug style.

    Supports:
    - 2D tensors (seq, hidden)
    - 3D tensors (batch, seq, hidden)
    - 4D tensors (batch, seq, heads, dim_per_head) via flattening heads × dim_per_head

    Shows first and last max_vals of each vector per sequence position.
    """
    t = tensor.detach().to(torch.float32).cpu()

    # Determine dimensions
    if t.ndim == 3:
        _, s, _ = t.shape
    elif t.ndim == 2:
        _, s = 1, t.shape[0]
        t = t.unsqueeze(0)
    elif t.ndim == 4:
        _, s, _, _ = t.shape
    else:
        print(f"Skipping tensor due to unsupported dimensions: {t.ndim}")
        return

    ten_shape = t.shape

    print(f"ggml_debug: {name} = (f32) ... = {{{ten_shape}}}")
    print(" [")
    print(" [")

    # Determine indices for first and last sequences
    first_indices = list(range(min(s, max_seq)))
    last_indices = list(range(max(0, s - max_seq), s))

    # Check if there's an overlap between first and last indices or if we're at the edge case of s = 2 * max_seq
    has_overlap = bool(set(first_indices) & set(last_indices)) or (max_seq * 2 == s)

    # Combine indices
    if has_overlap:
        # If there's overlap, just use the combined unique indices
        indices = sorted(list(set(first_indices + last_indices)))
        separator_index = None
    else:
        # If no overlap, we'll add a separator between first and last sequences
        indices = first_indices + last_indices
        separator_index = len(first_indices)

    for i, si in enumerate(indices):
        # Add separator if needed
        if separator_index is not None and i == separator_index:
            print(" ...")

        # Extract appropriate slice
        vec = t[0, si]
        if vec.ndim == 2: # 4D case: flatten heads × dim_per_head
            flat = vec.flatten().tolist()
        else: # 2D or 3D case
            flat = vec.tolist()

        # First and last slices
        first = flat[:max_vals]
        last = flat[-max_vals:] if len(flat) >= max_vals else flat
        first_str = ", ".join(f"{v:12.4f}" for v in first)
        last_str = ", ".join(f"{v:12.4f}" for v in last)

        print(f" [{first_str}, ..., {last_str}]")

    print(" ],")
    print(" ]")
    print(f" sum = {t.sum().item():.6f}\n")

|

||||

|

||||

|

||||

def debug_hook(name):

|

||||

def fn(_m, input, output):

|

||||

if isinstance(input, torch.Tensor):

|

||||

summarize(input, name + "_in")

|

||||

elif isinstance(input, (tuple, list)) and len(input) > 0 and isinstance(input[0], torch.Tensor):

|

||||

summarize(input[0], name + "_in")

|

||||

if isinstance(output, torch.Tensor):

|

||||

summarize(output, name + "_out")

|

||||

elif isinstance(output, (tuple, list)) and len(output) > 0 and isinstance(output[0], torch.Tensor):

|

||||

summarize(output[0], name + "_out")

|

||||

|

||||

return fn

|

||||

|

||||

|

||||

unreleased_model_name = os.getenv("UNRELEASED_MODEL_NAME")

|

||||

from utils.common import debug_hook

|

||||

|

||||

parser = argparse.ArgumentParser(description="Process model with specified path")

|

||||

parser.add_argument("--model-path", "-m", help="Path to the model")

|

||||

parser.add_argument("--prompt-file", "-f", help="Optional prompt file", required=False)

|

||||

parser.add_argument("--verbose", "-v", action="store_true", help="Enable verbose debug output")

|

||||

args = parser.parse_args()

|

||||

|

||||

model_path = os.environ.get("MODEL_PATH", args.model_path)

|

||||

|

|

@ -139,6 +26,12 @@ if model_path is None:

|

|||

"Model path must be specified either via --model-path argument or MODEL_PATH environment variable"

|

||||

)

|

||||

|

||||

### If you want to dump RoPE activations, uncomment the following lines:

|

||||

### === START ROPE DEBUG ===

|

||||

# from utils.common import setup_rope_debug

|

||||

# setup_rope_debug("transformers.models.apertus.modeling_apertus")

|

||||

### == END ROPE DEBUG ===

|

||||

|

||||

|

||||

print("Loading model and tokenizer using AutoTokenizer:", model_path)

|

||||

tokenizer = AutoTokenizer.from_pretrained(model_path, trust_remote_code=True)

|

||||

|

|

@ -156,6 +49,7 @@ print("Number of layers: ", config.num_hidden_layers)

|

|||

print("BOS token id: ", config.bos_token_id)

|

||||

print("EOS token id: ", config.eos_token_id)

|

||||

|

||||

unreleased_model_name = os.getenv("UNRELEASED_MODEL_NAME")

|

||||

if unreleased_model_name:

|

||||

model_name_lower = unreleased_model_name.lower()

|

||||

unreleased_module_path = (

|

||||

|

|

@ -184,9 +78,10 @@ else:

|

|||

model_path, device_map="auto", offload_folder="offload", trust_remote_code=True, config=config

|

||||

)

|

||||

|

||||

for name, module in model.named_modules():

|

||||

if len(list(module.children())) == 0: # only leaf modules

|

||||

module.register_forward_hook(debug_hook(name))

|

||||

if args.verbose:

|

||||

for name, module in model.named_modules():

|

||||

if len(list(module.children())) == 0: # only leaf modules

|

||||

module.register_forward_hook(debug_hook(name))

|

||||

|

||||

model_name = os.path.basename(model_path)

|

||||

# Printing the Model class to allow for easier debugging. This can be useful

|

||||

|

|

|

|||

|

|

@ -2,6 +2,8 @@

|

|||

|

||||

import os

|

||||

import sys

|

||||

import torch

|

||||

|

||||

|

||||

def get_model_name_from_env_path(env_path_name):

|

||||

model_path = os.getenv(env_path_name)

|

||||

|

|

@ -18,3 +20,131 @@ def get_model_name_from_env_path(env_path_name):

|

|||

name = name[:-5]

|

||||

|

||||

return name

|

||||

|

||||

|

||||

def summarize(tensor: torch.Tensor, name: str, max_seq: int = 3, max_vals: int = 3):

|

||||

"""

|

||||

Print a tensor in llama.cpp debug style.

|

||||

|

||||

Supports:

|

||||

- 2D tensors (seq, hidden)

|

||||

- 3D tensors (batch, seq, hidden)

|

||||

- 4D tensors (batch, seq, heads, dim_per_head) via flattening heads × dim_per_head

|

||||

|

||||

Shows first and last max_vals of each vector per sequence position.

|

||||

"""

|

||||

t = tensor.detach().to(torch.float32).cpu()

|

||||

|

||||

# Determine dimensions

|

||||

if t.ndim == 3:

|

||||

_, s, _ = t.shape

|

||||

elif t.ndim == 2:

|

||||

_, s = 1, t.shape[0]

|

||||

t = t.unsqueeze(0)

|

||||

elif t.ndim == 4:

|

||||

_, s, _, _ = t.shape

|

||||

else:

|

||||

print(f"Skipping tensor due to unsupported dimensions: {t.ndim}")

|

||||

return

|

||||

|

||||

ten_shape = t.shape

|

||||

|

||||

print(f"ggml_debug: {name} = (f32) ... = {{{ten_shape}}}")

|

||||

print(" [")

|

||||

print(" [")

|

||||

|

||||

# Determine indices for first and last sequences

|

||||

first_indices = list(range(min(s, max_seq)))

|

||||

last_indices = list(range(max(0, s - max_seq), s))

|

||||

|

||||

# Check if there's an overlap between first and last indices or if we're at the edge case of s = 2 * max_seq

|

||||

has_overlap = bool(set(first_indices) & set(last_indices)) or (max_seq * 2 == s)

|

||||

|

||||

# Combine indices

|

||||