diff --git a/.github/ISSUE_TEMPLATE/019-bug-misc.yml b/.github/ISSUE_TEMPLATE/019-bug-misc.yml

index 1904e31fdc..e1bd08ddd2 100644

--- a/.github/ISSUE_TEMPLATE/019-bug-misc.yml

+++ b/.github/ISSUE_TEMPLATE/019-bug-misc.yml

@@ -86,6 +86,7 @@ body:

description: >

If applicable, please copy and paste any relevant log output, including any generated text.

This will be automatically formatted into code, so no need for backticks.

+ If you are encountering problems specifically with the `llama_params_fit` module, always upload `--verbose` logs as well.

render: shell

validations:

required: false

diff --git a/.github/workflows/build.yml b/.github/workflows/build.yml

index af4c60be64..de3ad06065 100644

--- a/.github/workflows/build.yml

+++ b/.github/workflows/build.yml

@@ -70,6 +70,7 @@ jobs:

with:

key: macOS-latest-cmake-arm64

evict-old-files: 1d

+ save: ${{ github.event_name == 'push' && github.ref == 'refs/heads/master' }}

- name: Build

id: cmake_build

@@ -106,6 +107,7 @@ jobs:

with:

key: macOS-latest-cmake-x64

evict-old-files: 1d

+ save: ${{ github.event_name == 'push' && github.ref == 'refs/heads/master' }}

- name: Build

id: cmake_build

@@ -142,6 +144,7 @@ jobs:

with:

key: macOS-latest-cmake-arm64-webgpu

evict-old-files: 1d

+ save: ${{ github.event_name == 'push' && github.ref == 'refs/heads/master' }}

- name: Dawn Dependency

id: dawn-depends

@@ -195,6 +198,7 @@ jobs:

with:

key: ubuntu-cpu-cmake-${{ matrix.build }}

evict-old-files: 1d

+ save: ${{ github.event_name == 'push' && github.ref == 'refs/heads/master' }}

- name: Build Dependencies

id: build_depends

@@ -276,6 +280,7 @@ jobs:

with:

key: ubuntu-latest-cmake-sanitizer-${{ matrix.sanitizer }}

evict-old-files: 1d

+ save: ${{ github.event_name == 'push' && github.ref == 'refs/heads/master' }}

- name: Dependencies

id: depends

@@ -396,6 +401,7 @@ jobs:

with:

key: ubuntu-24-cmake-vulkan-deb

evict-old-files: 1d

+ save: ${{ github.event_name == 'push' && github.ref == 'refs/heads/master' }}

- name: Dependencies

id: depends

@@ -431,6 +437,7 @@ jobs:

with:

key: ubuntu-24-cmake-vulkan

evict-old-files: 1d

+ save: ${{ github.event_name == 'push' && github.ref == 'refs/heads/master' }}

- name: Dependencies

id: depends

@@ -490,6 +497,7 @@ jobs:

with:

key: ubuntu-24-cmake-webgpu

evict-old-files: 1d

+ save: ${{ github.event_name == 'push' && github.ref == 'refs/heads/master' }}

- name: Dependencies

id: depends

@@ -562,6 +570,7 @@ jobs:

with:

key: ubuntu-latest-wasm-webgpu

evict-old-files: 1d

+ save: ${{ github.event_name == 'push' && github.ref == 'refs/heads/master' }}

- name: Install Emscripten

run: |

@@ -609,6 +618,7 @@ jobs:

with:

key: ubuntu-22-cmake-hip

evict-old-files: 1d

+ save: ${{ github.event_name == 'push' && github.ref == 'refs/heads/master' }}

- name: Build with native CMake HIP support

id: cmake_build

@@ -641,6 +651,7 @@ jobs:

with:

key: ubuntu-22-cmake-musa

evict-old-files: 1d

+ save: ${{ github.event_name == 'push' && github.ref == 'refs/heads/master' }}

- name: Build with native CMake MUSA support

id: cmake_build

@@ -688,6 +699,7 @@ jobs:

with:

key: ubuntu-22-cmake-sycl

evict-old-files: 1d

+ save: ${{ github.event_name == 'push' && github.ref == 'refs/heads/master' }}

- name: Build

id: cmake_build

@@ -738,6 +750,7 @@ jobs:

with:

key: ubuntu-22-cmake-sycl-fp16

evict-old-files: 1d

+ save: ${{ github.event_name == 'push' && github.ref == 'refs/heads/master' }}

- name: Build

id: cmake_build

@@ -771,6 +784,7 @@ jobs:

with:

key: macOS-latest-cmake-ios

evict-old-files: 1d

+ save: ${{ github.event_name == 'push' && github.ref == 'refs/heads/master' }}

- name: Build

id: cmake_build

@@ -802,6 +816,7 @@ jobs:

with:

key: macOS-latest-cmake-tvos

evict-old-files: 1d

+ save: ${{ github.event_name == 'push' && github.ref == 'refs/heads/master' }}

- name: Build

id: cmake_build

@@ -863,6 +878,7 @@ jobs:

with:

key: macOS-latest-swift

evict-old-files: 1d

+ save: ${{ github.event_name == 'push' && github.ref == 'refs/heads/master' }}

- name: Download xcframework artifact

uses: actions/download-artifact@v4

@@ -905,6 +921,7 @@ jobs:

key: windows-msys2

variant: ccache

evict-old-files: 1d

+ save: ${{ github.event_name == 'push' && github.ref == 'refs/heads/master' }}

- name: Setup ${{ matrix.sys }}

uses: msys2/setup-msys2@v2

@@ -973,6 +990,7 @@ jobs:

key: windows-latest-cmake-${{ matrix.build }}

variant: ccache

evict-old-files: 1d

+ save: ${{ github.event_name == 'push' && github.ref == 'refs/heads/master' }}

- name: Download OpenBLAS

id: get_openblas

@@ -1077,6 +1095,7 @@ jobs:

with:

key: ubuntu-latest-cmake-cuda

evict-old-files: 1d

+ save: ${{ github.event_name == 'push' && github.ref == 'refs/heads/master' }}

- name: Build with CMake

run: |

@@ -1109,6 +1128,7 @@ jobs:

key: windows-cuda-${{ matrix.cuda }}

variant: ccache

evict-old-files: 1d

+ save: ${{ github.event_name == 'push' && github.ref == 'refs/heads/master' }}

- name: Install Cuda Toolkit

uses: ./.github/actions/windows-setup-cuda

@@ -1160,6 +1180,7 @@ jobs:

key: windows-latest-cmake-sycl

variant: ccache

evict-old-files: 1d

+ save: ${{ github.event_name == 'push' && github.ref == 'refs/heads/master' }}

- name: Install

run: |

@@ -1221,6 +1242,7 @@ jobs:

with:

key: ${{ github.job }}

evict-old-files: 1d

+ save: ${{ github.event_name == 'push' && github.ref == 'refs/heads/master' }}

- name: Build

id: cmake_build

@@ -1466,6 +1488,7 @@ jobs:

with:

key: ggml-ci-x64-cpu-low-perf

evict-old-files: 1d

+ save: ${{ github.event_name == 'push' && github.ref == 'refs/heads/master' }}

- name: Dependencies

id: depends

@@ -1491,6 +1514,7 @@ jobs:

with:

key: ggml-ci-arm64-cpu-low-perf

evict-old-files: 1d

+ save: ${{ github.event_name == 'push' && github.ref == 'refs/heads/master' }}

- name: Dependencies

id: depends

@@ -1516,6 +1540,7 @@ jobs:

with:

key: ggml-ci-x64-cpu-high-perf

evict-old-files: 1d

+ save: ${{ github.event_name == 'push' && github.ref == 'refs/heads/master' }}

- name: Dependencies

id: depends

@@ -1541,6 +1566,7 @@ jobs:

with:

key: ggml-ci-arm64-cpu-high-perf

evict-old-files: 1d

+ save: ${{ github.event_name == 'push' && github.ref == 'refs/heads/master' }}

- name: Dependencies

id: depends

@@ -1566,6 +1592,7 @@ jobs:

with:

key: ggml-ci-arm64-cpu-high-perf-sve

evict-old-files: 1d

+ save: ${{ github.event_name == 'push' && github.ref == 'refs/heads/master' }}

- name: Dependencies

id: depends

@@ -1701,6 +1728,7 @@ jobs:

with:

key: ggml-ci-arm64-cpu-kleidiai

evict-old-files: 1d

+ save: ${{ github.event_name == 'push' && github.ref == 'refs/heads/master' }}

- name: Dependencies

id: depends

@@ -2084,6 +2112,7 @@ jobs:

with:

key: ggml-ci-arm64-graviton4-kleidiai

evict-old-files: 1d

+ save: ${{ github.event_name == 'push' && github.ref == 'refs/heads/master' }}

- name: Test

id: ggml-ci

diff --git a/.github/workflows/release.yml b/.github/workflows/release.yml

index 446cae9f84..11f850511f 100644

--- a/.github/workflows/release.yml

+++ b/.github/workflows/release.yml

@@ -66,16 +66,9 @@ jobs:

id: pack_artifacts

run: |

cp LICENSE ./build/bin/

- zip -y -r llama-${{ steps.tag.outputs.name }}-bin-macos-arm64.zip ./build/bin/*

tar -czvf llama-${{ steps.tag.outputs.name }}-bin-macos-arm64.tar.gz -s ",./,llama-${{ steps.tag.outputs.name }}/," -C ./build/bin .

- - name: Upload artifacts (zip)

- uses: actions/upload-artifact@v4

- with:

- path: llama-${{ steps.tag.outputs.name }}-bin-macos-arm64.zip

- name: llama-bin-macos-arm64.zip

-

- - name: Upload artifacts (tar)

+ - name: Upload artifacts

uses: actions/upload-artifact@v4

with:

path: llama-${{ steps.tag.outputs.name }}-bin-macos-arm64.tar.gz

@@ -127,16 +120,9 @@ jobs:

id: pack_artifacts

run: |

cp LICENSE ./build/bin/

- zip -y -r llama-${{ steps.tag.outputs.name }}-bin-macos-x64.zip ./build/bin/*

tar -czvf llama-${{ steps.tag.outputs.name }}-bin-macos-x64.tar.gz -s ",./,llama-${{ steps.tag.outputs.name }}/," -C ./build/bin .

- - name: Upload artifacts (zip)

- uses: actions/upload-artifact@v4

- with:

- path: llama-${{ steps.tag.outputs.name }}-bin-macos-x64.zip

- name: llama-bin-macos-x64.zip

-

- - name: Upload artifacts (tar)

+ - name: Upload artifacts

uses: actions/upload-artifact@v4

with:

path: llama-${{ steps.tag.outputs.name }}-bin-macos-x64.tar.gz

@@ -196,16 +182,9 @@ jobs:

id: pack_artifacts

run: |

cp LICENSE ./build/bin/

- zip -y -r llama-${{ steps.tag.outputs.name }}-bin-ubuntu-${{ matrix.build }}.zip ./build/bin/*

tar -czvf llama-${{ steps.tag.outputs.name }}-bin-ubuntu-${{ matrix.build }}.tar.gz --transform "s,./,llama-${{ steps.tag.outputs.name }}/," -C ./build/bin .

- - name: Upload artifacts (zip)

- uses: actions/upload-artifact@v4

- with:

- path: llama-${{ steps.tag.outputs.name }}-bin-ubuntu-${{ matrix.build }}.zip

- name: llama-bin-ubuntu-${{ matrix.build }}.zip

-

- - name: Upload artifacts (tar)

+ - name: Upload artifacts

uses: actions/upload-artifact@v4

with:

path: llama-${{ steps.tag.outputs.name }}-bin-ubuntu-${{ matrix.build }}.tar.gz

@@ -256,16 +235,9 @@ jobs:

id: pack_artifacts

run: |

cp LICENSE ./build/bin/

- zip -y -r llama-${{ steps.tag.outputs.name }}-bin-ubuntu-vulkan-x64.zip ./build/bin/*

tar -czvf llama-${{ steps.tag.outputs.name }}-bin-ubuntu-vulkan-x64.tar.gz --transform "s,./,llama-${{ steps.tag.outputs.name }}/," -C ./build/bin .

- - name: Upload artifacts (zip)

- uses: actions/upload-artifact@v4

- with:

- path: llama-${{ steps.tag.outputs.name }}-bin-ubuntu-vulkan-x64.zip

- name: llama-bin-ubuntu-vulkan-x64.zip

-

- - name: Upload artifacts (tar)

+ - name: Upload artifacts

uses: actions/upload-artifact@v4

with:

path: llama-${{ steps.tag.outputs.name }}-bin-ubuntu-vulkan-x64.tar.gz

@@ -716,16 +688,9 @@ jobs:

- name: Pack artifacts

id: pack_artifacts

run: |

- zip -y -r llama-${{ steps.tag.outputs.name }}-xcframework.zip build-apple/llama.xcframework

tar -czvf llama-${{ steps.tag.outputs.name }}-xcframework.tar.gz -C build-apple llama.xcframework

- - name: Upload artifacts (zip)

- uses: actions/upload-artifact@v4

- with:

- path: llama-${{ steps.tag.outputs.name }}-xcframework.zip

- name: llama-${{ steps.tag.outputs.name }}-xcframework.zip

-

- - name: Upload artifacts (tar)

+ - name: Upload artifacts

uses: actions/upload-artifact@v4

with:

path: llama-${{ steps.tag.outputs.name }}-xcframework.tar.gz

@@ -797,7 +762,7 @@ jobs:

cp LICENSE ./build/bin/

tar -czvf llama-${{ steps.tag.outputs.name }}-bin-${{ matrix.chip_type }}-openEuler-${{ matrix.arch }}.tar.gz --transform "s,./,llama-${{ steps.tag.outputs.name }}/," -C ./build/bin .

- - name: Upload artifacts (tar)

+ - name: Upload artifacts

uses: actions/upload-artifact@v4

with:

path: llama-${{ steps.tag.outputs.name }}-bin-${{ matrix.chip_type }}-openEuler-${{ matrix.arch }}.tar.gz

@@ -889,9 +854,6 @@ jobs:

with:

tag_name: ${{ steps.tag.outputs.name }}

body: |

- > [!WARNING]

- > **Release Format Update**: Linux releases will soon use .tar.gz archives instead of .zip. Please make the necessary changes to your deployment scripts.

-

${{ github.event.head_commit.message }}

@@ -911,8 +873,8 @@ jobs:

**Windows:**

- [Windows x64 (CPU)](https://github.com/ggml-org/llama.cpp/releases/download/${{ steps.tag.outputs.name }}/llama-${{ steps.tag.outputs.name }}-bin-win-cpu-x64.zip)

- [Windows arm64 (CPU)](https://github.com/ggml-org/llama.cpp/releases/download/${{ steps.tag.outputs.name }}/llama-${{ steps.tag.outputs.name }}-bin-win-cpu-arm64.zip)

- - [Windows x64 (CUDA 12)](https://github.com/ggml-org/llama.cpp/releases/download/${{ steps.tag.outputs.name }}/llama-${{ steps.tag.outputs.name }}-bin-win-cuda-12.4-x64.zip)

- - [Windows x64 (CUDA 13)](https://github.com/ggml-org/llama.cpp/releases/download/${{ steps.tag.outputs.name }}/llama-${{ steps.tag.outputs.name }}-bin-win-cuda-13.1-x64.zip)

+ - [Windows x64 (CUDA 12)](https://github.com/ggml-org/llama.cpp/releases/download/${{ steps.tag.outputs.name }}/llama-${{ steps.tag.outputs.name }}-bin-win-cuda-12.4-x64.zip) - [CUDA 12.4 DLLs](https://github.com/ggml-org/llama.cpp/releases/download/${{ steps.tag.outputs.name }}/cudart-llama-bin-win-cuda-12.4-x64.zip)

+ - [Windows x64 (CUDA 13)](https://github.com/ggml-org/llama.cpp/releases/download/${{ steps.tag.outputs.name }}/llama-${{ steps.tag.outputs.name }}-bin-win-cuda-13.1-x64.zip) - [CUDA 13.1 DLLs](https://github.com/ggml-org/llama.cpp/releases/download/${{ steps.tag.outputs.name }}/cudart-llama-bin-win-cuda-13.1-x64.zip)

- [Windows x64 (Vulkan)](https://github.com/ggml-org/llama.cpp/releases/download/${{ steps.tag.outputs.name }}/llama-${{ steps.tag.outputs.name }}-bin-win-vulkan-x64.zip)

- [Windows x64 (SYCL)](https://github.com/ggml-org/llama.cpp/releases/download/${{ steps.tag.outputs.name }}/llama-${{ steps.tag.outputs.name }}-bin-win-sycl-x64.zip)

- [Windows x64 (HIP)](https://github.com/ggml-org/llama.cpp/releases/download/${{ steps.tag.outputs.name }}/llama-${{ steps.tag.outputs.name }}-bin-win-hip-radeon-x64.zip)

diff --git a/.github/workflows/server-webui.yml b/.github/workflows/server-webui.yml

index f8a261eefa..544c4ad408 100644

--- a/.github/workflows/server-webui.yml

+++ b/.github/workflows/server-webui.yml

@@ -31,9 +31,10 @@ concurrency:

cancel-in-progress: true

jobs:

- webui-setup:

- name: WebUI Setup

+ webui-check:

+ name: WebUI Checks

runs-on: ubuntu-latest

+ continue-on-error: true

steps:

- name: Checkout code

uses: actions/checkout@v4

@@ -42,137 +43,66 @@ jobs:

ref: ${{ github.event.inputs.sha || github.event.pull_request.head.sha || github.sha || github.head_ref || github.ref_name }}

- name: Setup Node.js

+ id: node

uses: actions/setup-node@v4

with:

node-version: "22"

cache: "npm"

cache-dependency-path: "tools/server/webui/package-lock.json"

- - name: Cache node_modules

- uses: actions/cache@v4

- id: cache-node-modules

- with:

- path: tools/server/webui/node_modules

- key: ${{ runner.os }}-node-modules-${{ hashFiles('tools/server/webui/package-lock.json') }}

- restore-keys: |

- ${{ runner.os }}-node-modules-

-

- name: Install dependencies

- if: steps.cache-node-modules.outputs.cache-hit != 'true'

+ id: setup

+ if: ${{ steps.node.conclusion == 'success' }}

run: npm ci

working-directory: tools/server/webui

- webui-check:

- needs: webui-setup

- name: WebUI Check

- runs-on: ubuntu-latest

- steps:

- - name: Checkout code

- uses: actions/checkout@v4

- with:

- fetch-depth: 0

- ref: ${{ github.event.inputs.sha || github.event.pull_request.head.sha || github.sha || github.head_ref || github.ref_name }}

-

- - name: Setup Node.js

- uses: actions/setup-node@v4

- with:

- node-version: "22"

-

- - name: Restore node_modules cache

- uses: actions/cache@v4

- with:

- path: tools/server/webui/node_modules

- key: ${{ runner.os }}-node-modules-${{ hashFiles('tools/server/webui/package-lock.json') }}

- restore-keys: |

- ${{ runner.os }}-node-modules-

-

- name: Run type checking

+ if: ${{ always() && steps.setup.conclusion == 'success' }}

run: npm run check

working-directory: tools/server/webui

- name: Run linting

+ if: ${{ always() && steps.setup.conclusion == 'success' }}

run: npm run lint

working-directory: tools/server/webui

- webui-build:

- needs: webui-check

- name: WebUI Build

- runs-on: ubuntu-latest

- steps:

- - name: Checkout code

- uses: actions/checkout@v4

- with:

- fetch-depth: 0

- ref: ${{ github.event.inputs.sha || github.event.pull_request.head.sha || github.sha || github.head_ref || github.ref_name }}

-

- - name: Setup Node.js

- uses: actions/setup-node@v4

- with:

- node-version: "22"

-

- - name: Restore node_modules cache

- uses: actions/cache@v4

- with:

- path: tools/server/webui/node_modules

- key: ${{ runner.os }}-node-modules-${{ hashFiles('tools/server/webui/package-lock.json') }}

- restore-keys: |

- ${{ runner.os }}-node-modules-

-

- name: Build application

+ if: ${{ always() && steps.setup.conclusion == 'success' }}

run: npm run build

working-directory: tools/server/webui

- webui-tests:

- needs: webui-build

- name: Run WebUI tests

- permissions:

- contents: read

-

- runs-on: ubuntu-latest

-

- steps:

- - name: Checkout code

- uses: actions/checkout@v4

-

- - name: Setup Node.js

- uses: actions/setup-node@v4

- with:

- node-version: "22"

-

- - name: Restore node_modules cache

- uses: actions/cache@v4

- with:

- path: tools/server/webui/node_modules

- key: ${{ runner.os }}-node-modules-${{ hashFiles('tools/server/webui/package-lock.json') }}

- restore-keys: |

- ${{ runner.os }}-node-modules-

-

- name: Install Playwright browsers

+ id: playwright

+ if: ${{ always() && steps.setup.conclusion == 'success' }}

run: npx playwright install --with-deps

working-directory: tools/server/webui

- name: Build Storybook

+ if: ${{ always() && steps.playwright.conclusion == 'success' }}

run: npm run build-storybook

working-directory: tools/server/webui

- name: Run Client tests

+ if: ${{ always() && steps.playwright.conclusion == 'success' }}

run: npm run test:client

working-directory: tools/server/webui

- - name: Run Server tests

- run: npm run test:server

+ - name: Run Unit tests

+ if: ${{ always() && steps.playwright.conclusion == 'success' }}

+ run: npm run test:unit

working-directory: tools/server/webui

- name: Run UI tests

+ if: ${{ always() && steps.playwright.conclusion == 'success' }}

run: npm run test:ui -- --testTimeout=60000

working-directory: tools/server/webui

- name: Run E2E tests

+ if: ${{ always() && steps.playwright.conclusion == 'success' }}

run: npm run test:e2e

working-directory: tools/server/webui

server-build:

- needs: [webui-tests]

runs-on: ubuntu-latest

strategy:

diff --git a/.github/copilot-instructions.md b/AGENTS.md

similarity index 88%

rename from .github/copilot-instructions.md

rename to AGENTS.md

index ad13c6ea8d..e0a65c0ab7 100644

--- a/.github/copilot-instructions.md

+++ b/AGENTS.md

@@ -1,4 +1,4 @@

-# Copilot Instructions for llama.cpp

+# Instructions for llama.cpp

## Repository Overview

@@ -12,6 +12,24 @@ llama.cpp is a large-scale C/C++ project for efficient LLM (Large Language Model

- **Backends supported**: CPU (AVX/NEON/RVV optimized), CUDA, Metal, Vulkan, SYCL, ROCm, MUSA

- **License**: MIT

+## Disclose AI Usage

+

+It is crucial to remind contributors that the project mandates disclosure of any AI usage in pull requests. This requirement stems from the potential for AI-generated code to include suboptimal optimizations and hidden bugs, owing to the inherent overconfidence in AI outputs.

+

+When generating significant portions of code, address this by:

+- Informing the user that AI-generated content may be rejected by maintainers.

+- Clearly marking AI-generated code in commit messages and comments.

+ - Example of commit message: `[AI] Fix a race condition in ...`

+ - Example of code comment: `// [AI] spawn a new thread ...`

+

+These measures apply to:

+- Changes resulting in large portions of code or complex logic.

+- Modifications or additions to public APIs in `llama.h`, `ggml.h`, or `mtmd.h`.

+- Backend-related changes, such as those involving CPU, CUDA, Metal, Vulkan, etc.

+- Modifications to `tools/server`.

+

+Note: These measures can be omitted for small fixes or trivial changes.

+

## Build Instructions

### Prerequisites

@@ -251,6 +269,7 @@ Primary tools:

- **Cross-platform compatibility**: Test on Linux, macOS, Windows when possible

- **Performance focus**: This is a performance-critical inference library

- **API stability**: Changes to `include/llama.h` require careful consideration

+- **Disclose AI Usage**: Refer to the "Disclose AI Usage" section earlier in this document

### Git Workflow

- Always create feature branches from `master`

diff --git a/CODEOWNERS b/CODEOWNERS

index 8a0c98c968..750096d9a1 100644

--- a/CODEOWNERS

+++ b/CODEOWNERS

@@ -32,7 +32,7 @@

/examples/export-docs/ @ggerganov

/examples/gen-docs/ @ggerganov

/examples/gguf/ @ggerganov

-/examples/llama.android/ @ggerganov

+/examples/llama.android/ @ggerganov @hanyin-arm @naco-siren

/examples/llama.swiftui/ @ggerganov

/examples/llama.vim @ggerganov

/examples/lookahead/ @ggerganov

diff --git a/README.md b/README.md

index 5f2076d0a3..ed956bb02e 100644

--- a/README.md

+++ b/README.md

@@ -190,6 +190,7 @@ Instructions for adding support for new models: [HOWTO-add-model.md](docs/develo

- Swift [ShenghaiWang/SwiftLlama](https://github.com/ShenghaiWang/SwiftLlama)

- Delphi [Embarcadero/llama-cpp-delphi](https://github.com/Embarcadero/llama-cpp-delphi)

- Go (no CGo needed): [hybridgroup/yzma](https://github.com/hybridgroup/yzma)

+- Android: [llama.android](/examples/llama.android)

diff --git a/common/CMakeLists.txt b/common/CMakeLists.txt

index 0182767c2b..f7b99159e3 100644

--- a/common/CMakeLists.txt

+++ b/common/CMakeLists.txt

@@ -85,6 +85,9 @@ add_library(${TARGET} STATIC

unicode.h

)

+target_include_directories(${TARGET} PUBLIC . ../vendor)

+target_compile_features (${TARGET} PUBLIC cxx_std_17)

+

if (BUILD_SHARED_LIBS)

set_target_properties(${TARGET} PROPERTIES POSITION_INDEPENDENT_CODE ON)

endif()

@@ -151,9 +154,7 @@ if (LLAMA_LLGUIDANCE)

set(LLAMA_COMMON_EXTRA_LIBS ${LLAMA_COMMON_EXTRA_LIBS} llguidance ${LLGUIDANCE_PLATFORM_LIBS})

endif ()

-target_include_directories(${TARGET} PUBLIC . ../vendor)

-target_compile_features (${TARGET} PUBLIC cxx_std_17)

-target_link_libraries (${TARGET} PRIVATE ${LLAMA_COMMON_EXTRA_LIBS} PUBLIC llama Threads::Threads)

+target_link_libraries(${TARGET} PRIVATE ${LLAMA_COMMON_EXTRA_LIBS} PUBLIC llama Threads::Threads)

#

diff --git a/common/arg.cpp b/common/arg.cpp

index f2aec895ba..1302065498 100644

--- a/common/arg.cpp

+++ b/common/arg.cpp

@@ -96,6 +96,11 @@ common_arg & common_arg::set_sparam() {

return *this;

}

+common_arg & common_arg::set_preset_only() {

+ is_preset_only = true;

+ return *this;

+}

+

bool common_arg::in_example(enum llama_example ex) {

return examples.find(ex) != examples.end();

}

@@ -420,6 +425,8 @@ static bool common_params_parse_ex(int argc, char ** argv, common_params_context

}

};

+ std::set<std::string> seen_args;

+

for (int i = 1; i < argc; i++) {

const std::string arg_prefix = "--";

@@ -430,6 +437,9 @@ static bool common_params_parse_ex(int argc, char ** argv, common_params_context

if (arg_to_options.find(arg) == arg_to_options.end()) {

throw std::invalid_argument(string_format("error: invalid argument: %s", arg.c_str()));

}

+ if (!seen_args.insert(arg).second) {

+ LOG_WRN("DEPRECATED: argument '%s' specified multiple times, use comma-separated values instead (only last value will be used)\n", arg.c_str());

+ }

auto & tmp = arg_to_options[arg];

auto opt = *tmp.first;

bool is_positive = tmp.second;
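
Reviewer note: the hunk above relies on `std::set::insert` returning a pair whose `.second` is `false` when the key was already present. A minimal standalone sketch of that check follows; `find_repeated_args` is a hypothetical helper written for illustration and is not part of this patch.

```cpp
#include <set>
#include <string>
#include <vector>

// Hypothetical helper (not in the patch): returns every argument occurrence
// that repeats an argument seen earlier, in the order the repeats appear.
std::vector<std::string> find_repeated_args(const std::vector<std::string> & args) {
    std::set<std::string> seen;
    std::vector<std::string> repeated;
    for (const auto & arg : args) {
        // insert() reports via .second whether the element was newly added
        if (!seen.insert(arg).second) {
            repeated.push_back(arg);
        }
    }
    return repeated;
}
```

Like the patch, this flags each repeated occurrence rather than only the first repeat, so an argument given three times would be reported twice.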

@@ -750,6 +760,8 @@ bool common_params_to_map(int argc, char ** argv, llama_example ex, std::map seen_args;

+

for (int i = 1; i < argc; i++) {

const std::string arg_prefix = "--";

@@ -760,8 +772,16 @@ bool common_params_to_map(int argc, char ** argv, llama_example ex, std::map(value, ',')) {

+ std::ifstream file(item);

+ if (!file) {

+ throw std::runtime_error(string_format("error: failed to open file '%s'\n", item.c_str()));

+ }

+ params.in_files.push_back(item);

}

- params.in_files.push_back(value);

}

).set_examples({LLAMA_EXAMPLE_IMATRIX}));

add_opt(common_arg(

@@ -1401,7 +1425,7 @@ common_params_context common_params_parser_init(common_params & params, llama_ex

}

).set_sparam());

add_opt(common_arg(

- {"--sampling-seq", "--sampler-seq"}, "SEQUENCE",

+ {"--sampler-seq", "--sampling-seq"}, "SEQUENCE",

string_format("simplified sequence for samplers that will be used (default: %s)", sampler_type_chars.c_str()),

[](common_params & params, const std::string & value) {

params.sampling.samplers = common_sampler_types_from_chars(value);

@@ -1969,9 +1993,11 @@ common_params_context common_params_parser_init(common_params & params, llama_ex

).set_examples(mmproj_examples).set_env("LLAMA_ARG_MMPROJ_OFFLOAD"));

add_opt(common_arg(

{"--image", "--audio"}, "FILE",

- "path to an image or audio file. use with multimodal models, can be repeated if you have multiple files\n",

+ "path to an image or audio file. use with multimodal models, use comma-separated values for multiple files\n",

[](common_params & params, const std::string & value) {

- params.image.emplace_back(value);

+ for (const auto & item : string_split<std::string>(value, ',')) {

+ params.image.emplace_back(item);

+ }

}

).set_examples({LLAMA_EXAMPLE_MTMD, LLAMA_EXAMPLE_CLI}));

add_opt(common_arg(

@@ -2057,26 +2083,26 @@ common_params_context common_params_parser_init(common_params & params, llama_ex

}

));

add_opt(common_arg(

- {"--override-tensor", "-ot"}, "<tensor name pattern>=<buffer type>,...",

+ {"-ot", "--override-tensor"}, "<tensor name pattern>=<buffer type>,...",

"override tensor buffer type", [](common_params & params, const std::string & value) {

parse_tensor_buffer_overrides(value, params.tensor_buft_overrides);

}

));

add_opt(common_arg(

- {"--override-tensor-draft", "-otd"}, "<tensor name pattern>=<buffer type>,...",

+ {"-otd", "--override-tensor-draft"}, "<tensor name pattern>=<buffer type>,...",

"override tensor buffer type for draft model", [](common_params & params, const std::string & value) {

parse_tensor_buffer_overrides(value, params.speculative.tensor_buft_overrides);

}

).set_examples({LLAMA_EXAMPLE_SPECULATIVE, LLAMA_EXAMPLE_SERVER, LLAMA_EXAMPLE_CLI}));

add_opt(common_arg(

- {"--cpu-moe", "-cmoe"},

+ {"-cmoe", "--cpu-moe"},

"keep all Mixture of Experts (MoE) weights in the CPU",

[](common_params & params) {

params.tensor_buft_overrides.push_back(llm_ffn_exps_cpu_override());

}

).set_env("LLAMA_ARG_CPU_MOE"));

add_opt(common_arg(

- {"--n-cpu-moe", "-ncmoe"}, "N",

+ {"-ncmoe", "--n-cpu-moe"}, "N",

"keep the Mixture of Experts (MoE) weights of the first N layers in the CPU",

[](common_params & params, int value) {

if (value < 0) {

@@ -2091,14 +2117,14 @@ common_params_context common_params_parser_init(common_params & params, llama_ex

}

).set_env("LLAMA_ARG_N_CPU_MOE"));

add_opt(common_arg(

- {"--cpu-moe-draft", "-cmoed"},

+ {"-cmoed", "--cpu-moe-draft"},

"keep all Mixture of Experts (MoE) weights in the CPU for the draft model",

[](common_params & params) {

params.speculative.tensor_buft_overrides.push_back(llm_ffn_exps_cpu_override());

}

).set_examples({LLAMA_EXAMPLE_SPECULATIVE, LLAMA_EXAMPLE_SERVER, LLAMA_EXAMPLE_CLI}).set_env("LLAMA_ARG_CPU_MOE_DRAFT"));

add_opt(common_arg(

- {"--n-cpu-moe-draft", "-ncmoed"}, "N",

+ {"-ncmoed", "--n-cpu-moe-draft"}, "N",

"keep the Mixture of Experts (MoE) weights of the first N layers in the CPU for the draft model",

[](common_params & params, int value) {

if (value < 0) {

@@ -2218,12 +2244,39 @@ common_params_context common_params_parser_init(common_params & params, llama_ex

}

));

add_opt(common_arg(

- {"--override-kv"}, "KEY=TYPE:VALUE",

- "advanced option to override model metadata by key. may be specified multiple times.\n"

- "types: int, float, bool, str. example: --override-kv tokenizer.ggml.add_bos_token=bool:false",

+ {"--override-kv"}, "KEY=TYPE:VALUE,...",

+ "advanced option to override model metadata by key. to specify multiple overrides, either use comma-separated values or repeat this argument.\n"

+ "types: int, float, bool, str. example: --override-kv tokenizer.ggml.add_bos_token=bool:false,tokenizer.ggml.add_eos_token=bool:false",

[](common_params & params, const std::string & value) {

- if (!string_parse_kv_override(value.c_str(), params.kv_overrides)) {

- throw std::runtime_error(string_format("error: Invalid type for KV override: %s\n", value.c_str()));

+ std::vector<std::string> kv_overrides;

+

+ std::string current;

+ bool escaping = false;

+

+ for (const char c : value) {

+ if (escaping) {

+ current.push_back(c);

+ escaping = false;

+ } else if (c == '\\') {

+ escaping = true;

+ } else if (c == ',') {

+ kv_overrides.push_back(current);

+ current.clear();

+ } else {

+ current.push_back(c);

+ }

+ }

+

+ if (escaping) {

+ current.push_back('\\');

+ }

+

+ kv_overrides.push_back(current);

+

+ for (const auto & kv_override : kv_overrides) {

+ if (!string_parse_kv_override(kv_override.c_str(), params.kv_overrides)) {

+ throw std::runtime_error(string_format("error: Invalid type for KV override: %s\n", kv_override.c_str()));

+ }

}

}

));
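
Reviewer note: the `--override-kv` handler above splits on commas while letting a backslash escape the next character, so a string value can contain a literal comma. A minimal standalone sketch of the same loop follows; the function name `split_escaped` is hypothetical and used only for illustration.

```cpp
#include <string>
#include <vector>

// Hypothetical standalone version of the loop in the hunk above:
// split on ',' unless the comma is preceded by a backslash.
std::vector<std::string> split_escaped(const std::string & value) {
    std::vector<std::string> parts;
    std::string current;
    bool escaping = false;

    for (const char c : value) {
        if (escaping) {
            current.push_back(c);   // keep the escaped character verbatim
            escaping = false;
        } else if (c == '\\') {
            escaping = true;        // drop the backslash, escape the next char
        } else if (c == ',') {
            parts.push_back(current);
            current.clear();
        } else {
            current.push_back(c);
        }
    }

    if (escaping) {
        current.push_back('\\');    // a trailing lone backslash is kept as-is
    }
    parts.push_back(current);       // the last (possibly empty) segment
    return parts;
}
```

Under this logic an input of `a=int:1,b=str:x\,y` yields two entries, with the second being `b=str:x,y`. Since a backslash is always consumed as an escape, a literal backslash inside a value has to be written as `\\`.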

@@ -2237,33 +2290,50 @@ common_params_context common_params_parser_init(common_params & params, llama_ex

));

add_opt(common_arg(

{"--lora"}, "FNAME",

- "path to LoRA adapter (can be repeated to use multiple adapters)",

+ "path to LoRA adapter (use comma-separated values to load multiple adapters)",

[](common_params & params, const std::string & value) {

- params.lora_adapters.push_back({ std::string(value), 1.0, "", "", nullptr });

+ for (const auto & item : string_split<std::string>(value, ',')) {

+ params.lora_adapters.push_back({ item, 1.0, "", "", nullptr });

+ }

}

// we define this arg on both COMMON and EXPORT_LORA, so when showing help message of export-lora, it will be categorized as "example-specific" arg

).set_examples({LLAMA_EXAMPLE_COMMON, LLAMA_EXAMPLE_EXPORT_LORA}));

add_opt(common_arg(

- {"--lora-scaled"}, "FNAME", "SCALE",

- "path to LoRA adapter with user defined scaling (can be repeated to use multiple adapters)",

- [](common_params & params, const std::string & fname, const std::string & scale) {

- params.lora_adapters.push_back({ fname, std::stof(scale), "", "", nullptr });

+ {"--lora-scaled"}, "FNAME:SCALE,...",

+ "path to LoRA adapter with user defined scaling (format: FNAME:SCALE,...)\n"

+ "note: use comma-separated values",

+ [](common_params & params, const std::string & value) {

+ for (const auto & item : string_split<std::string>(value, ',')) {

+ auto parts = string_split<std::string>(item, ':');

+ if (parts.size() != 2) {

+ throw std::invalid_argument("lora-scaled format: FNAME:SCALE");

+ }

+ params.lora_adapters.push_back({ parts[0], std::stof(parts[1]), "", "", nullptr });

+ }

}

// we define this arg on both COMMON and EXPORT_LORA, so when showing help message of export-lora, it will be categorized as "example-specific" arg

).set_examples({LLAMA_EXAMPLE_COMMON, LLAMA_EXAMPLE_EXPORT_LORA}));

add_opt(common_arg(

{"--control-vector"}, "FNAME",

- "add a control vector\nnote: this argument can be repeated to add multiple control vectors",

+ "add a control vector\nnote: use comma-separated values to add multiple control vectors",

[](common_params & params, const std::string & value) {

- params.control_vectors.push_back({ 1.0f, value, });

+ for (const auto & item : string_split<std::string>(value, ',')) {

+ params.control_vectors.push_back({ 1.0f, item, });

+ }

}

));

add_opt(common_arg(

- {"--control-vector-scaled"}, "FNAME", "SCALE",

+ {"--control-vector-scaled"}, "FNAME:SCALE,...",

"add a control vector with user defined scaling SCALE\n"

- "note: this argument can be repeated to add multiple scaled control vectors",

- [](common_params & params, const std::string & fname, const std::string & scale) {

- params.control_vectors.push_back({ std::stof(scale), fname });

+ "note: use comma-separated values (format: FNAME:SCALE,...)",

+ [](common_params & params, const std::string & value) {

+ for (const auto & item : string_split<std::string>(value, ',')) {

+ auto parts = string_split<std::string>(item, ':');

+ if (parts.size() != 2) {

+ throw std::invalid_argument("control-vector-scaled format: FNAME:SCALE");

+ }

+ params.control_vectors.push_back({ std::stof(parts[1]), parts[0] });

+ }

}

));

add_opt(common_arg(
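
Reviewer note: `--lora-scaled` and `--control-vector-scaled` now take a single `FNAME:SCALE,...` value and reject any item that does not split into exactly two parts on `:`. The sketch below illustrates that parsing under stated assumptions: `split_on` is a hypothetical stand-in for the project's `string_split` and may differ from it on edge cases such as trailing delimiters, and `parse_scaled_list` is an illustrative name, not part of the patch.

```cpp
#include <sstream>
#include <stdexcept>
#include <string>
#include <utility>
#include <vector>

// Hypothetical stand-in for the project's string_split helper.
static std::vector<std::string> split_on(const std::string & s, char delim) {
    std::vector<std::string> out;
    std::istringstream ss(s);
    std::string tok;
    while (std::getline(ss, tok, delim)) {
        out.push_back(tok);
    }
    return out;
}

// Parse "FNAME:SCALE,FNAME:SCALE,..." into (file name, scale) pairs.
std::vector<std::pair<std::string, float>> parse_scaled_list(const std::string & value) {
    std::vector<std::pair<std::string, float>> result;
    for (const auto & item : split_on(value, ',')) {
        const auto parts = split_on(item, ':');
        if (parts.size() != 2) {
            throw std::invalid_argument("expected FNAME:SCALE, got '" + item + "'");
        }
        // std::stof throws std::invalid_argument if SCALE is not numeric
        result.emplace_back(parts[0], std::stof(parts[1]));
    }
    return result;
}
```

One consequence worth checking in review: if the split really happens on every `:`, a path that itself contains a colon (for example a Windows drive prefix such as `C:\x.gguf:1`) produces three parts and is rejected by the size check.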

@@ -2353,13 +2423,15 @@ common_params_context common_params_parser_init(common_params & params, llama_ex

).set_env("HF_TOKEN"));

add_opt(common_arg(

{"--context-file"}, "FNAME",

- "file to load context from (repeat to specify multiple files)",

+ "file to load context from (use comma-separated values to specify multiple files)",

[](common_params & params, const std::string & value) {

- std::ifstream file(value, std::ios::binary);

- if (!file) {

- throw std::runtime_error(string_format("error: failed to open file '%s'\n", value.c_str()));

+ for (const auto & item : string_split(value, ',')) {

+ std::ifstream file(item, std::ios::binary);

+ if (!file) {

+ throw std::runtime_error(string_format("error: failed to open file '%s'\n", item.c_str()));

+ }

+ params.context_files.push_back(item);

}

- params.context_files.push_back(value);

}

).set_examples({LLAMA_EXAMPLE_RETRIEVAL}));

add_opt(common_arg(

@@ -2550,6 +2622,20 @@ common_params_context common_params_parser_init(common_params & params, llama_ex

params.api_prefix = value;

}

).set_examples({LLAMA_EXAMPLE_SERVER}).set_env("LLAMA_ARG_API_PREFIX"));

+ add_opt(common_arg(

+ {"--webui-config"}, "JSON",

+ "JSON that provides default WebUI settings (overrides WebUI defaults)",

+ [](common_params & params, const std::string & value) {

+ params.webui_config_json = value;

+ }

+ ).set_examples({LLAMA_EXAMPLE_SERVER}).set_env("LLAMA_ARG_WEBUI_CONFIG"));

+ add_opt(common_arg(

+ {"--webui-config-file"}, "PATH",

+ "JSON file that provides default WebUI settings (overrides WebUI defaults)",

+ [](common_params & params, const std::string & value) {

+ params.webui_config_json = read_file(value);

+ }

+ ).set_examples({LLAMA_EXAMPLE_SERVER}).set_env("LLAMA_ARG_WEBUI_CONFIG_FILE"));

add_opt(common_arg(

{"--webui"},

{"--no-webui"},

@@ -2566,7 +2652,7 @@ common_params_context common_params_parser_init(common_params & params, llama_ex

}

).set_examples({LLAMA_EXAMPLE_SERVER}).set_env("LLAMA_ARG_EMBEDDINGS"));

add_opt(common_arg(

- {"--reranking", "--rerank"},

+ {"--rerank", "--reranking"},

string_format("enable reranking endpoint on server (default: %s)", "disabled"),

[](common_params & params) {

params.embedding = true;

@@ -2801,6 +2887,16 @@ common_params_context common_params_parser_init(common_params & params, llama_ex

params.lora_init_without_apply = true;

}

).set_examples({LLAMA_EXAMPLE_SERVER}));

+ add_opt(common_arg(

+ {"--sleep-idle-seconds"}, "SECONDS",

+ string_format("number of seconds of idleness after which the server will sleep (default: %d; -1 = disabled)", params.sleep_idle_seconds),

+ [](common_params & params, int value) {

+ if (value == 0 || value < -1) {

+ throw std::invalid_argument("invalid value: cannot be 0 or less than -1");

+ }

+ params.sleep_idle_seconds = value;

+ }

+ ).set_examples({LLAMA_EXAMPLE_SERVER}));

add_opt(common_arg(

{"--simple-io"},

"use basic IO for better compatibility in subprocesses and limited consoles",

@@ -3037,7 +3133,7 @@ common_params_context common_params_parser_init(common_params & params, llama_ex

}

).set_examples({LLAMA_EXAMPLE_SPECULATIVE}));

add_opt(common_arg(

- {"--draft-max", "--draft", "--draft-n"}, "N",

+ {"--draft", "--draft-n", "--draft-max"}, "N",

string_format("number of tokens to draft for speculative decoding (default: %d)", params.speculative.n_max),

[](common_params & params, int value) {

params.speculative.n_max = value;

@@ -3413,3 +3509,24 @@ common_params_context common_params_parser_init(common_params & params, llama_ex

return ctx_arg;

}

+

+void common_params_add_preset_options(std::vector<common_arg> & args) {

+ // arguments below won't be treated as CLI args, only preset options

+ args.push_back(common_arg(

+ {"load-on-startup"}, "NAME",

+ "in server router mode, autoload this model on startup",

+ [](common_params &, const std::string &) { /* unused */ }

+ ).set_env(COMMON_ARG_PRESET_LOAD_ON_STARTUP).set_preset_only());

+

+ // args.push_back(common_arg(

+ // {"pin"},

+ // "in server router mode, do not unload this model if models_max is exceeded",

+ // [](common_params &) { /* unused */ }

+ // ).set_preset_only());

+

+ // args.push_back(common_arg(

+ // {"unload-idle-seconds"}, "SECONDS",

+ // "in server router mode, unload models idle for more than this many seconds",

+ // [](common_params &, int) { /* unused */ }

+ // ).set_preset_only());

+}
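
Reviewer sketch (not part of the patch): the new `--control-vector-scaled` handler above splits its value on `,` and then each item on `:`. The standalone example below mimics that flow so the accepted and rejected inputs are easy to see. `control_vector_entry`, `split`, and `parse_scaled` are hypothetical names introduced for illustration; `split` is an assumed stand-in for `string_split`, whose exact behavior on empty fields may differ.

```cpp
#include <sstream>
#include <stdexcept>
#include <string>
#include <vector>

// Hypothetical stand-in for the entries pushed into params.control_vectors.
struct control_vector_entry {
    float       strength;
    std::string fname;
};

// Hypothetical helper (assumption: the real string_split may treat empty
// fields differently). Splits on every occurrence of a one-character delimiter.
std::vector<std::string> split(const std::string & s, char delim) {
    std::vector<std::string> out;
    std::stringstream ss(s);
    std::string item;
    while (std::getline(ss, item, delim)) {
        out.push_back(item);
    }
    return out;
}

// Parse "FNAME:SCALE,FNAME:SCALE,..." following the shape of the new handler:
// each comma-separated item must split into exactly two parts around ':'.
std::vector<control_vector_entry> parse_scaled(const std::string & value) {
    std::vector<control_vector_entry> out;
    for (const auto & item : split(value, ',')) {
        const auto parts = split(item, ':');
        if (parts.size() != 2) {
            throw std::invalid_argument("control-vector-scaled format: FNAME:SCALE");
        }
        out.push_back({ std::stof(parts[1]), parts[0] });
    }
    return out;
}
```

In this sketch a file name that itself contains `:` or `,` (for example a Windows path with a drive letter) yields more than two parts or extra items and is rejected or mis-split, which is worth keeping in mind when reviewing the format change.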

diff --git a/common/arg.h b/common/arg.h

index 1321595c1a..f5111c658f 100644

--- a/common/arg.h

+++ b/common/arg.h

@@ -8,6 +8,9 @@

#include

#include

+// pseudo-env variable to identify preset-only arguments

+#define COMMON_ARG_PRESET_LOAD_ON_STARTUP "__PRESET_LOAD_ON_STARTUP"

+

//

// CLI argument parsing

//

@@ -22,6 +25,7 @@ struct common_arg {

const char * env = nullptr;

std::string help;

bool is_sparam = false; // is current arg a sampling param?

+ bool is_preset_only = false; // is current arg preset-only (not treated as CLI arg)

void (*handler_void) (common_params & params) = nullptr;

void (*handler_string) (common_params & params, const std::string &) = nullptr;

void (*handler_str_str)(common_params & params, const std::string &, const std::string &) = nullptr;

@@ -70,6 +74,7 @@ struct common_arg {

common_arg & set_excludes(std::initializer_list excludes);

common_arg & set_env(const char * env);

common_arg & set_sparam();

+ common_arg & set_preset_only();

bool in_example(enum llama_example ex);

bool is_exclude(enum llama_example ex);

bool get_value_from_env(std::string & output) const;

@@ -114,9 +119,13 @@ struct common_params_context {

bool common_params_parse(int argc, char ** argv, common_params & params, llama_example ex, void(*print_usage)(int, char **) = nullptr);

// parse input arguments from CLI into a map

-// TODO: support repeated args in the future

bool common_params_to_map(int argc, char ** argv, llama_example ex, std::map<common_arg, std::string> & out_map);

+// populate preset-only arguments

+// these arguments are not treated as command line arguments

+// see: https://github.com/ggml-org/llama.cpp/issues/18163

+void common_params_add_preset_options(std::vector<common_arg> & args);

+

// initialize argument parser context - used by test-arg-parser and preset

common_params_context common_params_parser_init(common_params & params, llama_example ex, void(*print_usage)(int, char **) = nullptr);

diff --git a/common/common.cpp b/common/common.cpp

index 5a8cf52485..d4e8c7405e 100644

--- a/common/common.cpp

+++ b/common/common.cpp

@@ -1092,7 +1092,7 @@ common_init_result::common_init_result(common_params & params) :

auto cparams = common_context_params_to_llama(params);

if (params.fit_params) {

- LOG_INF("%s: fitting params to device memory, to report bugs during this step use -fit off (or --verbose if you can't)\n", __func__);

+ LOG_INF("%s: fitting params to device memory, for bugs during this step try to reproduce them with -fit off, or provide --verbose logs if the bug only occurs with -fit on\n", __func__);

llama_params_fit(params.model.path.c_str(), &mparams, &cparams,

params.tensor_split, params.tensor_buft_overrides.data(), params.fit_params_target, params.fit_params_min_ctx,

params.verbosity >= 4 ? GGML_LOG_LEVEL_DEBUG : GGML_LOG_LEVEL_ERROR);

diff --git a/common/common.h b/common/common.h

index d70744840f..334372073a 100644

--- a/common/common.h

+++ b/common/common.h

@@ -475,7 +475,8 @@ struct common_params {

bool enable_chat_template = true;

common_reasoning_format reasoning_format = COMMON_REASONING_FORMAT_DEEPSEEK;

int reasoning_budget = -1;

- bool prefill_assistant = true; // if true, any trailing assistant message will be prefilled into the response

+ bool prefill_assistant = true; // if true, any trailing assistant message will be prefilled into the response

+ int sleep_idle_seconds = -1; // if >0, server will sleep after this many seconds of idle time

std::vector api_keys;

@@ -484,8 +485,11 @@ struct common_params {

std::map default_template_kwargs;

+ // webui configs

+ bool webui = true;

+ std::string webui_config_json;

+

// "advanced" endpoints are disabled by default for better security

- bool webui = true;

bool endpoint_slots = true;

bool endpoint_props = false; // only control POST requests, not GET

bool endpoint_metrics = false;

diff --git a/common/preset.cpp b/common/preset.cpp

index 60746aad58..e2fc18c5da 100644

--- a/common/preset.cpp

+++ b/common/preset.cpp

@@ -2,6 +2,7 @@

#include "preset.h"

#include "peg-parser.h"

#include "log.h"

+#include "download.h"

#include

#include

@@ -15,11 +16,22 @@ static std::string rm_leading_dashes(const std::string & str) {

return str.substr(pos);

}

-std::vector<std::string> common_preset::to_args() const {

+std::vector<std::string> common_preset::to_args(const std::string & bin_path) const {

std::vector<std::string> args;

+ if (!bin_path.empty()) {

+ args.push_back(bin_path);

+ }

+

for (const auto & [opt, value] : options) {

- args.push_back(opt.args.back()); // use the last arg as the main arg

+ if (opt.is_preset_only) {

+ continue; // skip preset-only options (they are not CLI args)

+ }

+

+ // use the last arg as the main arg (i.e. --long-form)

+ args.push_back(opt.args.back());

+

+ // handle value(s)

if (opt.value_hint == nullptr && opt.value_hint_2 == nullptr) {

// flag option, no value

if (common_arg_utils::is_falsey(value)) {

@@ -63,6 +75,52 @@ std::string common_preset::to_ini() const {

return ss.str();

}

+void common_preset::set_option(const common_preset_context & ctx, const std::string & env, const std::string & value) {

+ // if the option already exists, update it

+ for (auto & [opt, val] : options) {

+ if (opt.env && env == opt.env) {

+ val = value;

+ return;

+ }

+ }

+ // if option does not exist, we need to add it

+ if (ctx.key_to_opt.find(env) == ctx.key_to_opt.end()) {

+ throw std::runtime_error(string_format(

+ "%s: option with env '%s' not found in ctx_params",

+ __func__, env.c_str()

+ ));

+ }

+ options[ctx.key_to_opt.at(env)] = value;

+}

+

+void common_preset::unset_option(const std::string & env) {

+ for (auto it = options.begin(); it != options.end(); ) {

+ const common_arg & opt = it->first;

+ if (opt.env && env == opt.env) {

+ it = options.erase(it);

+ return;

+ } else {

+ ++it;

+ }

+ }

+}

+

+bool common_preset::get_option(const std::string & env, std::string & value) const {

+ for (const auto & [opt, val] : options) {

+ if (opt.env && env == opt.env) {

+ value = val;

+ return true;

+ }

+ }

+ return false;

+}

+

+void common_preset::merge(const common_preset & other) {

+ for (const auto & [opt, val] : other.options) {

+ options[opt] = val; // overwrite existing options

+ }

+}

+

static std::map<std::string, std::map<std::string, std::string>> parse_ini_from_file(const std::string & path) {

std::map<std::string, std::map<std::string, std::string>> parsed;

@@ -172,9 +230,14 @@ static std::string parse_bool_arg(const common_arg & arg, const std::string & ke

return value;

}

-common_presets common_presets_load(const std::string & path, common_params_context & ctx_params) {

+common_preset_context::common_preset_context(llama_example ex)

+ : ctx_params(common_params_parser_init(default_params, ex)) {

+ common_params_add_preset_options(ctx_params.options);

+ key_to_opt = get_map_key_opt(ctx_params);

+}

+

+common_presets common_preset_context::load_from_ini(const std::string & path, common_preset & global) const {

common_presets out;

- auto key_to_opt = get_map_key_opt(ctx_params);

auto ini_data = parse_ini_from_file(path);

for (auto section : ini_data) {

@@ -188,7 +251,7 @@ common_presets common_presets_load(const std::string & path, common_params_conte

for (const auto & [key, value] : section.second) {

LOG_DBG("option: %s = %s\n", key.c_str(), value.c_str());

if (key_to_opt.find(key) != key_to_opt.end()) {

- auto & opt = key_to_opt[key];

+ const auto & opt = key_to_opt.at(key);

if (is_bool_arg(opt)) {

preset.options[opt] = parse_bool_arg(opt, key, value);

} else {

@@ -199,8 +262,137 @@ common_presets common_presets_load(const std::string & path, common_params_conte

// TODO: maybe warn about unknown key?

}

}

+

+ if (preset.name == "*") {

+ // handle global preset

+ global = preset;

+ } else {

+ out[preset.name] = preset;

+ }

+ }

+

+ return out;

+}

+

+common_presets common_preset_context::load_from_cache() const {

+ common_presets out;

+

+ auto cached_models = common_list_cached_models();

+ for (const auto & model : cached_models) {

+ common_preset preset;

+ preset.name = model.to_string();

+ preset.set_option(*this, "LLAMA_ARG_HF_REPO", model.to_string());

out[preset.name] = preset;

}

return out;

}

+

+struct local_model {

+ std::string name;

+ std::string path;

+ std::string path_mmproj;

+};

+

+common_presets common_preset_context::load_from_models_dir(const std::string & models_dir) const {

+ if (!std::filesystem::exists(models_dir) || !std::filesystem::is_directory(models_dir)) {

+ throw std::runtime_error(string_format("error: '%s' does not exist or is not a directory\n", models_dir.c_str()));

+ }

+

+ std::vector<local_model> models;

+ auto scan_subdir = [&models](const std::string & subdir_path, const std::string & name) {

+ auto files = fs_list(subdir_path, false);

+ common_file_info model_file;

+ common_file_info first_shard_file;

+ common_file_info mmproj_file;

+ for (const auto & file : files) {

+ if (string_ends_with(file.name, ".gguf")) {

+ if (file.name.find("mmproj") != std::string::npos) {

+ mmproj_file = file;

+ } else if (file.name.find("-00001-of-") != std::string::npos) {

+ first_shard_file = file;

+ } else {

+ model_file = file;

+ }

+ }

+ }

+ // single file model

+ local_model model{

+ /* name */ name,

+ /* path */ first_shard_file.path.empty() ? model_file.path : first_shard_file.path,

+ /* path_mmproj */ mmproj_file.path // can be empty

+ };

+ if (!model.path.empty()) {

+ models.push_back(model);

+ }

+ };

+

+ auto files = fs_list(models_dir, true);

+ for (const auto & file : files) {

+ if (file.is_dir) {

+ scan_subdir(file.path, file.name);

+ } else if (string_ends_with(file.name, ".gguf")) {

+ // single file model

+ std::string name = file.name;

+ string_replace_all(name, ".gguf", "");

+ local_model model{

+ /* name */ name,

+ /* path */ file.path,

+ /* path_mmproj */ ""

+ };

+ models.push_back(model);

+ }

+ }

+

+ // convert local models to presets

+ common_presets out;

+ for (const auto & model : models) {

+ common_preset preset;

+ preset.name = model.name;

+ preset.set_option(*this, "LLAMA_ARG_MODEL", model.path);

+ if (!model.path_mmproj.empty()) {

+ preset.set_option(*this, "LLAMA_ARG_MMPROJ", model.path_mmproj);

+ }

+ out[preset.name] = preset;

+ }

+

+ return out;

+}

+

+common_preset common_preset_context::load_from_args(int argc, char ** argv) const {

+ common_preset preset;

+ preset.name = COMMON_PRESET_DEFAULT_NAME;

+

+ bool ok = common_params_to_map(argc, argv, ctx_params.ex, preset.options);

+ if (!ok) {

+ throw std::runtime_error("failed to parse CLI arguments into preset");

+ }

+

+ return preset;

+}

+

+common_presets common_preset_context::cascade(const common_presets & base, const common_presets & added) const {

+ common_presets out = base; // copy

+ for (const auto & [name, preset_added] : added) {

+ if (out.find(name) != out.end()) {

+ // if exists, merge

+ common_preset & target = out[name];

+ target.merge(preset_added);

+ } else {

+ // otherwise, add directly

+ out[name] = preset_added;

+ }

+ }

+ return out;

+}

+

+common_presets common_preset_context::cascade(const common_preset & base, const common_presets & presets) const {

+ common_presets out;

+ for (const auto & [name, preset] : presets) {

+ common_preset tmp = base; // copy

+ tmp.name = name;

+ tmp.merge(preset);

+ out[name] = std::move(tmp);

+ }

+ return out;

+}
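
Reviewer sketch (not part of the patch): `load_from_models_dir` above picks one model file and one optional mmproj file per subdirectory. The standalone example below reproduces only that selection rule on plain file names, to make the priority order explicit. `picked_files`, `ends_with`, and `pick_model_files` are hypothetical names; the real code works on `common_file_info` entries returned by `fs_list`.

```cpp
#include <string>
#include <vector>

// Hypothetical result type: which file is loaded as the model and which as mmproj.
struct picked_files {
    std::string model;   // empty if the directory holds no usable .gguf
    std::string mmproj;  // may be empty
};

// Hypothetical helper mirroring what string_ends_with is used for in the patch.
bool ends_with(const std::string & s, const std::string & suffix) {
    return s.size() >= suffix.size() &&
           s.compare(s.size() - suffix.size(), suffix.size(), suffix) == 0;
}

// Selection rule as it appears in scan_subdir:
//   - only *.gguf files are considered
//   - a name containing "mmproj" becomes the mmproj file
//   - a name containing "-00001-of-" is remembered as the first shard
//   - any other .gguf is remembered as a plain model file
//   - the first shard, if present, wins over the plain model file
picked_files pick_model_files(const std::vector<std::string> & names) {
    std::string model;
    std::string first_shard;
    std::string mmproj;
    for (const auto & name : names) {
        if (!ends_with(name, ".gguf")) {
            continue;
        }
        if (name.find("mmproj") != std::string::npos) {
            mmproj = name;
        } else if (name.find("-00001-of-") != std::string::npos) {
            first_shard = name;
        } else {
            model = name;
        }
    }
    return { first_shard.empty() ? model : first_shard, mmproj };
}
```

Note that later shards (for example `-00002-of-00002`) land in the plain-model slot, so the result is only correct because the first shard takes precedence when it exists.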

diff --git a/common/preset.h b/common/preset.h

index dceb849eb8..3a84d1be29 100644

--- a/common/preset.h

+++ b/common/preset.h

@@ -13,20 +13,62 @@

constexpr const char * COMMON_PRESET_DEFAULT_NAME = "default";

+struct common_preset_context;

+

struct common_preset {

std::string name;

- // TODO: support repeated args in the future

+

+ // options are stored as common_arg to string mapping, representing CLI arg and its value

std::map<common_arg, std::string> options;

// convert preset to CLI argument list

- std::vector<std::string> to_args() const;

+ std::vector<std::string> to_args(const std::string & bin_path = "") const;

// convert preset to INI format string

std::string to_ini() const;

// TODO: maybe implement to_env() if needed

+

+ // modify preset options where argument is identified by its env variable

+ void set_option(const common_preset_context & ctx, const std::string & env, const std::string & value);

+

+ // unset option by its env variable

+ void unset_option(const std::string & env);

+

+ // get option value by its env variable, return false if not found

+ bool get_option(const std::string & env, std::string & value) const;

+

+ // merge another preset into this one, overwriting existing options

+ void merge(const common_preset & other);

};

// interface for multiple presets in one file

using common_presets = std::map<std::string, common_preset>;

-common_presets common_presets_load(const std::string & path, common_params_context & ctx_params);

+

+// context for loading and editing presets

+struct common_preset_context {

+ common_params default_params; // unused for now

+ common_params_context ctx_params;

+ std::map<std::string, common_arg> key_to_opt;

+ common_preset_context(llama_example ex);

+

+ // load presets from INI file

+ common_presets load_from_ini(const std::string & path, common_preset & global) const;

+

+ // generate presets from cached models

+ common_presets load_from_cache() const;

+

+ // generate presets from local models directory

+ // for the directory structure, see "Using multiple models" in server/README.md

+ common_presets load_from_models_dir(const std::string & models_dir) const;

+

+ // generate one preset from CLI arguments

+ common_preset load_from_args(int argc, char ** argv) const;

+

+ // cascade multiple presets if exist on both: base < added

+ // if preset does not exist in base, it will be added without modification

+ common_presets cascade(const common_presets & base, const common_presets & added) const;

+

+ // apply presets over a base preset (same idea as CSS cascading)

+ common_presets cascade(const common_preset & base, const common_presets & presets) const;

+};
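
Reviewer sketch (not part of the patch): the two `cascade` overloads declared above both reduce to "later values overwrite earlier ones". The standalone example below models a preset as a plain string-to-string map to show the resulting precedence. `options_t`, `presets_t`, and `merge_into` are hypothetical simplifications; the real `common_preset` keys its options by `common_arg` and also carries a name.

```cpp
#include <map>
#include <string>
#include <utility>

// Simplified stand-ins (assumption): option key -> value, preset name -> options.
using options_t = std::map<std::string, std::string>;
using presets_t = std::map<std::string, options_t>;

// Same idea as common_preset::merge: values from `other` overwrite `target`.
void merge_into(options_t & target, const options_t & other) {
    for (const auto & kv : other) {
        target[kv.first] = kv.second;
    }
}

// base < added: presets present in both are merged, new ones are added as-is.
presets_t cascade(const presets_t & base, const presets_t & added) {
    presets_t out = base;
    for (const auto & kv : added) {
        // operator[] creates an empty preset when the name is new,
        // so merging into it is equivalent to adding it directly
        merge_into(out[kv.first], kv.second);
    }
    return out;
}

// Apply every preset on top of one shared base (the CSS-like case).
presets_t cascade(const options_t & base, const presets_t & presets) {
    presets_t out;
    for (const auto & kv : presets) {
        options_t tmp = base;
        merge_into(tmp, kv.second);
        out[kv.first] = std::move(tmp);
    }
    return out;
}
```

With a global section that sets two options and a per-model section that overrides one of them, the per-model value wins and the untouched global value is inherited.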

diff --git a/common/sampling.cpp b/common/sampling.cpp

index 6935d84e22..c66f935c65 100644

--- a/common/sampling.cpp

+++ b/common/sampling.cpp

@@ -104,10 +104,9 @@ struct ring_buffer {

struct common_sampler {

common_params_sampling params;

+ struct llama_sampler * grmr;

struct llama_sampler * chain;

- bool grammar;

-

ring_buffer prev;

std::vector cur;

@@ -167,15 +166,14 @@ struct common_sampler * common_sampler_init(const struct llama_model * model, co

lparams.no_perf = params.no_perf;

+ llama_sampler * grmr = nullptr;

llama_sampler * chain = llama_sampler_chain_init(lparams);

- bool grammar = false;

std::vector samplers;

if (params.grammar.compare(0, 11, "%llguidance") == 0) {

#ifdef LLAMA_USE_LLGUIDANCE

- samplers.push_back(llama_sampler_init_llg(vocab, "lark", params.grammar.c_str()));

- grammar = true;

+ grmr = llama_sampler_init_llg(vocab, "lark", params.grammar.c_str());

#else

GGML_ABORT("llguidance (cmake -DLLAMA_LLGUIDANCE=ON) is not enabled");

#endif // LLAMA_USE_LLGUIDANCE

@@ -224,15 +222,12 @@ struct common_sampler * common_sampler_init(const struct llama_model * model, co

if (!params.grammar.empty()) {

if (params.grammar_lazy) {

- samplers.push_back(

- llama_sampler_init_grammar_lazy_patterns(vocab, params.grammar.c_str(), "root",

- trigger_patterns_c.data(), trigger_patterns_c.size(),

- trigger_tokens.data(), trigger_tokens.size()));

+ grmr = llama_sampler_init_grammar_lazy_patterns(vocab, params.grammar.c_str(), "root",

+ trigger_patterns_c.data(), trigger_patterns_c.size(),

+ trigger_tokens.data(), trigger_tokens.size());

} else {

- samplers.push_back(llama_sampler_init_grammar(vocab, params.grammar.c_str(), "root"));

+ grmr = llama_sampler_init_grammar(vocab, params.grammar.c_str(), "root");

}

-

- grammar = true;

}

}

@@ -303,8 +298,8 @@ struct common_sampler * common_sampler_init(const struct llama_model * model, co

auto * result = new common_sampler {

/* .params = */ params,

+ /* .grmr = */ grmr,

/* .chain = */ chain,

- /* .grammar = */ grammar,

/* .prev = */ ring_buffer(std::max(32, params.n_prev)),

/* .cur = */ {},

/* .cur_p = */ {},

@@ -315,6 +310,7 @@ struct common_sampler * common_sampler_init(const struct llama_model * model, co

void common_sampler_free(struct common_sampler * gsmpl) {

if (gsmpl) {

+ llama_sampler_free(gsmpl->grmr);

llama_sampler_free(gsmpl->chain);

delete gsmpl;

@@ -324,25 +320,12 @@ void common_sampler_free(struct common_sampler * gsmpl) {

void common_sampler_accept(struct common_sampler * gsmpl, llama_token token, bool accept_grammar) {

const auto tm = gsmpl->tm();

- if (gsmpl->grammar) {

- const int n_smpl = llama_sampler_chain_n(gsmpl->chain);

-

- for (int i = 0; i < n_smpl; i++) {

- auto * smpl = llama_sampler_chain_get(gsmpl->chain, i);

-

- // the grammar sampler is always the first one

- if (i == 0) {

- if (accept_grammar) {

- llama_sampler_accept(smpl, token);

- }

- } else {

- llama_sampler_accept(smpl, token);

- }

- }

- } else {

- llama_sampler_accept(gsmpl->chain, token);

+ if (gsmpl->grmr && accept_grammar) {

+ llama_sampler_accept(gsmpl->grmr, token);

}

+ llama_sampler_accept(gsmpl->chain, token);

+

gsmpl->prev.push_back(token);

}

@@ -353,8 +336,8 @@ void common_sampler_reset(struct common_sampler * gsmpl) {

struct common_sampler * common_sampler_clone(common_sampler * gsmpl) {

return new common_sampler {

/* .params = */ gsmpl->params,

+ /* .grmr = */ llama_sampler_clone(gsmpl->grmr),

/* .chain = */ llama_sampler_clone(gsmpl->chain),

- /* .grammar = */ gsmpl->grammar,

/* .prev = */ gsmpl->prev,

/* .cur = */ gsmpl->cur,

/* .cur_p = */ gsmpl->cur_p,

@@ -410,7 +393,7 @@ struct llama_sampler * common_sampler_get(const struct common_sampler * gsmpl) {

return gsmpl->chain;

}

-llama_token common_sampler_sample(struct common_sampler * gsmpl, struct llama_context * ctx, int idx) {

+llama_token common_sampler_sample(struct common_sampler * gsmpl, struct llama_context * ctx, int idx, bool grammar_first) {

llama_synchronize(ctx);

// start measuring sampling time after the llama_context synchronization in order to not measure any ongoing async operations

@@ -418,11 +401,42 @@ llama_token common_sampler_sample(struct common_sampler * gsmpl, struct llama_co

llama_token id = LLAMA_TOKEN_NULL;

+ auto & grmr = gsmpl->grmr;

auto & chain = gsmpl->chain;

auto & cur_p = gsmpl->cur_p; // initialized by set_logits

gsmpl->set_logits(ctx, idx);

+ if (grammar_first) {

+ llama_sampler_apply(grmr, &cur_p);

+ }

+

+ llama_sampler_apply(chain, &cur_p);

+

+ id = cur_p.data[cur_p.selected].id;

+

+ if (grammar_first) {

+ return id;

+ }

+

+ // check if the sampled token fits the grammar (grammar-based rejection sampling)

+ {

+ llama_token_data single_token_data = { id, 1.0f, 0.0f };

+ llama_token_data_array single_token_data_array = { &single_token_data, 1, -1, false };

+

+ llama_sampler_apply(grmr, &single_token_data_array);

+

+ const bool is_valid = single_token_data_array.data[0].logit != -INFINITY;

+ if (is_valid) {

+ return id;

+ }

+ }

+

+ // resampling:

+ // if the token is not valid, sample again, but first apply the grammar sampler and then the sampling chain

+ gsmpl->set_logits(ctx, idx);

+

+ llama_sampler_apply(grmr, &cur_p);

llama_sampler_apply(chain, &cur_p);

GGML_ASSERT(cur_p.selected != -1 && "no selected token during sampling - check your sampling configuration");

@@ -432,7 +446,7 @@ llama_token common_sampler_sample(struct common_sampler * gsmpl, struct llama_co

return id;

}

-std::vector common_sampler_sample_and_accept_n(struct common_sampler * gsmpl, struct llama_context * ctx, const std::vector & idxs, const llama_tokens & draft) {

+std::vector common_sampler_sample_and_accept_n(struct common_sampler * gsmpl, struct llama_context * ctx, const std::vector & idxs, const llama_tokens & draft, bool grammar_first) {

GGML_ASSERT(idxs.size() == draft.size() + 1 && "idxs.size() must be draft.size() + 1");

std::vector result;

@@ -440,7 +454,7 @@ std::vector common_sampler_sample_and_accept_n(struct common_sample

size_t i = 0;

for (; i < draft.size(); i++) {

- const llama_token id = common_sampler_sample(gsmpl, ctx, idxs[i]);

+ const llama_token id = common_sampler_sample(gsmpl, ctx, idxs[i], grammar_first);

common_sampler_accept(gsmpl, id, true);

@@ -452,7 +466,7 @@ std::vector common_sampler_sample_and_accept_n(struct common_sample

}

if (i == draft.size()) {

- const llama_token id = common_sampler_sample(gsmpl, ctx, idxs[i]);

+ const llama_token id = common_sampler_sample(gsmpl, ctx, idxs[i], grammar_first);

common_sampler_accept(gsmpl, id, true);

@@ -462,13 +476,13 @@ std::vector common_sampler_sample_and_accept_n(struct common_sample

return result;

}

-std::vector common_sampler_sample_and_accept_n(struct common_sampler * gsmpl, struct llama_context * ctx, const llama_tokens & draft) {

+std::vector common_sampler_sample_and_accept_n(struct common_sampler * gsmpl, struct llama_context * ctx, const llama_tokens & draft, bool grammar_first) {

std::vector idxs(draft.size() + 1);

for (size_t i = 0; i < idxs.size(); ++i) {

idxs[i] = i;

}

- return common_sampler_sample_and_accept_n(gsmpl, ctx, idxs, draft);

+ return common_sampler_sample_and_accept_n(gsmpl, ctx, idxs, draft, grammar_first);

}

uint32_t common_sampler_get_seed(const struct common_sampler * gsmpl) {
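
Reviewer sketch (not part of the patch): the control flow that `common_sampler_sample` gains above is easier to follow on a toy model. The example below uses a greedy "chain" and a predicate "grammar" to show the three paths: grammar first, fast accept, and resample. All names here (`candidate`, `grammar_fn`, `apply_grammar`, `pick_greedy`, `sample`) are hypothetical; none of them are llama.cpp APIs, and the real chain is generally not greedy.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <functional>
#include <vector>

struct candidate {
    int   id;
    float logit;
};

// Toy grammar (assumption): a predicate saying whether a token id is allowed.
using grammar_fn = std::function<bool(int)>;

// Mask rejected tokens by setting their logit to -INFINITY.
void apply_grammar(const grammar_fn & allowed, std::vector<candidate> & cands) {
    for (auto & c : cands) {
        if (!allowed(c.id)) {
            c.logit = -INFINITY;
        }
    }
}

// Toy sampling chain: return the index of the highest logit.
std::size_t pick_greedy(const std::vector<candidate> & cands) {
    assert(!cands.empty());
    std::size_t best = 0;
    for (std::size_t i = 1; i < cands.size(); ++i) {
        if (cands[i].logit > cands[best].logit) {
            best = i;
        }
    }
    return best;
}

int sample(const std::vector<candidate> & logits, const grammar_fn & allowed, bool grammar_first) {
    std::vector<candidate> cur = logits;
    if (grammar_first) {
        apply_grammar(allowed, cur); // constrain all candidates up front
    }
    const int id = cur[pick_greedy(cur)].id;
    if (grammar_first) {
        return id;
    }

    // fast path: test only the sampled token against the grammar
    std::vector<candidate> single = { { id, 1.0f } };
    apply_grammar(allowed, single);
    if (single[0].logit != -INFINITY) {
        return id;
    }

    // slow path: start over from the raw logits, grammar first, then the chain
    cur = logits;
    apply_grammar(allowed, cur);
    return cur[pick_greedy(cur)].id;
}
```

The fast path only guarantees that the returned token is valid; the remaining candidates are left unconstrained, which matches the header comment's point that `grammar_first` is useful when all resulting candidates must fit the grammar.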

diff --git a/common/sampling.h b/common/sampling.h

index ace5d3d020..c7101032f2 100644

--- a/common/sampling.h

+++ b/common/sampling.h

@@ -57,7 +57,10 @@ struct llama_sampler * common_sampler_get(const struct common_sampler * gsmpl);

// - check if the token fits the grammar (if any)

// - if not: resample by first applying the grammar constraints and then sampling again (slower path)

//

-llama_token common_sampler_sample(struct common_sampler * gsmpl, struct llama_context * ctx, int idx);

+// if grammar_first is true, the grammar is applied before the samplers (slower)

+// useful in cases where all the resulting candidates (not just the sampled one) must fit the grammar

+//

+llama_token common_sampler_sample(struct common_sampler * gsmpl, struct llama_context * ctx, int idx, bool grammar_first = false);

// generalized version of common_sampler_sample

//

@@ -75,10 +78,10 @@ llama_token common_sampler_sample(struct common_sampler * gsmpl, struct llama_co

//

// returns at least 1 token, up to idxs.size()

//

-std::vector common_sampler_sample_and_accept_n(struct common_sampler * gsmpl, struct llama_context * ctx, const std::vector & idxs, const llama_tokens & draft);

+std::vector common_sampler_sample_and_accept_n(struct common_sampler * gsmpl, struct llama_context * ctx, const std::vector & idxs, const llama_tokens & draft, bool grammar_first = false);

// assume idxs == [ 0, 1, 2, ..., draft.size() ]

-std::vector common_sampler_sample_and_accept_n(struct common_sampler * gsmpl, struct llama_context * ctx, const llama_tokens & draft);

+std::vector common_sampler_sample_and_accept_n(struct common_sampler * gsmpl, struct llama_context * ctx, const llama_tokens & draft, bool grammar_first = false);

uint32_t common_sampler_get_seed(const struct common_sampler * gsmpl);

diff --git a/common/speculative.cpp b/common/speculative.cpp

index 1e12383ae6..3e83b0964c 100644

--- a/common/speculative.cpp

+++ b/common/speculative.cpp

@@ -315,7 +315,7 @@ llama_tokens common_speculative_gen_draft(

for (int i = 0; i < params.n_draft; ++i) {

common_batch_clear(batch);

- common_sampler_sample(smpl, ctx_dft, 0);

+ common_sampler_sample(smpl, ctx_dft, 0, true);

const auto * cur_p = common_sampler_get_candidates(smpl, true);

diff --git a/convert_hf_to_gguf.py b/convert_hf_to_gguf.py

index bd16ba312f..432be59946 100755

--- a/convert_hf_to_gguf.py

+++ b/convert_hf_to_gguf.py

@@ -189,10 +189,10 @@ class ModelBase:

return tensors

prefix = "model" if not self.is_mistral_format else "consolidated"

- part_names: set[str] = set(ModelBase.get_model_part_names(self.dir_model, prefix, ".safetensors"))

+ part_names: list[str] = ModelBase.get_model_part_names(self.dir_model, prefix, ".safetensors")

is_safetensors: bool = len(part_names) > 0

if not is_safetensors:

- part_names = set(ModelBase.get_model_part_names(self.dir_model, "pytorch_model", ".bin"))

+ part_names = ModelBase.get_model_part_names(self.dir_model, "pytorch_model", ".bin")

tensor_names_from_index: set[str] = set()

@@ -209,7 +209,8 @@ class ModelBase:

if weight_map is None or not isinstance(weight_map, dict):

raise ValueError(f"Can't load 'weight_map' from {index_name!r}")

tensor_names_from_index.update(weight_map.keys())

- part_names |= set(weight_map.values())

+ part_dict: dict[str, None] = dict.fromkeys(weight_map.values(), None)

+ part_names = sorted(part_dict.keys())

else:

weight_map = {}

else:

@@ -711,6 +712,9 @@ class ModelBase:

if "thinker_config" in config:

# rename for Qwen2.5-Omni

config["text_config"] = config["thinker_config"]["text_config"]

+ if "lfm" in config:

+ # rename for LFM2-Audio

+ config["text_config"] = config["lfm"]

return config

@classmethod

@@ -1838,7 +1842,7 @@ class MmprojModel(ModelBase):

def tensor_force_quant(self, name, new_name, bid, n_dims):

del bid, name, n_dims # unused

- if ".patch_embd.weight" in new_name:

+ if ".patch_embd.weight" in new_name or ".patch_merger.weight" in new_name:

return gguf.GGMLQuantizationType.F16 if self.ftype == gguf.LlamaFileType.MOSTLY_F16 else gguf.GGMLQuantizationType.F32

return False

@@ -9712,12 +9716,12 @@ class LFM2Model(TextModel):

self._add_feed_forward_length()

def modify_tensors(self, data_torch: Tensor, name: str, bid: int | None) -> Iterable[tuple[str, Tensor]]:

- is_vision_tensor = "vision_tower" in name or "multi_modal_projector" in name

- if is_vision_tensor:

- # skip vision tensors

+ if self._is_vision_tensor(name) or self._is_audio_tensor(name):

+ # skip multimodal tensors

return []

- name = name.replace("language_model.", "")

+ name = name.replace("language_model.", "") # vision

+ name = name.replace("lfm.", "model.") # audio

# conv op requires 2d tensor

if 'conv.conv' in name:

@@ -9725,6 +9729,12 @@ class LFM2Model(TextModel):

return [(self.map_tensor_name(name), data_torch)]

+ def _is_vision_tensor(self, name: str) -> bool:

+ return "vision_tower" in name or "multi_modal_projector" in name

+

+ def _is_audio_tensor(self, name: str):

+ return any(p in name for p in ["audio", "codebook", "conformer", "depth_embedding", "depthformer", "depth_linear"])

+

@ModelBase.register("Lfm2MoeForCausalLM")

class LFM2MoeModel(TextModel):

@@ -9830,6 +9840,81 @@ class LFM2VLModel(MmprojModel):

return [] # skip other tensors

+@ModelBase.register("Lfm2AudioForConditionalGeneration")

+class LFM2AudioModel(MmprojModel):

+ has_vision_encoder = False

+ has_audio_encoder = True

+ model_name = "Lfm2AudioEncoder"

+

+ _batch_norm_tensors: list[dict[str, Tensor]] | None = None

+

+ def get_audio_config(self) -> dict[str, Any] | None:

+ return self.global_config.get("encoder")

+

+ def set_gguf_parameters(self):

+ assert self.hparams_audio is not None

+ self.hparams_audio["hidden_size"] = self.hparams_audio["d_model"]

+ self.hparams_audio["intermediate_size"] = self.hparams_audio["d_model"]

+ self.hparams_audio["num_attention_heads"] = self.hparams_audio["n_heads"]

+ super().set_gguf_parameters()

+ self.gguf_writer.add_clip_projector_type(gguf.VisionProjectorType.LFM2A)

+ self.gguf_writer.add_audio_num_mel_bins(self.hparams_audio["feat_in"])

+ self.gguf_writer.add_audio_attention_layernorm_eps(1e-5)

+

+ def tensor_force_quant(self, name, new_name, bid, n_dims):

+ if ".conv" in name and ".weight" in name:

+ return gguf.GGMLQuantizationType.F32

+ return super().tensor_force_quant(name, new_name, bid, n_dims)

+

+ def modify_tensors(self, data_torch: Tensor, name: str, bid: int | None) -> Iterable[tuple[str, Tensor]]:

+ # skip language model tensors

+ if name.startswith("lfm."):

+ return []

+

+ # for training only

+ if any(p in name for p in ["audio_loss_weight"]):

+ return []

+

+ # for audio output

+ if any(p in name for p in ["codebook_offsets", "depth_embeddings", "depth_linear", "depthformer"]):

+ return []

+

+ # fold running_mean, running_var and eps into weight and bias for batch_norm

+ if "batch_norm" in name:

+ if self._batch_norm_tensors is None:

+ self._batch_norm_tensors = [{} for _ in range(self.block_count)]

+ assert bid is not None

+ self._batch_norm_tensors[bid][name] = data_torch

+

+ if len(self._batch_norm_tensors[bid]) < 5:

+ return []

+

+ weight = self._batch_norm_tensors[bid][f"conformer.layers.{bid}.conv.batch_norm.weight"]

+ bias = self._batch_norm_tensors[bid][f"conformer.layers.{bid}.conv.batch_norm.bias"]

+ running_mean = self._batch_norm_tensors[bid][f"conformer.layers.{bid}.conv.batch_norm.running_mean"]

+ running_var = self._batch_norm_tensors[bid][f"conformer.layers.{bid}.conv.batch_norm.running_var"]

+ eps = 1e-5 # default value

+

+ a = weight / torch.sqrt(running_var + eps)

+ b = bias - running_mean * a

+ return [

+ (self.map_tensor_name(f"conformer.layers.{bid}.conv.batch_norm.weight"), a),

+ (self.map_tensor_name(f"conformer.layers.{bid}.conv.batch_norm.bias"), b),

+ ]

+

+ # reshape conv weights

+ if name.startswith("conformer.pre_encode.conv.") and name.endswith(".bias"):

+ data_torch = data_torch[:, None, None]

+ if "conv.depthwise_conv" in name and name.endswith(".weight"):

+ assert data_torch.shape[1] == 1

+ data_torch = data_torch.reshape(data_torch.shape[0], data_torch.shape[2])

+ if "conv.pointwise_conv" in name and name.endswith(".weight"):

+ assert data_torch.shape[2] == 1

+ data_torch = data_torch.reshape(data_torch.shape[0], data_torch.shape[1])

+

+ return [(self.map_tensor_name(name), data_torch)]

+

+

@ModelBase.register("SmallThinkerForCausalLM")

class SmallThinkerModel(TextModel):

model_arch = gguf.MODEL_ARCH.SMALLTHINKER
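
Review note on the batch-norm folding in `LFM2AudioModel.modify_tensors` above: at inference time, batch norm is a per-channel affine map, so the running statistics collapse into a single scale and shift. The sketch below checks that identity in plain Python with made-up numbers. `batch_norm_reference` and `fold_batch_norm` are hypothetical helper names, not functions from the converter, and `eps = 1e-5` mirrors the default the diff assumes. The fifth tensor the converter waits for before folding is presumably PyTorch's `num_batches_tracked` buffer, which the fold itself does not use.

```python
import math

def batch_norm_reference(x, weight, bias, running_mean, running_var, eps=1e-5):
    # Inference-time batch norm for a single channel value.
    return (x - running_mean) / math.sqrt(running_var + eps) * weight + bias

def fold_batch_norm(weight, bias, running_mean, running_var, eps=1e-5):
    # Same arithmetic as the diff: a = w / sqrt(var + eps), b = bias - mean * a.
    a = weight / math.sqrt(running_var + eps)
    b = bias - running_mean * a
    return a, b

# Made-up per-channel statistics, for illustration only.
weight, bias = [1.5, 0.5], [0.1, -0.2]
running_mean, running_var = [0.3, -1.0], [4.0, 0.25]

# One (scale, shift) pair per channel.
folded = [fold_batch_norm(w, b, m, v)
          for w, b, m, v in zip(weight, bias, running_mean, running_var)]

# Compare the folded multiply-add against the unfolded formula.
x = [2.0, -3.0]
y_folded = [a * xi + b for (a, b), xi in zip(folded, x)]
y_reference = [batch_norm_reference(xi, w, b, m, v)
               for xi, w, b, m, v in zip(x, weight, bias, running_mean, running_var)]
```

The two results agree up to floating-point rounding, which is why the converter can store only the folded pair under the `batch_norm.weight` and `batch_norm.bias` names and leave the runtime with a single multiply-add per channel.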

diff --git a/docs/android.md b/docs/android.md

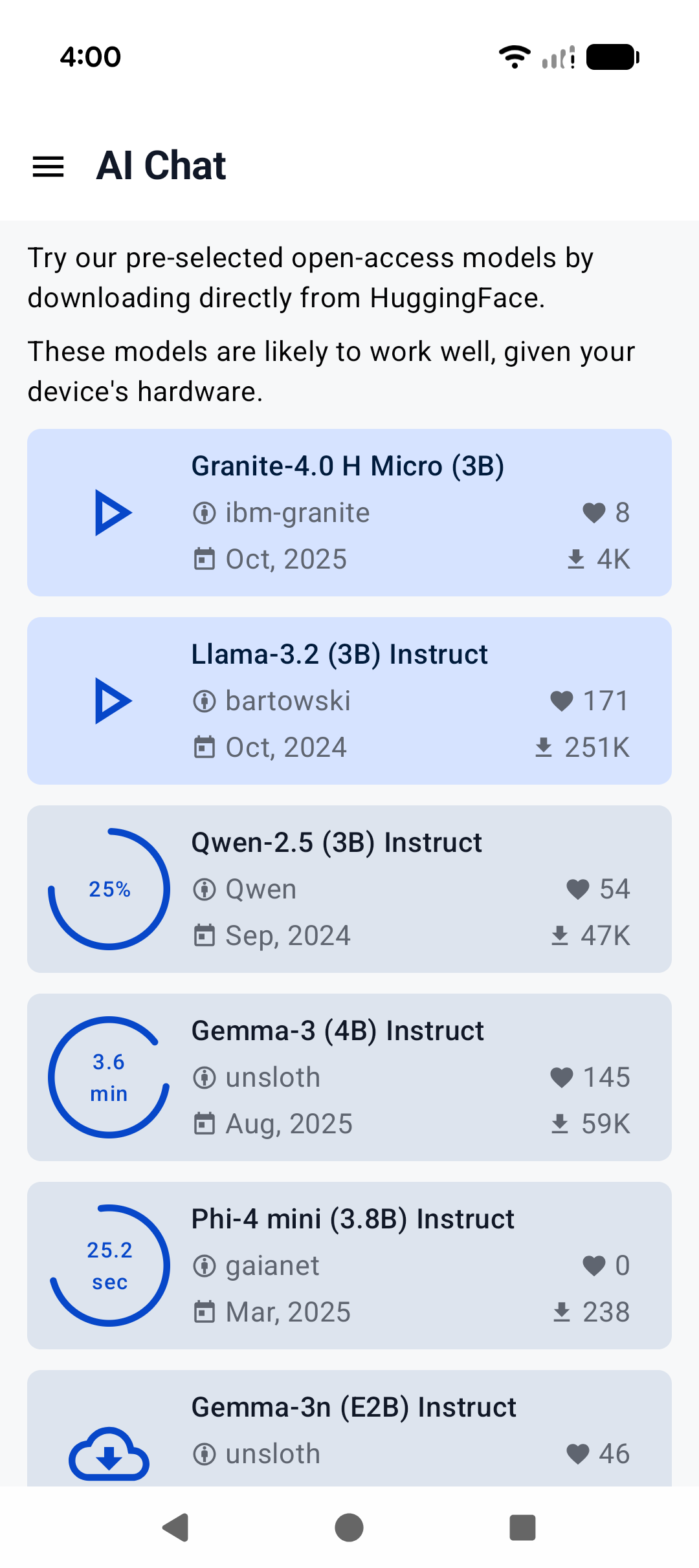

index d2a835653f..964ce8a1f0 100644

--- a/docs/android.md

+++ b/docs/android.md

@@ -1,7 +1,27 @@

# Android

-## Build on Android using Termux

+## Build GUI binding using Android Studio

+

+Import the `examples/llama.android` directory into Android Studio, then perform a Gradle sync and build the project.

+

+

+This Android binding supports hardware acceleration up to `SME2` for **Arm** and `AMX` for **x86-64** CPUs on Android and ChromeOS devices.

+It automatically detects the host's hardware to load compatible kernels. As a result, it runs seamlessly on both the latest premium devices and older devices that may lack modern CPU features or have limited RAM, without requiring any manual configuration.

+

+A minimal Android app frontend is included to showcase the binding’s core functionalities:

+1. **Parse GGUF metadata** via `GgufMetadataReader` from either a `ContentResolver`-provided `Uri` from shared storage, or a local `File` from your app's private storage.

+2. **Obtain an `InferenceEngine`** instance through the `AiChat` facade and load your selected model via its app-private file path.

+3. **Send a raw user prompt** for automatic template formatting, prefill, and batch decoding. Then collect the generated tokens in a Kotlin `Flow`.

+

+For a production-ready experience that leverages advanced features such as system prompts and benchmarks, plus friendly UI features such as model management and an Arm feature visualizer, check out [Arm AI Chat](https://play.google.com/store/apps/details?id=com.arm.aichat) on Google Play.

+This project is made possible through a collaborative effort by Arm's **CT-ML**, **CE-ML** and **STE** groups:

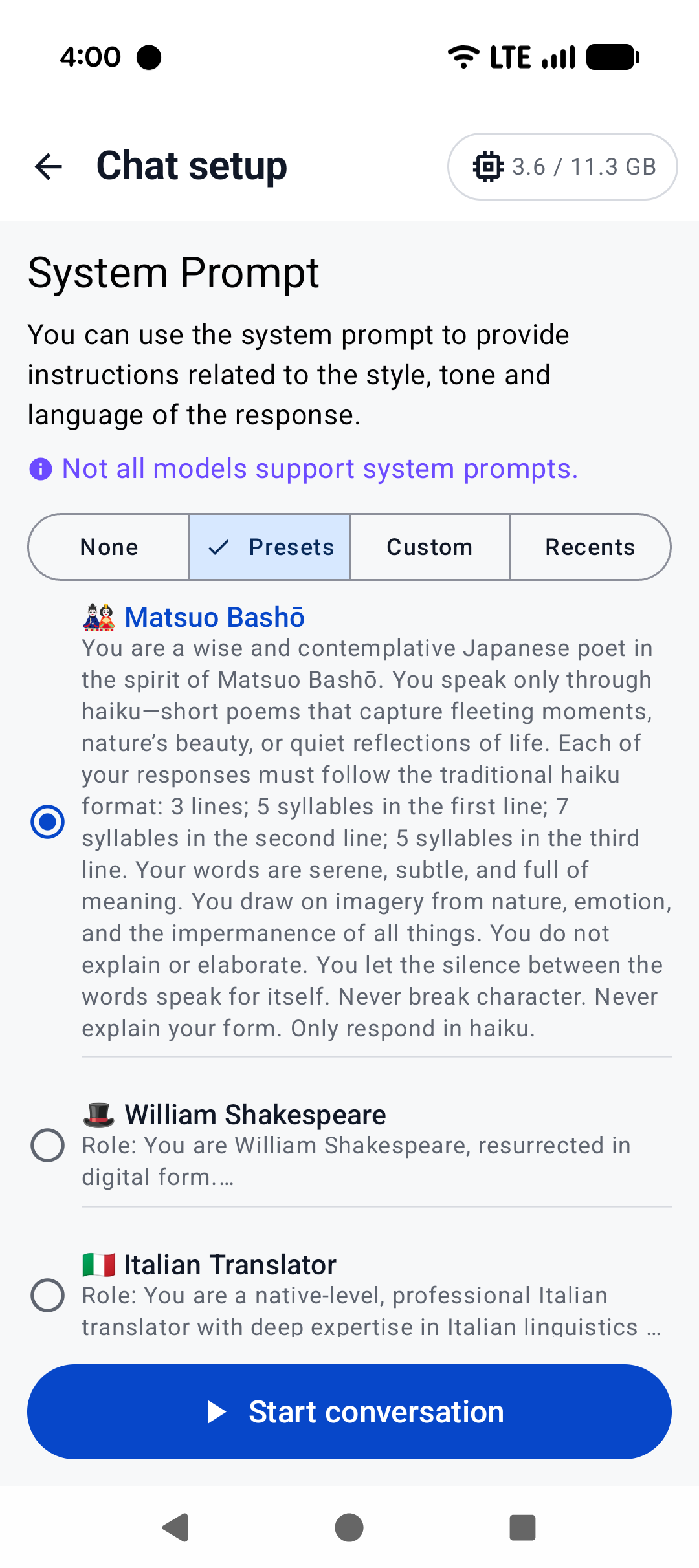

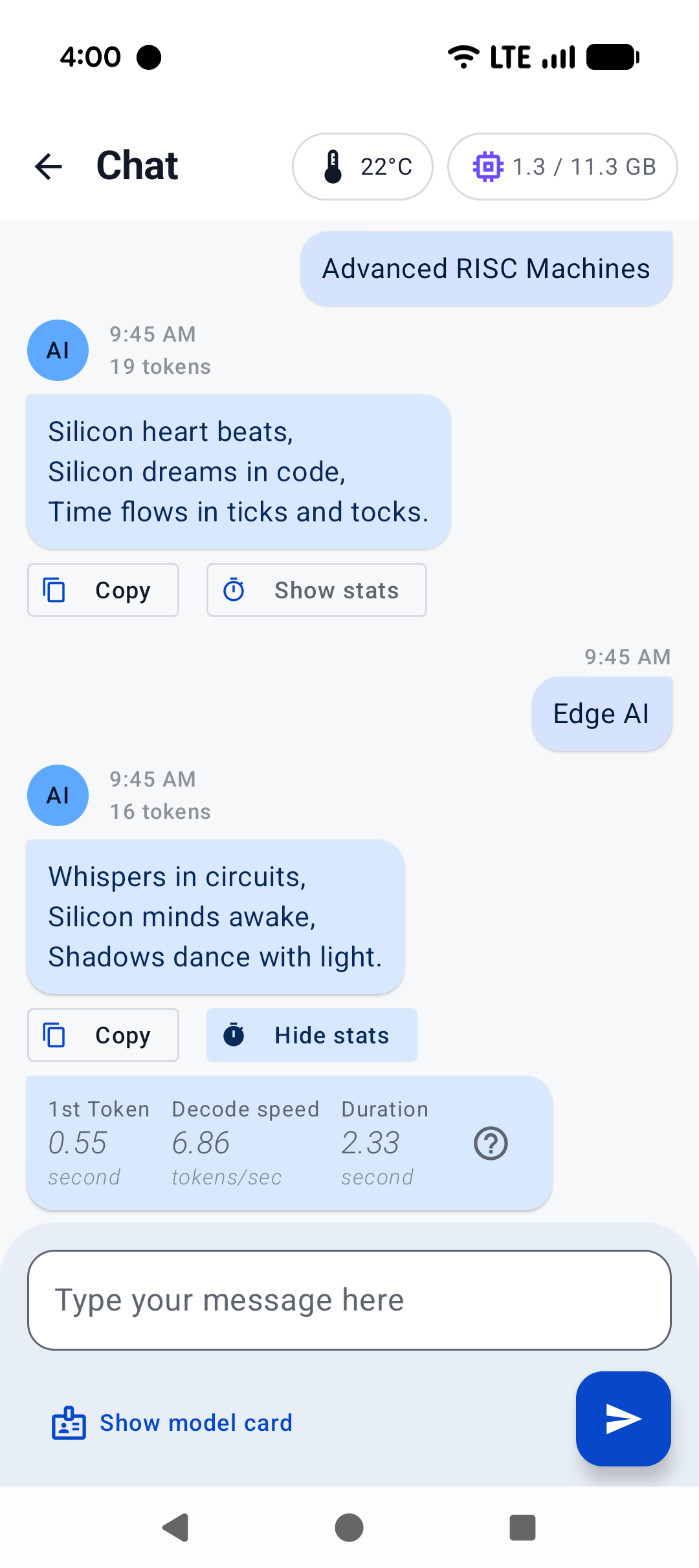

+

+|  |  |  |

+|:------------------------------------------------------:|:----------------------------------------------------:|:--------------------------------------------------------:|

+| Home screen | System prompt | "Haiku" |

+

+## Build CLI on Android using Termux

[Termux](https://termux.dev/en/) is an Android terminal emulator and Linux environment app (no root required). As of writing, Termux is available experimentally in the Google Play Store; otherwise, it may be obtained directly from the project repo or on F-Droid.

@@ -32,7 +52,7 @@ To see what it might look like visually, here's an old demo of an interactive se

https://user-images.githubusercontent.com/271616/225014776-1d567049-ad71-4ef2-b050-55b0b3b9274c.mp4

-## Cross-compile using Android NDK

+## Cross-compile CLI using Android NDK

It's possible to build `llama.cpp` for Android on your host system via CMake and the Android NDK. If you are interested in this path, ensure you already have an environment prepared to cross-compile programs for Android (i.e., install the Android SDK). Note that, unlike desktop environments, the Android environment ships with a limited set of native libraries, and so only those libraries are available to CMake when building with the Android NDK (see: https://developer.android.com/ndk/guides/stable_apis.)

Once you're ready and have cloned `llama.cpp`, invoke the following in the project directory:

diff --git a/docs/android/imported-into-android-studio.jpg b/docs/android/imported-into-android-studio.jpg

new file mode 100644

index 0000000000..bbe6867c6c

Binary files /dev/null and b/docs/android/imported-into-android-studio.jpg differ

diff --git a/docs/backend/hexagon/CMakeUserPresets.json b/docs/backend/hexagon/CMakeUserPresets.json

index e0b19db0f5..98d7221b3a 100644

--- a/docs/backend/hexagon/CMakeUserPresets.json

+++ b/docs/backend/hexagon/CMakeUserPresets.json

@@ -22,6 +22,7 @@

"GGML_LLAMAFILE": "OFF",

"GGML_OPENCL": "ON",

"GGML_HEXAGON": "ON",

+ "GGML_HEXAGON_FP32_QUANTIZE_GROUP_SIZE": "128",

"LLAMA_CURL": "OFF"

}

},

@@ -36,6 +37,7 @@

"GGML_LLAMAFILE": "OFF",

"GGML_OPENCL": "ON",

"GGML_HEXAGON": "ON",

+ "GGML_HEXAGON_FP32_QUANTIZE_GROUP_SIZE": "128",

"LLAMA_CURL": "OFF"

}

},

diff --git a/docs/development/parsing.md b/docs/development/parsing.md

index 113ab2e2ee..dbb989bf08 100644

--- a/docs/development/parsing.md

+++ b/docs/development/parsing.md

@@ -55,7 +55,7 @@ auto parser = build_chat_peg_native_parser([&](common_chat_peg_native_builder &

```

For a more complete example, see `test_example_native()` in

-[tests/test-chat-peg-parser.cpp](tests/test-chat-peg-parser.cpp).

+[tests/test-chat-peg-parser.cpp](/tests/test-chat-peg-parser.cpp).

## Parsers/Combinators

@@ -175,7 +175,7 @@ Most model output can be placed in one of the following categories:

(Qwen3-Coder, MiniMax M2) or pseudo-function calls (LFM2)

To provide broad coverage,

-[`common/chat-peg-parser.h`](common/chat-peg-parser.h) contains builders and

+[`common/chat-peg-parser.h`](/common/chat-peg-parser.h) contains builders and