Merge remote-tracking branch 'upstream/master' into backend-sampling

commit bc5195c585

@@ -873,7 +873,9 @@ common_params_context common_params_parser_init(common_params & params, llama_ex
         sampler_type_chars += common_sampler_type_to_chr(sampler);
         sampler_type_names += common_sampler_type_to_str(sampler) + ";";
     }
-    sampler_type_names.pop_back();
+    if (!sampler_type_names.empty()) {
+        sampler_type_names.pop_back(); // remove last semicolon
+    }


     /**

@@ -1194,7 +1196,7 @@ common_params_context common_params_parser_init(common_params & params, llama_ex
         [](common_params & params, const std::string & value) {
             params.system_prompt = value;
         }
-    ).set_examples({LLAMA_EXAMPLE_COMPLETION, LLAMA_EXAMPLE_CLI, LLAMA_EXAMPLE_DIFFUSION}));
+    ).set_examples({LLAMA_EXAMPLE_COMPLETION, LLAMA_EXAMPLE_CLI, LLAMA_EXAMPLE_DIFFUSION, LLAMA_EXAMPLE_MTMD}));
     add_opt(common_arg(
         {"--perf"},
         {"--no-perf"},

@@ -712,6 +712,9 @@ class ModelBase:
         if "thinker_config" in config:
             # rename for Qwen2.5-Omni
             config["text_config"] = config["thinker_config"]["text_config"]
+        if "lfm" in config:
+            # rename for LFM2-Audio
+            config["text_config"] = config["lfm"]
         return config

     @classmethod

@@ -9713,12 +9716,12 @@ class LFM2Model(TextModel):
         self._add_feed_forward_length()

     def modify_tensors(self, data_torch: Tensor, name: str, bid: int | None) -> Iterable[tuple[str, Tensor]]:
-        is_vision_tensor = "vision_tower" in name or "multi_modal_projector" in name
-        if is_vision_tensor:
-            # skip vision tensors
+        if self._is_vision_tensor(name) or self._is_audio_tensor(name):
+            # skip multimodal tensors
             return []

-        name = name.replace("language_model.", "")
+        name = name.replace("language_model.", "") # vision
+        name = name.replace("lfm.", "model.") # audio

         # conv op requires 2d tensor
         if 'conv.conv' in name:

@@ -9726,6 +9729,12 @@ class LFM2Model(TextModel):

         return [(self.map_tensor_name(name), data_torch)]

+    def _is_vision_tensor(self, name: str) -> bool:
+        return "vision_tower" in name or "multi_modal_projector" in name
+
+    def _is_audio_tensor(self, name: str):
+        return any(p in name for p in ["audio", "codebook", "conformer", "depth_embedding", "depthformer", "depth_linear"])
+

 @ModelBase.register("Lfm2MoeForCausalLM")
 class LFM2MoeModel(TextModel):
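
The skip-and-rename logic in `LFM2Model.modify_tensors` can be tried in isolation. The sketch below is a standalone approximation, not the converter's code: the free functions and the sample tensor names are hypothetical, and only the substring patterns and the two `replace` calls are taken from the hunks above.

```python
# Patterns copied from _is_audio_tensor in the diff above.
AUDIO_PATTERNS = ["audio", "codebook", "conformer", "depth_embedding", "depthformer", "depth_linear"]


def is_vision_tensor(name: str) -> bool:
    return "vision_tower" in name or "multi_modal_projector" in name


def is_audio_tensor(name: str) -> bool:
    return any(p in name for p in AUDIO_PATTERNS)


def text_model_name(name: str):
    """Return the renamed tensor for the text model, or None when it is skipped."""
    if is_vision_tensor(name) or is_audio_tensor(name):
        return None
    name = name.replace("language_model.", "")  # vision checkpoints
    name = name.replace("lfm.", "model.")       # audio checkpoints
    return name


# Hypothetical tensor names, chosen only to exercise each branch.
kept = text_model_name("lfm.layers.0.feed_forward.w1.weight")
skipped_audio = text_model_name("conformer.layers.0.conv.batch_norm.weight")
skipped_vision = text_model_name("model.vision_tower.encoder.weight")
```

Because the checks are plain substring matches, any text-model tensor whose name happened to contain one of the audio patterns would also be skipped.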

@@ -9831,6 +9840,81 @@ class LFM2VLModel(MmprojModel):
         return [] # skip other tensors


+@ModelBase.register("Lfm2AudioForConditionalGeneration")
+class LFM2AudioModel(MmprojModel):
+    has_vision_encoder = False
+    has_audio_encoder = True
+    model_name = "Lfm2AudioEncoder"
+
+    _batch_norm_tensors: list[dict[str, Tensor]] | None = None
+
+    def get_audio_config(self) -> dict[str, Any] | None:
+        return self.global_config.get("encoder")
+
+    def set_gguf_parameters(self):
+        assert self.hparams_audio is not None
+        self.hparams_audio["hidden_size"] = self.hparams_audio["d_model"]
+        self.hparams_audio["intermediate_size"] = self.hparams_audio["d_model"]
+        self.hparams_audio["num_attention_heads"] = self.hparams_audio["n_heads"]
+        super().set_gguf_parameters()
+        self.gguf_writer.add_clip_projector_type(gguf.VisionProjectorType.LFM2A)
+        self.gguf_writer.add_audio_num_mel_bins(self.hparams_audio["feat_in"])
+        self.gguf_writer.add_audio_attention_layernorm_eps(1e-5)
+
+    def tensor_force_quant(self, name, new_name, bid, n_dims):
+        if ".conv" in name and ".weight" in name:
+            return gguf.GGMLQuantizationType.F32
+        return super().tensor_force_quant(name, new_name, bid, n_dims)
+
+    def modify_tensors(self, data_torch: Tensor, name: str, bid: int | None) -> Iterable[tuple[str, Tensor]]:
+        # skip language model tensors
+        if name.startswith("lfm."):
+            return []
+
+        # for training only
+        if any(p in name for p in ["audio_loss_weight"]):
+            return []
+
+        # for audio output
+        if any(p in name for p in ["codebook_offsets", "depth_embeddings", "depth_linear", "depthformer"]):
+            return []
+
+        # fold running_mean, running_var and eps into weight and bias for batch_norm
+        if "batch_norm" in name:
+            if self._batch_norm_tensors is None:
+                self._batch_norm_tensors = [{} for _ in range(self.block_count)]
+            assert bid is not None
+            self._batch_norm_tensors[bid][name] = data_torch
+
+            if len(self._batch_norm_tensors[bid]) < 5:
+                return []
+
+            weight = self._batch_norm_tensors[bid][f"conformer.layers.{bid}.conv.batch_norm.weight"]
+            bias = self._batch_norm_tensors[bid][f"conformer.layers.{bid}.conv.batch_norm.bias"]
+            running_mean = self._batch_norm_tensors[bid][f"conformer.layers.{bid}.conv.batch_norm.running_mean"]
+            running_var = self._batch_norm_tensors[bid][f"conformer.layers.{bid}.conv.batch_norm.running_var"]
+            eps = 1e-5 # default value
+
+            a = weight / torch.sqrt(running_var + eps)
+            b = bias - running_mean * a
+            return [
+                (self.map_tensor_name(f"conformer.layers.{bid}.conv.batch_norm.weight"), a),
+                (self.map_tensor_name(f"conformer.layers.{bid}.conv.batch_norm.bias"), b),
+            ]
+
+        # reshape conv weights
+        if name.startswith("conformer.pre_encode.conv.") and name.endswith(".bias"):
+            data_torch = data_torch[:, None, None]
+        if "conv.depthwise_conv" in name and name.endswith(".weight"):
+            assert data_torch.shape[1] == 1
+            data_torch = data_torch.reshape(data_torch.shape[0], data_torch.shape[2])
+        if "conv.pointwise_conv" in name and name.endswith(".weight"):
+            assert data_torch.shape[2] == 1
+            data_torch = data_torch.reshape(data_torch.shape[0], data_torch.shape[1])
+
+        return [(self.map_tensor_name(name), data_torch)]
+
+
 @ModelBase.register("SmallThinkerForCausalLM")
 class SmallThinkerModel(TextModel):
     model_arch = gguf.MODEL_ARCH.SMALLTHINKER
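
The batch-norm folding in `LFM2AudioModel.modify_tensors` works because inference-time batch norm is a per-channel affine map, so the running statistics can be absorbed into a scale `a` and shift `b`. The sketch below checks that identity in pure Python. It is an illustration under assumptions: `fold_batch_norm` and `batch_norm` are hypothetical helpers, plain lists stand in for tensors, and the sample numbers are made up.

```python
import math


def fold_batch_norm(weight, bias, running_mean, running_var, eps=1e-5):
    """Fold running statistics into a per-channel scale and shift.

    Mirrors a = weight / sqrt(running_var + eps) and b = bias - running_mean * a.
    """
    a = [w / math.sqrt(v + eps) for w, v in zip(weight, running_var)]
    b = [bi - m * ai for bi, m, ai in zip(bias, running_mean, a)]
    return a, b


def batch_norm(x, weight, bias, running_mean, running_var, eps=1e-5):
    """Reference (unfolded) inference-time batch norm, one value per channel."""
    return [
        (xi - m) / math.sqrt(v + eps) * w + bi
        for xi, w, bi, m, v in zip(x, weight, bias, running_mean, running_var)
    ]


# Made-up two-channel example.
weight = [1.5, 0.5]
bias = [0.1, -0.2]
running_mean = [0.3, -1.0]
running_var = [4.0, 0.25]
x = [2.0, 3.0]

a, b = fold_batch_norm(weight, bias, running_mean, running_var)
folded = [ai * xi + bi for ai, xi, bi in zip(a, x, b)]
reference = batch_norm(x, weight, bias, running_mean, running_var)
```

After folding, only two tensors per block need to be written, and the runtime can apply `a * x + b` without knowing the running statistics or `eps`.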

@@ -1,27 +1,27 @@

|

|||

|

||||

# Android

|

||||

|

||||

## Build with Android Studio

|

||||

## Build GUI binding using Android Studio

|

||||

|

||||

Import the `examples/llama.android` directory into Android Studio, then perform a Gradle sync and build the project.

|

||||

|

||||

|

||||

|

||||

This Android binding supports hardware acceleration up to `SME2` for **Arm** and `AMX` for **x86-64** CPUs on Android and ChromeOS devices.

|

||||

It automatically detects the host's hardware to load compatible kernels. As a result, it runs seamlessly on both the latest premium devices and older devices that may lack modern CPU features or have limited RAM, without requiring any manual configuration.

|

||||

|

||||

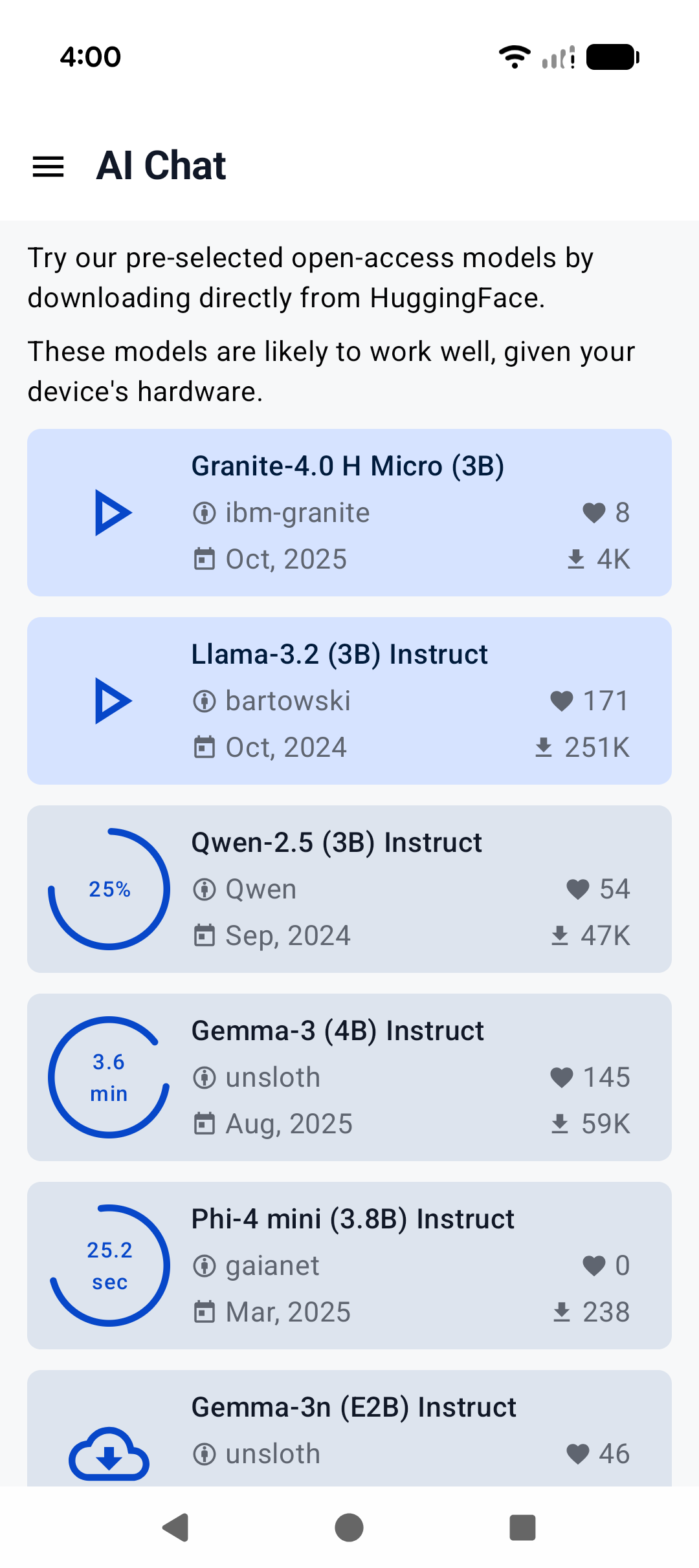

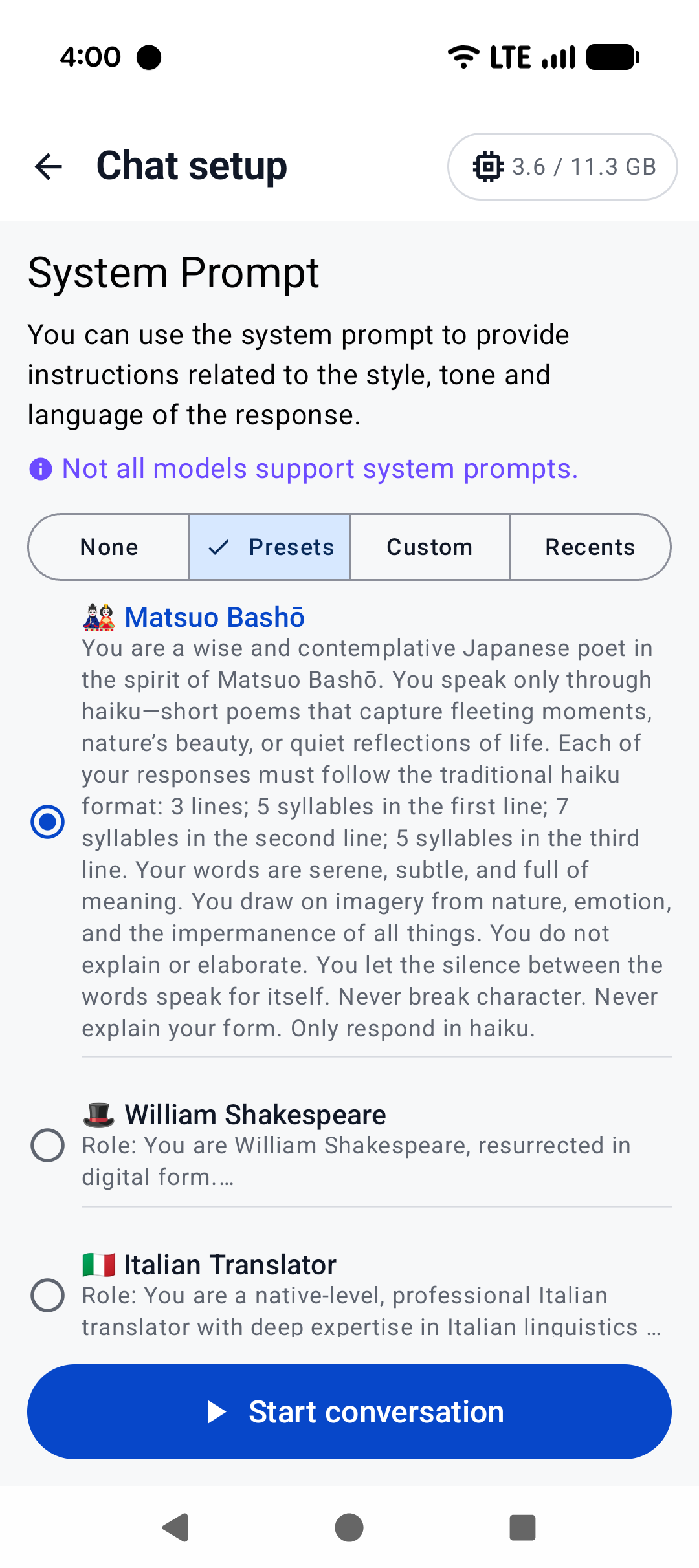

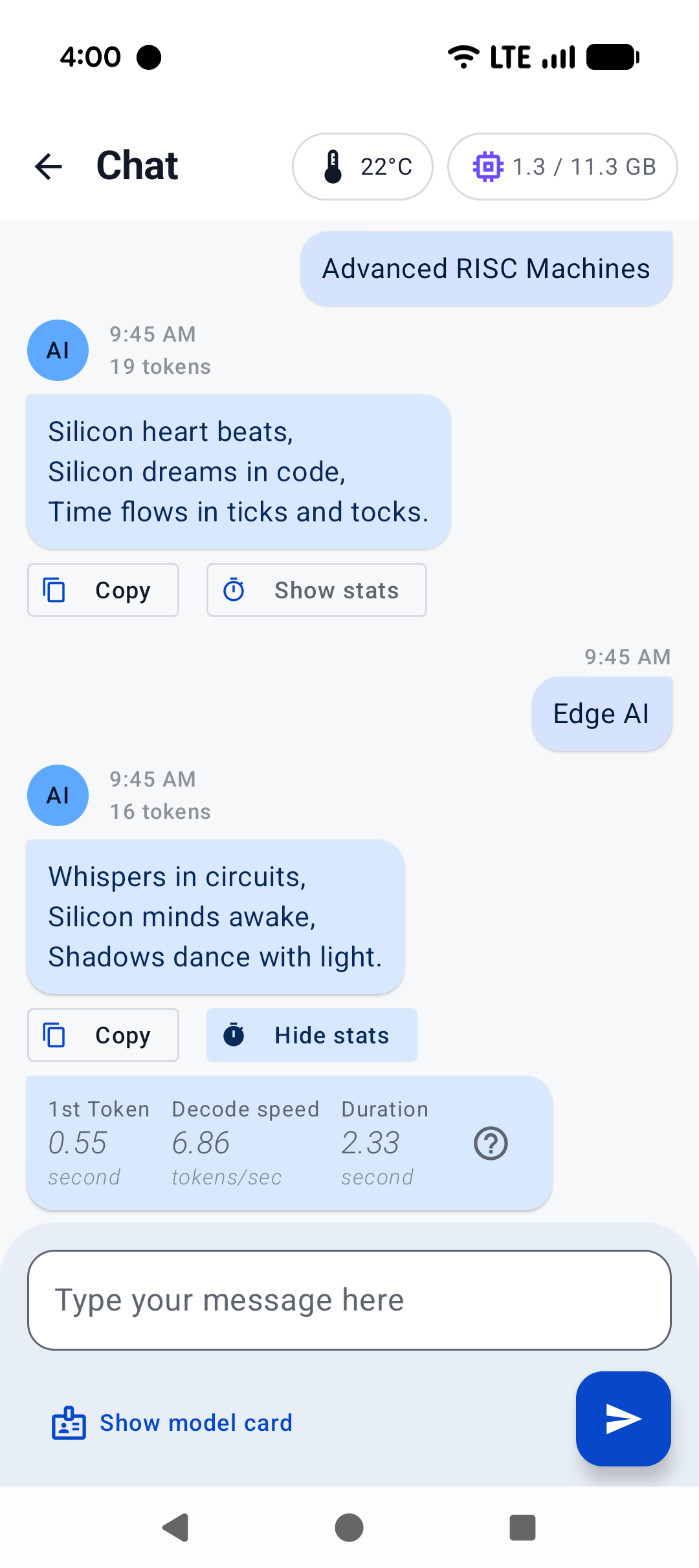

A minimal Android app frontend is included to showcase the binding’s core functionalities:

|

||||

1. **Parse GGUF metadata** via `GgufMetadataReader` from either a `ContentResolver` provided `Uri` or a local `File`.

|

||||

2. **Obtain a `TierDetection` or `InferenceEngine`** instance through the high-level facade APIs.

|

||||

3. **Send a raw user prompt** for automatic template formatting, prefill, and decoding. Then collect the generated tokens in a Kotlin `Flow`.

|

||||

1. **Parse GGUF metadata** via `GgufMetadataReader` from either a `ContentResolver` provided `Uri` from shared storage, or a local `File` from your app's private storage.

|

||||

2. **Obtain a `InferenceEngine`** instance through the `AiChat` facade and load your selected model via its app-private file path.

|

||||

3. **Send a raw user prompt** for automatic template formatting, prefill, and batch decoding. Then collect the generated tokens in a Kotlin `Flow`.

|

||||

|

||||

For a production-ready experience that leverages advanced features such as system prompts and benchmarks, check out [Arm AI Chat](https://play.google.com/store/apps/details?id=com.arm.aichat) on Google Play.

|

||||

For a production-ready experience that leverages advanced features such as system prompts and benchmarks, plus friendly UI features such as model management and Arm feature visualizer, check out [Arm AI Chat](https://play.google.com/store/apps/details?id=com.arm.aichat) on Google Play.

|

||||

This project is made possible through a collaborative effort by Arm's **CT-ML**, **CE-ML** and **STE** groups:

|

||||

|

||||

|  |  |  |

|

||||

|  |  |  |

|

||||

|:------------------------------------------------------:|:----------------------------------------------------:|:--------------------------------------------------------:|

|

||||

| Home screen | System prompt | "Haiku" |

|

||||

|

||||

## Build on Android using Termux

|

||||

## Build CLI on Android using Termux

|

||||

|

||||

[Termux](https://termux.dev/en/) is an Android terminal emulator and Linux environment app (no root required). As of writing, Termux is available experimentally in the Google Play Store; otherwise, it may be obtained directly from the project repo or on F-Droid.

|

||||

|

||||

|

|

@@ -52,7 +52,7 @@ To see what it might look like visually, here's an old demo of an interactive se

 https://user-images.githubusercontent.com/271616/225014776-1d567049-ad71-4ef2-b050-55b0b3b9274c.mp4

-## Cross-compile using Android NDK
+## Cross-compile CLI using Android NDK
 It's possible to build `llama.cpp` for Android on your host system via CMake and the Android NDK. If you are interested in this path, ensure you already have an environment prepared to cross-compile programs for Android (i.e., install the Android SDK). Note that, unlike desktop environments, the Android environment ships with a limited set of native libraries, and so only those libraries are available to CMake when building with the Android NDK (see: https://developer.android.com/ndk/guides/stable_apis.)

 Once you're ready and have cloned `llama.cpp`, invoke the following in the project directory:

Binary file not shown.

|

After Width: | Height: | Size: 479 KiB |

|

|

@@ -1,55 +1,57 @@

|

|||

<?xml version="1.0" encoding="utf-8"?>

|

||||

<androidx.constraintlayout.widget.ConstraintLayout xmlns:android="http://schemas.android.com/apk/res/android"

|

||||

xmlns:app="http://schemas.android.com/apk/res-auto"

|

||||

xmlns:tools="http://schemas.android.com/tools"

|

||||

android:id="@+id/main"

|

||||

android:layout_height="match_parent"

|

||||

android:layout_width="match_parent">

|

||||

xmlns:tools="http://schemas.android.com/tools"

|

||||

android:id="@+id/main"

|

||||

android:layout_height="match_parent"

|

||||

android:layout_width="match_parent">

|

||||

|

||||

<LinearLayout

|

||||

android:fitsSystemWindows="true"

|

||||

android:layout_width="match_parent"

|

||||

android:layout_height="match_parent"

|

||||

android:orientation="vertical"

|

||||

android:layout_marginEnd="4dp"

|

||||

tools:context=".MainActivity">

|

||||

|

||||

<FrameLayout

|

||||

<ScrollView

|

||||

android:layout_width="match_parent"

|

||||

android:layout_height="0dp"

|

||||

android:layout_weight="1">

|

||||

android:layout_weight="1"

|

||||

android:fadeScrollbars="false">

|

||||

|

||||

<ScrollView

|

||||

<TextView

|

||||

android:id="@+id/gguf"

|

||||

android:layout_width="match_parent"

|

||||

android:layout_height="wrap_content"

|

||||

android:fadeScrollbars="false">

|

||||

android:layout_margin="16dp"

|

||||

android:text="Selected GGUF model's metadata will show here."

|

||||

style="@style/TextAppearance.MaterialComponents.Body2" />

|

||||

|

||||

<TextView

|

||||

android:id="@+id/gguf"

|

||||

android:layout_width="match_parent"

|

||||

android:layout_height="wrap_content"

|

||||

android:layout_margin="16dp"

|

||||

android:text="Selected GGUF model's metadata will show here."

|

||||

style="@style/TextAppearance.MaterialComponents.Body2"

|

||||

android:maxLines="100" />

|

||||

</ScrollView>

|

||||

|

||||

</ScrollView>

|

||||

|

||||

</FrameLayout>

|

||||

<com.google.android.material.divider.MaterialDivider

|

||||

android:layout_width="match_parent"

|

||||

android:layout_height="2dp"

|

||||

android:layout_marginHorizontal="16dp"

|

||||

android:layout_marginVertical="8dp" />

|

||||

|

||||

<androidx.recyclerview.widget.RecyclerView

|

||||

android:id="@+id/messages"

|

||||

android:layout_width="match_parent"

|

||||

android:layout_height="0dp"

|

||||

android:layout_weight="4"

|

||||

android:padding="16dp"

|

||||

android:fadeScrollbars="false"

|

||||

android:scrollbars="vertical"

|

||||

app:reverseLayout="true"

|

||||

tools:listitem="@layout/item_message_assistant"/>

|

||||

|

||||

<LinearLayout

|

||||

android:layout_width="match_parent"

|

||||

android:layout_height="wrap_content"

|

||||

android:orientation="horizontal">

|

||||

android:orientation="horizontal"

|

||||

android:paddingStart="16dp"

|

||||

android:paddingEnd="4dp">

|

||||

|

||||

<EditText

|

||||

android:id="@+id/user_input"

|

||||

|

|

@@ -67,7 +69,7 @@
             style="@style/Widget.Material3.FloatingActionButton.Primary"
             android:layout_width="wrap_content"
             android:layout_height="wrap_content"
-            android:layout_margin="8dp"
+            android:layout_margin="12dp"
             android:src="@drawable/outline_folder_open_24" />

     </LinearLayout>

@@ -2,7 +2,8 @@
 <LinearLayout xmlns:android="http://schemas.android.com/apk/res/android"
     android:layout_width="match_parent"
     android:layout_height="wrap_content"
-    android:padding="8dp"
+    android:layout_marginHorizontal="16dp"
+    android:layout_marginVertical="8dp"
     android:gravity="start">

     <TextView

@@ -2,7 +2,8 @@
 <LinearLayout xmlns:android="http://schemas.android.com/apk/res/android"
     android:layout_width="match_parent"
     android:layout_height="wrap_content"
-    android:padding="8dp"
+    android:layout_marginHorizontal="16dp"
+    android:layout_marginVertical="8dp"
     android:gravity="end">

     <TextView

@@ -2,135 +2,22 @@

 import argparse
 import os
+import sys
 import importlib
 from pathlib import Path

+# Add parent directory to path for imports
+sys.path.insert(0, os.path.join(os.path.dirname(__file__), '..'))
+
 from transformers import AutoTokenizer, AutoModelForCausalLM, AutoModelForImageTextToText, AutoConfig
 import torch
 import numpy as np
-
-### If you want to dump RoPE activations, apply this monkey patch to the model
-### class from Transformers that you are running (replace apertus.modeling_apertus
-### with the proper package and class for your model
-### === START ROPE DEBUG ===
-# from transformers.models.apertus.modeling_apertus import apply_rotary_pos_emb
-
-# orig_rope = apply_rotary_pos_emb
-# torch.set_printoptions(threshold=float('inf'))
-# torch.set_printoptions(precision=6, sci_mode=False)
-
-# def debug_rope(q, k, cos, sin, position_ids=None, unsqueeze_dim=1):
-# # log inputs
-# summarize(q, "RoPE.q_in")
-# summarize(k, "RoPE.k_in")
-
-# # call original
-# q_out, k_out = orig_rope(q, k, cos, sin, position_ids, unsqueeze_dim)
-
-# # log outputs
-# summarize(q_out, "RoPE.q_out")
-# summarize(k_out, "RoPE.k_out")
-
-# return q_out, k_out
-
-# # Patch it
-# import transformers.models.apertus.modeling_apertus as apertus_mod # noqa: E402
-# apertus_mod.apply_rotary_pos_emb = debug_rope
-### == END ROPE DEBUG ===
-
-
-def summarize(tensor: torch.Tensor, name: str, max_seq: int = 3, max_vals: int = 3):
-    """
-    Print a tensor in llama.cpp debug style.
-
-    Supports:
-      - 2D tensors (seq, hidden)
-      - 3D tensors (batch, seq, hidden)
-      - 4D tensors (batch, seq, heads, dim_per_head) via flattening heads × dim_per_head
-
-    Shows first and last max_vals of each vector per sequence position.
-    """
-    t = tensor.detach().to(torch.float32).cpu()
-
-    # Determine dimensions
-    if t.ndim == 3:
-        _, s, _ = t.shape
-    elif t.ndim == 2:
-        _, s = 1, t.shape[0]
-        t = t.unsqueeze(0)
-    elif t.ndim == 4:
-        _, s, _, _ = t.shape
-    else:
-        print(f"Skipping tensor due to unsupported dimensions: {t.ndim}")
-        return
-
-    ten_shape = t.shape
-
-    print(f"ggml_debug: {name} = (f32) ... = {{{ten_shape}}}")
-    print(" [")
-    print(" [")
-
-    # Determine indices for first and last sequences
-    first_indices = list(range(min(s, max_seq)))
-    last_indices = list(range(max(0, s - max_seq), s))
-
-    # Check if there's an overlap between first and last indices or if we're at the edge case of s = 2 * max_seq
-    has_overlap = bool(set(first_indices) & set(last_indices)) or (max_seq * 2 == s)
-
-    # Combine indices
-    if has_overlap:
-        # If there's overlap, just use the combined unique indices
-        indices = sorted(list(set(first_indices + last_indices)))
-        separator_index = None
-    else:
-        # If no overlap, we'll add a separator between first and last sequences
-        indices = first_indices + last_indices
-        separator_index = len(first_indices)
-
-    for i, si in enumerate(indices):
-        # Add separator if needed
-        if separator_index is not None and i == separator_index:
-            print(" ...")
-
-        # Extract appropriate slice
-        vec = t[0, si]
-        if vec.ndim == 2: # 4D case: flatten heads × dim_per_head
-            flat = vec.flatten().tolist()
-        else: # 2D or 3D case
-            flat = vec.tolist()
-
-        # First and last slices
-        first = flat[:max_vals]
-        last = flat[-max_vals:] if len(flat) >= max_vals else flat
-        first_str = ", ".join(f"{v:12.4f}" for v in first)
-        last_str = ", ".join(f"{v:12.4f}" for v in last)
-
-        print(f" [{first_str}, ..., {last_str}]")
-
-    print(" ],")
-    print(" ]")
-    print(f" sum = {t.sum().item():.6f}\n")
-
-
-def debug_hook(name):
-    def fn(_m, input, output):
-        if isinstance(input, torch.Tensor):
-            summarize(input, name + "_in")
-        elif isinstance(input, (tuple, list)) and len(input) > 0 and isinstance(input[0], torch.Tensor):
-            summarize(input[0], name + "_in")
-        if isinstance(output, torch.Tensor):
-            summarize(output, name + "_out")
-        elif isinstance(output, (tuple, list)) and len(output) > 0 and isinstance(output[0], torch.Tensor):
-            summarize(output[0], name + "_out")
-
-    return fn
-
-
-unreleased_model_name = os.getenv("UNRELEASED_MODEL_NAME")
+from utils.common import debug_hook

 parser = argparse.ArgumentParser(description="Process model with specified path")
 parser.add_argument("--model-path", "-m", help="Path to the model")
 parser.add_argument("--prompt-file", "-f", help="Optional prompt file", required=False)
+parser.add_argument("--verbose", "-v", action="store_true", help="Enable verbose debug output")
 args = parser.parse_args()

 model_path = os.environ.get("MODEL_PATH", args.model_path)
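
The row-selection logic inside `summarize` (first `max_seq` positions, last `max_seq` positions, and an optional `...` separator) is easier to follow when pulled out of the printing code. The sketch below reproduces only that part; `select_rows` is a hypothetical name, not a function in the script.

```python
def select_rows(seq_len: int, max_seq: int = 3):
    """Return (indices, separator_index) the way summarize() picks rows to print.

    separator_index is None when the head and tail windows touch or overlap,
    so no "..." line is needed between them.
    """
    first = list(range(min(seq_len, max_seq)))
    last = list(range(max(0, seq_len - max_seq), seq_len))

    # Overlap, or the edge case where head and tail are exactly adjacent.
    has_overlap = bool(set(first) & set(last)) or (max_seq * 2 == seq_len)

    if has_overlap:
        return sorted(set(first + last)), None
    return first + last, len(first)


long_case = select_rows(10)   # head and tail are disjoint
short_case = select_rows(4)   # head and tail overlap
edge_case = select_rows(6)    # head and tail are adjacent
```

The `max_seq * 2 == seq_len` check covers the one case where the two windows do not intersect yet no rows are missing between them, so printing a separator would be misleading.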

@@ -139,6 +26,12 @@ if model_path is None:
         "Model path must be specified either via --model-path argument or MODEL_PATH environment variable"
     )

+### If you want to dump RoPE activations, uncomment the following lines:
+### === START ROPE DEBUG ===
+# from utils.common import setup_rope_debug
+# setup_rope_debug("transformers.models.apertus.modeling_apertus")
+### == END ROPE DEBUG ===
+

 print("Loading model and tokenizer using AutoTokenizer:", model_path)
 tokenizer = AutoTokenizer.from_pretrained(model_path, trust_remote_code=True)

@@ -156,6 +49,7 @@ print("Number of layers: ", config.num_hidden_layers)
 print("BOS token id: ", config.bos_token_id)
 print("EOS token id: ", config.eos_token_id)

+unreleased_model_name = os.getenv("UNRELEASED_MODEL_NAME")
 if unreleased_model_name:
     model_name_lower = unreleased_model_name.lower()
     unreleased_module_path = (

@@ -184,9 +78,10 @@ else:
         model_path, device_map="auto", offload_folder="offload", trust_remote_code=True, config=config
     )

-for name, module in model.named_modules():
-    if len(list(module.children())) == 0: # only leaf modules
-        module.register_forward_hook(debug_hook(name))
+if args.verbose:
+    for name, module in model.named_modules():
+        if len(list(module.children())) == 0: # only leaf modules
+            module.register_forward_hook(debug_hook(name))

 model_name = os.path.basename(model_path)
 # Printing the Model class to allow for easier debugging. This can be useful

@@ -2,6 +2,8 @@

 import os
 import sys
+import torch

+
 def get_model_name_from_env_path(env_path_name):
     model_path = os.getenv(env_path_name)

@@ -18,3 +20,131 @@ def get_model_name_from_env_path(env_path_name):
         name = name[:-5]

     return name
+
+
+def summarize(tensor: torch.Tensor, name: str, max_seq: int = 3, max_vals: int = 3):
+    """
+    Print a tensor in llama.cpp debug style.
+
+    Supports:
+      - 2D tensors (seq, hidden)
+      - 3D tensors (batch, seq, hidden)
+      - 4D tensors (batch, seq, heads, dim_per_head) via flattening heads × dim_per_head
+
+    Shows first and last max_vals of each vector per sequence position.
+    """
+    t = tensor.detach().to(torch.float32).cpu()
+
+    # Determine dimensions
+    if t.ndim == 3:
+        _, s, _ = t.shape
+    elif t.ndim == 2:
+        _, s = 1, t.shape[0]
+        t = t.unsqueeze(0)
+    elif t.ndim == 4:
+        _, s, _, _ = t.shape
+    else:
+        print(f"Skipping tensor due to unsupported dimensions: {t.ndim}")
+        return
+
+    ten_shape = t.shape
+
+    print(f"ggml_debug: {name} = (f32) ... = {{{ten_shape}}}")
+    print(" [")
+    print(" [")
+
+    # Determine indices for first and last sequences
+    first_indices = list(range(min(s, max_seq)))
+    last_indices = list(range(max(0, s - max_seq), s))
+
+    # Check if there's an overlap between first and last indices or if we're at the edge case of s = 2 * max_seq
+    has_overlap = bool(set(first_indices) & set(last_indices)) or (max_seq * 2 == s)
+
+    # Combine indices
+    if has_overlap:
+        # If there's overlap, just use the combined unique indices
+        indices = sorted(list(set(first_indices + last_indices)))
+        separator_index = None
+    else:
+        # If no overlap, we'll add a separator between first and last sequences
+        indices = first_indices + last_indices
+        separator_index = len(first_indices)
+
+    for i, si in enumerate(indices):
+        # Add separator if needed
+        if separator_index is not None and i == separator_index:
+            print(" ...")
+
+        # Extract appropriate slice
+        vec = t[0, si]
+        if vec.ndim == 2: # 4D case: flatten heads × dim_per_head
+            flat = vec.flatten().tolist()
+        else: # 2D or 3D case
+            flat = vec.tolist()
+
+        # First and last slices
+        first = flat[:max_vals]
+        last = flat[-max_vals:] if len(flat) >= max_vals else flat
+        first_str = ", ".join(f"{v:12.4f}" for v in first)
+        last_str = ", ".join(f"{v:12.4f}" for v in last)
+
+        print(f" [{first_str}, ..., {last_str}]")
+
+    print(" ],")
+    print(" ]")
+    print(f" sum = {t.sum().item():.6f}\n")
+
+
+def debug_hook(name):
+    def fn(_m, input, output):
+        if isinstance(input, torch.Tensor):
+            summarize(input, name + "_in")
+        elif isinstance(input, (tuple, list)) and len(input) > 0 and isinstance(input[0], torch.Tensor):
+            summarize(input[0], name + "_in")
+        if isinstance(output, torch.Tensor):
+            summarize(output, name + "_out")
+        elif isinstance(output, (tuple, list)) and len(output) > 0 and isinstance(output[0], torch.Tensor):
+            summarize(output[0], name + "_out")
+
+    return fn
+
+
+def setup_rope_debug(model_module_path: str, function_name: str = "apply_rotary_pos_emb"):
+    """
+    Apply monkey patch to dump RoPE activations for debugging.
+
+    Args:
+        model_module_path: Path to the model module (e.g., "transformers.models.apertus.modeling_apertus")
+        function_name: Name of the RoPE function to patch (default: "apply_rotary_pos_emb")
+
+    Example:
+        from utils.common import setup_rope_debug
+        setup_rope_debug("transformers.models.apertus.modeling_apertus")
+    """
+    import importlib
+
+    # Import the module and get the original function
+    module = importlib.import_module(model_module_path)
+    orig_rope = getattr(module, function_name)
+
+    # Set torch print options for better debugging
+    torch.set_printoptions(threshold=float('inf'))
+    torch.set_printoptions(precision=6, sci_mode=False)
+
+    def debug_rope(q, k, cos, sin, position_ids=None, unsqueeze_dim=1):
+        # log inputs
+        summarize(q, "RoPE.q_in")
+        summarize(k, "RoPE.k_in")
+
+        # call original
+        q_out, k_out = orig_rope(q, k, cos, sin, position_ids, unsqueeze_dim)
+
+        # log outputs
+        summarize(q_out, "RoPE.q_out")
+        summarize(k_out, "RoPE.k_out")
+
+        return q_out, k_out
+
+    # Patch it
+    setattr(module, function_name, debug_rope)
+    print(f"RoPE debug patching applied to {model_module_path}.{function_name}")
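
`setup_rope_debug` follows a common monkey-patching pattern: import a module by its dotted path, keep a reference to the original function, and replace the module attribute with a logging wrapper. The sketch below shows the same pattern without `torch` or `transformers`. Everything in it is a stand-in: `fake_model_module`, its toy `apply_rotary_pos_emb`, and `patch_with_logging` are hypothetical and exist only so the example runs on its own.

```python
import importlib
import sys
import types

# Hypothetical stand-in module so the sketch runs without transformers installed.
fake = types.ModuleType("fake_model_module")


def apply_rotary_pos_emb(q, k):
    # Toy replacement for a RoPE function: just shifts both inputs.
    return q + 1, k + 1


fake.apply_rotary_pos_emb = apply_rotary_pos_emb
sys.modules["fake_model_module"] = fake

calls = []  # records what the wrapper saw, instead of printing tensors


def patch_with_logging(module_path: str, function_name: str) -> None:
    """Replace module.function_name with a wrapper that logs inputs and outputs."""
    module = importlib.import_module(module_path)
    original = getattr(module, function_name)

    def wrapper(*args, **kwargs):
        calls.append(("in", args))
        result = original(*args, **kwargs)
        calls.append(("out", result))
        return result

    setattr(module, function_name, wrapper)


patch_with_logging("fake_model_module", "apply_rotary_pos_emb")
result = fake.apply_rotary_pos_emb(1, 2)
```

One general caveat of this pattern: the patch only affects lookups that go through the module attribute. Code that ran `from module import function` before the patch keeps its reference to the original, which is a reason to apply the patch before the model is loaded.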

@@ -458,6 +458,7 @@ function(ggml_add_cpu_backend_variant_impl tag_name)
             if (GGML_RV_ZFH)
                 string(APPEND MARCH_STR "_zfh")
             endif()
+
             if (GGML_XTHEADVECTOR)
                 string(APPEND MARCH_STR "_xtheadvector")
             elseif (GGML_RVV)

@@ -465,6 +466,9 @@ function(ggml_add_cpu_backend_variant_impl tag_name)
                 if (GGML_RV_ZVFH)
                     string(APPEND MARCH_STR "_zvfh")
                 endif()
+                if (GGML_RV_ZVFBFWMA)
+                    string(APPEND MARCH_STR "_zvfbfwma")
+                endif()
             endif()
             if (GGML_RV_ZICBOP)
                 string(APPEND MARCH_STR "_zicbop")

@@ -3320,13 +3320,33 @@ void ggml_cpu_fp16_to_fp32(const ggml_fp16_t * x, float * y, int64_t n) {
         __m128 y_vec = _mm_cvtph_ps(x_vec);
         _mm_storeu_ps(y + i, y_vec);
     }
-#elif defined(__riscv_zvfh)
-    for (int vl; i < n; i += vl) {
-        vl = __riscv_vsetvl_e16m1(n - i);
-        vfloat16m1_t vx = __riscv_vle16_v_f16m1((_Float16 *)&x[i], vl);
-        vfloat32m2_t vy = __riscv_vfwcvt_f_f_v_f32m2(vx, vl);
-        __riscv_vse32_v_f32m2(&y[i], vy, vl);
+
+#elif defined(__riscv_v_intrinsic) && defined(__riscv_zvfhmin)
+    // calculate step size
+    const int epr = __riscv_vsetvlmax_e16m2();
+    const int step = epr * 2;
+    const int np = (n & ~(step - 1));
+
+    // unroll by 2
+    for (; i < np; i += step) {
+        vfloat16m2_t ax0 = __riscv_vle16_v_f16m2((const _Float16*)x + i, epr);
+        vfloat32m4_t ay0 = __riscv_vfwcvt_f_f_v_f32m4(ax0, epr);
+        __riscv_vse32_v_f32m4(y + i, ay0, epr);
+
+        vfloat16m2_t ax1 = __riscv_vle16_v_f16m2((const _Float16*)x + i + epr, epr);
+        vfloat32m4_t ay1 = __riscv_vfwcvt_f_f_v_f32m4(ax1, epr);
+        __riscv_vse32_v_f32m4(y + i + epr, ay1, epr);
+    }
+
+    // leftovers
+    int vl;
+    for (i = np; i < n; i += vl) {
+        vl = __riscv_vsetvl_e16m2(n - i);
+        vfloat16m2_t ax0 = __riscv_vle16_v_f16m2((const _Float16*)x + i, vl);
+        vfloat32m4_t ay0 = __riscv_vfwcvt_f_f_v_f32m4(ax0, vl);
+        __riscv_vse32_v_f32m4(y + i, ay0, vl);
     }
+
 #endif

     for (; i < n; ++i) {
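
The new RISC-V paths split the work into an unrolled main loop that runs up to `np = n & ~(step - 1)` and a leftover loop for the rest. The mask rounds `n` down to a multiple of `step` only when `step` is a power of two, which the sketch below makes explicit with an assertion. `split_main_and_tail` is a hypothetical helper written for illustration, not part of ggml.

```python
def split_main_and_tail(n: int, step: int):
    """Split n elements into an unrolled main part and a leftover tail.

    Uses the same bit mask as the C code, which is only a correct
    round-down when step is a power of two.
    """
    assert step > 0 and step & (step - 1) == 0, "step must be a power of two"
    main = n & ~(step - 1)
    return main, n - main


typical = split_main_and_tail(100, 32)   # 96 elements unrolled, 4 left over
too_short = split_main_and_tail(31, 32)  # nothing for the unrolled loop
exact = split_main_and_tail(64, 32)      # no leftovers
```

In the C code `step` is twice the value returned by `__riscv_vsetvlmax_e16m2()`, so the power-of-two requirement holds whenever the hardware vector length is itself a power of two.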

@@ -3371,6 +3391,31 @@ void ggml_cpu_bf16_to_fp32(const ggml_bf16_t * x, float * y, int64_t n) {
                                     (const __m128i *)(x + i))),
                             16)));
     }
+#elif defined(__riscv_v_intrinsic) && defined(__riscv_zvfbfmin)
+    // calculate step size
+    const int epr = __riscv_vsetvlmax_e16m2();
+    const int step = epr * 2;
+    const int np = (n & ~(step - 1));
+
+    // unroll by 2
+    for (; i < np; i += step) {
+        vbfloat16m2_t ax0 = __riscv_vle16_v_bf16m2((const __bf16*)x + i, epr);
+        vfloat32m4_t ay0 = __riscv_vfwcvtbf16_f_f_v_f32m4(ax0, epr);
+        __riscv_vse32_v_f32m4(y + i, ay0, epr);
+
+        vbfloat16m2_t ax1 = __riscv_vle16_v_bf16m2((const __bf16*)x + i + epr, epr);
+        vfloat32m4_t ay1 = __riscv_vfwcvtbf16_f_f_v_f32m4(ax1, epr);
+        __riscv_vse32_v_f32m4(y + i + epr, ay1, epr);
+    }
+
+    // leftovers
+    int vl;
+    for (i = np; i < n; i += vl) {
+        vl = __riscv_vsetvl_e16m2(n - i);
+        vbfloat16m2_t ax0 = __riscv_vle16_v_bf16m2((const __bf16*)x + i, vl);
+        vfloat32m4_t ay0 = __riscv_vfwcvtbf16_f_f_v_f32m4(ax0, vl);
+        __riscv_vse32_v_f32m4(y + i, ay0, vl);
+    }
 #endif
     for (; i < n; i++) {
         y[i] = GGML_BF16_TO_FP32(x[i]);
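
Widening bf16 to fp32 is cheap because a bfloat16 value is the upper 16 bits of an IEEE-754 binary32 value, so the conversion amounts to a 16-bit left shift of the bit pattern. The sketch below shows this in pure Python with `struct`. It assumes the standard bfloat16 layout; `bf16_bits_to_float` is a hypothetical helper, not a ggml function.

```python
import struct


def bf16_bits_to_float(bits: int) -> float:
    """Widen a bfloat16 bit pattern (0..0xFFFF) to a float via binary32."""
    as_u32 = (bits & 0xFFFF) << 16  # bf16 occupies the high half of the fp32 word
    return struct.unpack("<f", struct.pack("<I", as_u32))[0]


one = bf16_bits_to_float(0x3F80)
minus_two = bf16_bits_to_float(0xC000)
approx_pi = bf16_bits_to_float(0x4049)
```

The last value shows the precision cost of the format: with 7 explicit mantissa bits, the closest bf16 pattern below pi decodes to 3.140625.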

@ -195,8 +195,48 @@ void ggml_vec_dot_bf16(int n, float * GGML_RESTRICT s, size_t bs, ggml_bf16_t *

|

|||

sumf += (ggml_float)_mm_cvtss_f32(g);

|

||||

|

||||

#undef LOAD

|

||||

#endif

|

||||

#elif defined(__riscv_v_intrinsic) && defined(__riscv_zvfbfwma)

|

||||

size_t vl = __riscv_vsetvlmax_e32m4();

|

||||

|

||||

// initialize accumulators to all zeroes

|

||||

vfloat32m4_t vsum0 = __riscv_vfmv_v_f_f32m4(0.0f, vl);

|

||||

vfloat32m4_t vsum1 = __riscv_vfmv_v_f_f32m4(0.0f, vl);

|

||||

|

||||

// calculate step size

|

||||

const size_t epr = __riscv_vsetvlmax_e16m2();

|

||||

const size_t step = epr * 2;

|

||||

const int np = (n & ~(step - 1));

|

||||

|

||||

// unroll by 2

|

||||

for (; i < np; i += step) {

|

||||

vbfloat16m2_t ax0 = __riscv_vle16_v_bf16m2((const __bf16 *)&x[i], epr);

|

||||

vbfloat16m2_t ay0 = __riscv_vle16_v_bf16m2((const __bf16 *)&y[i], epr);

|

||||

vsum0 = __riscv_vfwmaccbf16_vv_f32m4(vsum0, ax0, ay0, epr);

|

||||

__asm__ __volatile__ ("" ::: "memory");

|

||||

|

||||

vbfloat16m2_t ax1 = __riscv_vle16_v_bf16m2((const __bf16 *)&x[i + epr], epr);

|

||||

vbfloat16m2_t ay1 = __riscv_vle16_v_bf16m2((const __bf16 *)&y[i + epr], epr);

|

||||

vsum1 = __riscv_vfwmaccbf16_vv_f32m4(vsum1, ax1, ay1, epr);

|

||||

__asm__ __volatile__ ("" ::: "memory");

|

||||

}

|

||||

|

||||

// accumulate in 1 register

|

||||

vsum0 = __riscv_vfadd_vv_f32m4(vsum0, vsum1, vl);

|

||||

|

||||

// leftovers

|

||||

for (i = np; i < n; i += vl) {

|

||||

vl = __riscv_vsetvl_e16m2(n - i);

|

||||

vbfloat16m2_t ax0 = __riscv_vle16_v_bf16m2((const __bf16 *)&x[i], vl);

|

||||

vbfloat16m2_t ay0 = __riscv_vle16_v_bf16m2((const __bf16 *)&y[i], vl);

|

||||

vsum0 = __riscv_vfwmaccbf16_vv_f32m4(vsum0, ax0, ay0, vl);

|

||||

}

|

||||

|

||||

// reduce

|

||||

vl = __riscv_vsetvlmax_e32m4();

|

||||

vfloat32m1_t redsum = __riscv_vfredusum_vs_f32m4_f32m1(vsum0, __riscv_vfmv_v_f_f32m1(0.0f, 1), vl);

|

||||

sumf += __riscv_vfmv_f_s_f32m1_f32(redsum);

|

||||

|

||||

#endif

|

||||

for (; i < n; ++i) {

|

||||

sumf += (ggml_float)(GGML_BF16_TO_FP32(x[i]) *

|

||||

GGML_BF16_TO_FP32(y[i]));

|

||||

|

|

|

|||

|

|
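Note on the hunk above: the bf16 dot product keeps two independent accumulators in the unrolled loop, folds them into one, processes leftovers with a shorter vector length, and reduces once at the end. A portable sketch of that structure follows. It works on plain `float` data, `dot_two_accumulators` and `lanes` are hypothetical names, and `std::min` is only a simplified model of what `vsetvl` returns for the tail.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Dot product with two vector accumulators, a folded tail and a final reduction.
// `lanes` models the number of elements per vector register (power of two).
static double dot_two_accumulators(const float * x, const float * y, int n, int lanes) {
    assert(n >= 0 && lanes > 0 && (lanes & (lanes - 1)) == 0);
    const int step = lanes * 2;
    const int np   = n & ~(step - 1);

    // initialize accumulators to all zeroes
    std::vector<float> sum0(lanes, 0.0f);
    std::vector<float> sum1(lanes, 0.0f);

    // unroll by 2: the two accumulators have no dependency on each other
    int i = 0;
    for (; i < np; i += step) {
        for (int k = 0; k < lanes; ++k) {
            sum0[k] += x[i + k]         * y[i + k];
            sum1[k] += x[i + lanes + k] * y[i + lanes + k];
        }
    }

    // accumulate in 1 register
    for (int k = 0; k < lanes; ++k) {
        sum0[k] += sum1[k];
    }

    // leftovers: a possibly shorter vector touches only the first vl lanes
    for (int vl = 0; i < n; i += vl) {
        vl = std::min(n - i, lanes);
        for (int k = 0; k < vl; ++k) {
            sum0[k] += x[i + k] * y[i + k];
        }
    }

    // reduce across all lanes
    double total = 0.0;
    for (int k = 0; k < lanes; ++k) {
        total += sum0[k];
    }
    return total;
}
```

The final reduction covers every lane, not just the lanes touched by the last leftover iteration, which is why the hunk resets `vl` to the maximum before reducing.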

@ -224,13 +224,71 @@ inline static void ggml_vec_dot_f16_unroll(const int n, const int xs, float * GG

|

|||

}

|

||||

GGML_F16x_VEC_REDUCE(sumf[0], sum_00, sum_01, sum_02, sum_03);

|

||||

GGML_F16x_VEC_REDUCE(sumf[1], sum_10, sum_11, sum_12, sum_13);

|

||||

#elif defined(__riscv_v_intrinsic)

|

||||

// todo: RVV impl

|

||||

for (int i = 0; i < n; ++i) {

|

||||

for (int j = 0; j < GGML_VEC_DOT_UNROLL; ++j) {

|

||||

sumf[j] += (ggml_float)(GGML_CPU_FP16_TO_FP32(x[j][i])*GGML_CPU_FP16_TO_FP32(y[i]));

|

||||

}

|

||||

}

|

||||

|

||||

#elif defined(__riscv_v_intrinsic) && defined(__riscv_zvfh)

|

||||

size_t vl = __riscv_vsetvlmax_e32m4();

|

||||

|

||||

// initialize accumulators to all zeroes

|

||||

vfloat32m4_t vsum0_0 = __riscv_vfmv_v_f_f32m4(0.0f, vl);

|

||||

vfloat32m4_t vsum0_1 = __riscv_vfmv_v_f_f32m4(0.0f, vl);

|

||||

vfloat32m4_t vsum1_0 = __riscv_vfmv_v_f_f32m4(0.0f, vl);

|

||||

vfloat32m4_t vsum1_1 = __riscv_vfmv_v_f_f32m4(0.0f, vl);

|

||||

|

||||

// calculate step size

|

||||

const size_t epr = __riscv_vsetvlmax_e16m2();

|

||||

const size_t step = epr * 2;

|

||||

const int np = (n & ~(step - 1));

|

||||

|

||||

// unroll by 2 along the row dimension

|

||||

for (int i = 0; i < np; i += step) {

|

||||

vfloat16m2_t ay0 = __riscv_vle16_v_f16m2((const _Float16 *)(y + i), epr);

|

||||

vfloat16m2_t ax0_0 = __riscv_vle16_v_f16m2((const _Float16 *)(x[0] + i), epr);

|

||||

vfloat16m2_t ax1_0 = __riscv_vle16_v_f16m2((const _Float16 *)(x[1] + i), epr);

|

||||

vsum0_0 = __riscv_vfwmacc_vv_f32m4(vsum0_0, ax0_0, ay0, epr);

|

||||

vsum1_0 = __riscv_vfwmacc_vv_f32m4(vsum1_0, ax1_0, ay0, epr);

|

||||

|

||||

vfloat16m2_t ay1 = __riscv_vle16_v_f16m2((const _Float16 *)(y + i + epr), epr);

|

||||

vfloat16m2_t ax0_1 = __riscv_vle16_v_f16m2((const _Float16 *)(x[0] + i + epr), epr);

|

||||

vfloat16m2_t ax1_1 = __riscv_vle16_v_f16m2((const _Float16 *)(x[1] + i + epr), epr);

|

||||

vsum0_1 = __riscv_vfwmacc_vv_f32m4(vsum0_1, ax0_1, ay1, epr);

|

||||

vsum1_1 = __riscv_vfwmacc_vv_f32m4(vsum1_1, ax1_1, ay1, epr);

|

||||

}

|

||||

|

||||

vfloat32m4_t vsum0 = __riscv_vfadd_vv_f32m4(vsum0_0, vsum0_1, vl);

|

||||

vfloat32m4_t vsum1 = __riscv_vfadd_vv_f32m4(vsum1_0, vsum1_1, vl);

|

||||

|

||||

// leftovers

|

||||

for (int i = np; i < n; i += vl) {

|

||||

vl = __riscv_vsetvl_e16m2(n - i);

|

||||

vfloat16m2_t ay = __riscv_vle16_v_f16m2((const _Float16 *)(y + i), vl);

|

||||

vfloat16m2_t ax0 = __riscv_vle16_v_f16m2((const _Float16 *)(x[0] + i), vl);

|

||||

vfloat16m2_t ax1 = __riscv_vle16_v_f16m2((const _Float16 *)(x[1] + i), vl);

|

||||

|

||||

vsum0 = __riscv_vfwmacc_vv_f32m4(vsum0, ax0, ay, vl);

|

||||

vsum1 = __riscv_vfwmacc_vv_f32m4(vsum1, ax1, ay, vl);

|

||||

}

|

||||

|

||||

// reduce

|

||||

vl = __riscv_vsetvlmax_e32m2();

|

||||

vfloat32m2_t acc0_0 = __riscv_vfadd_vv_f32m2(__riscv_vget_v_f32m4_f32m2(vsum0, 0),

|

||||

__riscv_vget_v_f32m4_f32m2(vsum0, 1), vl);

|

||||

vl = __riscv_vsetvlmax_e32m1();

|

||||

vfloat32m1_t acc0_1 = __riscv_vfadd_vv_f32m1(__riscv_vget_v_f32m2_f32m1(acc0_0, 0),

|

||||

__riscv_vget_v_f32m2_f32m1(acc0_0, 1), vl);

|

||||

vfloat32m1_t redsum0 = __riscv_vfredusum_vs_f32m1_f32m1(

|

||||

acc0_1, __riscv_vfmv_v_f_f32m1(0.0f, 1), vl);

|

||||

|

||||

vl = __riscv_vsetvlmax_e32m2();

|

||||

vfloat32m2_t acc1_0 = __riscv_vfadd_vv_f32m2(__riscv_vget_v_f32m4_f32m2(vsum1, 0),

|

||||

__riscv_vget_v_f32m4_f32m2(vsum1, 1), vl);

|

||||

vl = __riscv_vsetvlmax_e32m1();

|

||||

vfloat32m1_t acc1_1 = __riscv_vfadd_vv_f32m1(__riscv_vget_v_f32m2_f32m1(acc1_0, 0),

|

||||

__riscv_vget_v_f32m2_f32m1(acc1_0, 1), vl);

|

||||

vfloat32m1_t redsum1 = __riscv_vfredusum_vs_f32m1_f32m1(

|

||||

acc1_1, __riscv_vfmv_v_f_f32m1(0.0f, 1), vl);

|

||||

sumf[0] = __riscv_vfmv_f_s_f32m1_f32(redsum0);

|

||||

sumf[1] = __riscv_vfmv_f_s_f32m1_f32(redsum1);

|

||||

|

||||

#else

|

||||

const int np = (n & ~(GGML_F16_STEP - 1));

|

||||

|

||||

|

|

@ -475,15 +533,39 @@ inline static void ggml_vec_mad_f16(const int n, ggml_fp16_t * GGML_RESTRICT y,

|

|||

}

|

||||

np = n;

|

||||

#elif defined(__riscv_zvfh) // implies __riscv_v_intrinsic

|

||||

const int np = n;

|

||||

_Float16 hv = (_Float16)v;

|

||||

for (int i = 0, avl; i < n; i += avl) {

|

||||

avl = __riscv_vsetvl_e16m8(n - i);

|

||||

vfloat16m8_t ax = __riscv_vle16_v_f16m8((const _Float16 *)&x[i], avl);

|

||||

vfloat16m8_t ay = __riscv_vle16_v_f16m8((_Float16 *)&y[i], avl);

|

||||

vfloat16m8_t ny = __riscv_vfmadd_vf_f16m8(ax, hv, ay, avl);

|

||||

__riscv_vse16_v_f16m8((_Float16 *)&y[i], ny, avl);

|

||||

const ggml_fp16_t s = GGML_CPU_FP32_TO_FP16(v);

|

||||

const _Float16 scale = *(const _Float16*)(&s);

|

||||

|

||||

// calculate step size

|

||||

const int epr = __riscv_vsetvlmax_e16m4();

|

||||

const int step = epr * 2;

|

||||

int np = (n & ~(step - 1));

|

||||

|

||||

// unroll by 2

|

||||

for (int i = 0; i < np; i += step) {

|

||||

vfloat16m4_t ax0 = __riscv_vle16_v_f16m4((const _Float16*)x + i, epr);

|

||||

vfloat16m4_t ay0 = __riscv_vle16_v_f16m4((const _Float16*)y + i, epr);

|

||||

ay0 = __riscv_vfmacc_vf_f16m4(ay0, scale, ax0, epr);

|

||||

__riscv_vse16_v_f16m4((_Float16*)y + i, ay0, epr);

|

||||

__asm__ __volatile__ ("" ::: "memory");

|

||||

|

||||

vfloat16m4_t ax1 = __riscv_vle16_v_f16m4((const _Float16*)x + i + epr, epr);

|

||||

vfloat16m4_t ay1 = __riscv_vle16_v_f16m4((const _Float16*)y + i + epr, epr);

|

||||

ay1 = __riscv_vfmacc_vf_f16m4(ay1, scale, ax1, epr);

|

||||

__riscv_vse16_v_f16m4((_Float16*)y + i + epr, ay1, epr);

|

||||

__asm__ __volatile__ ("" ::: "memory");

|

||||

}

|

||||

|

||||

// leftovers

|

||||

int vl;

|

||||

for (int i = np; i < n; i += vl) {

|

||||

vl = __riscv_vsetvl_e16m4(n - i);

|

||||

vfloat16m4_t ax0 = __riscv_vle16_v_f16m4((const _Float16*)x + i, vl);

|

||||

vfloat16m4_t ay0 = __riscv_vle16_v_f16m4((const _Float16*)y + i, vl);

|

||||

ay0 = __riscv_vfmacc_vf_f16m4(ay0, scale, ax0, vl);

|

||||

__riscv_vse16_v_f16m4((_Float16*)y + i, ay0, vl);

|

||||

}

|

||||

np = n;

|

||||

#elif defined(GGML_SIMD)

|

||||

const int np = (n & ~(GGML_F16_STEP - 1));

|

||||

|

||||

|

|

@ -724,13 +806,34 @@ inline static void ggml_vec_scale_f16(const int n, ggml_fp16_t * y, const float

|

|||

svst1_f16(pg, (__fp16 *)(y + np), out);

|

||||

}

|

||||

#elif defined(__riscv_v_intrinsic) && defined(__riscv_zvfh)

|

||||

for (int i = 0, vl; i < n; i += vl) {

|

||||

vl = __riscv_vsetvl_e16m2(n - i);

|

||||

vfloat16m2_t vy = __riscv_vle16_v_f16m2((_Float16 *)&y[i], vl);

|

||||

vfloat32m4_t vy32 = __riscv_vfwcvt_f_f_v_f32m4(vy, vl);

|

||||

vy32 = __riscv_vfmul_vf_f32m4(vy32, v, vl);

|

||||

vy = __riscv_vfncvt_f_f_w_f16m2(vy32, vl);

|

||||

__riscv_vse16_v_f16m2((_Float16 *)&y[i], vy, vl);

|

||||

const ggml_fp16_t s = GGML_CPU_FP32_TO_FP16(v);

|

||||

const _Float16 scale = *(const _Float16*)(&s);

|

||||

|

||||

// calculate step size

|

||||

const int epr = __riscv_vsetvlmax_e16m4();

|

||||

const int step = epr * 2;

|

||||

const int np = (n & ~(step - 1));

|

||||

|

||||

// unroll by 2

|

||||

for (int i = 0; i < np; i += step) {

|

||||

vfloat16m4_t ay0 = __riscv_vle16_v_f16m4((const _Float16*)y + i, epr);

|

||||

ay0 = __riscv_vfmul_vf_f16m4(ay0, scale, epr);

|

||||

__riscv_vse16_v_f16m4((_Float16*)y + i, ay0, epr);

|

||||

__asm__ __volatile__ ("" ::: "memory");

|

||||

|

||||

vfloat16m4_t ay1 = __riscv_vle16_v_f16m4((const _Float16*)y + i + epr, epr);

|

||||

ay1 = __riscv_vfmul_vf_f16m4(ay1, scale, epr);

|

||||

__riscv_vse16_v_f16m4((_Float16*)y + i + epr, ay1, epr);

|

||||

__asm__ __volatile__ ("" ::: "memory");

|

||||

}

|

||||

|

||||

// leftovers

|

||||

int vl;

|

||||

for (int i = np; i < n; i += vl) {

|

||||

vl = __riscv_vsetvl_e16m4(n - i);

|

||||

vfloat16m4_t ay0 = __riscv_vle16_v_f16m4((const _Float16*)y + i, vl);

|

||||

ay0 = __riscv_vfmul_vf_f16m4(ay0, scale, vl);

|

||||

__riscv_vse16_v_f16m4((_Float16*)y + i, ay0, vl);

|

||||

}

|

||||

#elif defined(GGML_SIMD)

|

||||

const int np = (n & ~(GGML_F16_STEP - 1));

|

||||

|

|

|

|||

|

|

@ -78,27 +78,25 @@ namespace ggml_cuda_mma {

|

|||

// MIRRORED == Each data value is held exactly once per thread subgroup.

|

||||

DATA_LAYOUT_I_MAJOR = 0, // Always used for Turing, Ampere, Ada Lovelace, consumer Blackwell, matrix A&B for RDNA4 and CDNA.

|

||||

DATA_LAYOUT_J_MAJOR = 10, // Matrix C for CDNA and RDNA4, int and float matrix C for RDNA3.

|

||||

DATA_LAYOUT_I_MAJOR_MIRRORED = 20,

|

||||

DATA_LAYOUT_I_MAJOR_MIRRORED = 20, // Volta, matrix A&B for RDNA3.

|

||||

DATA_LAYOUT_J_MAJOR_MIRRORED = 30,

|

||||

DATA_LAYOUT_I_MAJOR_DUAL = 40, // Matrix A&B for RDNA3.

|

||||

};

|

||||

// Implemented mma combinations are:

|

||||

// - (I_MAJOR, I_MAJOR) -> I_MAJOR

|

||||

// - (I_MAJOR, I_MAJOR_MIRRORED) -> I_MAJOR

|

||||

// - (I_MAJOR, J_MAJOR_MIRRORED) -> I_MAJOR

|

||||

|

||||

constexpr bool is_i_major(const data_layout dl) {

|

||||

static constexpr bool is_i_major(const data_layout dl) {

|

||||

return dl == DATA_LAYOUT_I_MAJOR ||

|

||||

dl == DATA_LAYOUT_I_MAJOR_MIRRORED ||

|

||||

dl == DATA_LAYOUT_I_MAJOR_DUAL;

|

||||

dl == DATA_LAYOUT_I_MAJOR_MIRRORED;

|

||||

}

|

||||

|

||||

constexpr data_layout get_input_data_layout() {

|

||||

#if defined(RDNA3)

|

||||

return DATA_LAYOUT_I_MAJOR_DUAL;

|

||||

static constexpr __device__ data_layout get_input_data_layout() {

|

||||

#if defined(RDNA3) || __CUDA_ARCH__ == GGML_CUDA_CC_VOLTA

|

||||

return DATA_LAYOUT_I_MAJOR_MIRRORED;

|

||||

#else

|

||||

return DATA_LAYOUT_I_MAJOR;

|

||||

#endif // defined(RDNA3)

|

||||

#endif // defined(RDNA3) || __CUDA_ARCH__ == GGML_CUDA_CC_VOLTA

|

||||

}

|

||||

|

||||

template <int I_, int J_, typename T, data_layout ds_=DATA_LAYOUT_I_MAJOR>

|

||||

|

|

@ -462,11 +460,65 @@ namespace ggml_cuda_mma {

|

|||

}

|

||||

};

|

||||

|

||||

template <int I_, int J_, typename T>

|

||||

struct tile<I_, J_, T, DATA_LAYOUT_I_MAJOR_MIRRORED> {

|

||||

static constexpr int I = I_;

|

||||

static constexpr int J = J_;

|

||||

static constexpr data_layout dl = DATA_LAYOUT_I_MAJOR_MIRRORED;

|

||||

|

||||

// RDNA3

|

||||

static constexpr int ne = I * J / 32 * 2;

|

||||

|

||||

T x[ne] = {0};

|

||||

|

||||

static constexpr __device__ bool supported() {

|

||||

if (I == 16 && J == 16) return true;

|

||||

if (I == 16 && J == 8) return true;

|

||||

if (I == 16 && J == 4) return true;

|

||||

return false;

|

||||

}

|

||||

|

||||

static __device__ __forceinline__ int get_i(const int /*l*/) {

|

||||

if constexpr (supported()) {

|

||||

return threadIdx.x % 16;

|

||||

} else {

|

||||

NO_DEVICE_CODE;

|

||||

return -1;

|

||||

}

|

||||

}

|

||||

|

||||

static __device__ __forceinline__ int get_j(const int l) {

|

||||

if constexpr (supported()) {

|

||||

return l;

|

||||

} else {

|

||||

NO_DEVICE_CODE;

|

||||

return -1;

|

||||

}

|

||||

}

|

||||

};

|

||||

|

||||

template <int I_, int J_>

|

||||

struct tile<I_, J_, half2, DATA_LAYOUT_I_MAJOR_MIRRORED> {

|

||||

static constexpr int I = I_;

|

||||

static constexpr int J = J_;

|

||||

static constexpr data_layout dl = DATA_LAYOUT_I_MAJOR_MIRRORED;

|

||||

#if defined(RDNA3)

|

||||

static constexpr int ne = tile<I_, J_, float, DATA_LAYOUT_I_MAJOR_MIRRORED>::ne;

|

||||

|

||||

half2 x[ne] = {{0.0f, 0.0f}};

|

||||

|

||||

static constexpr __device__ bool supported() {

|

||||

return tile<I_, J_, float, DATA_LAYOUT_I_MAJOR_MIRRORED>::supported();

|

||||

}

|

||||

|

||||

static __device__ __forceinline__ int get_i(const int l) {

|

||||

return tile<I_, J_, float, DATA_LAYOUT_I_MAJOR_MIRRORED>::get_i(l);

|

||||

}

|

||||

|

||||

static __device__ __forceinline__ int get_j(const int l) {

|

||||

return tile<I_, J_, float, DATA_LAYOUT_I_MAJOR_MIRRORED>::get_j(l);

|

||||

}

|

||||

#else // Volta

|

||||

static constexpr int ne = I * J / (WARP_SIZE/4);

|

||||

|

||||

half2 x[ne] = {{0.0f, 0.0f}};

|

||||

|

|

@ -493,6 +545,29 @@ namespace ggml_cuda_mma {

|

|||

return -1;

|

||||

}

|

||||

}

|

||||

#endif // defined(RDNA3)

|

||||

};

|

||||

|

||||

template <int I_, int J_>

|

||||

struct tile<I_, J_, nv_bfloat162, DATA_LAYOUT_I_MAJOR_MIRRORED> {

|

||||

static constexpr int I = I_;

|

||||

static constexpr int J = J_;

|

||||

static constexpr data_layout dl = DATA_LAYOUT_I_MAJOR_MIRRORED;

|

||||

static constexpr int ne = tile<I_, J_, float, DATA_LAYOUT_I_MAJOR_MIRRORED>::ne;

|

||||

|

||||

nv_bfloat162 x[ne] = {{0.0f, 0.0f}};

|

||||

|

||||

static constexpr __device__ bool supported() {

|

||||

return tile<I_, J_, float, DATA_LAYOUT_I_MAJOR_MIRRORED>::supported();

|

||||

}

|

||||

|

||||

static __device__ __forceinline__ int get_i(const int l) {

|

||||

return tile<I_, J_, float, DATA_LAYOUT_I_MAJOR_MIRRORED>::get_i(l);

|

||||

}

|

||||

|

||||

static __device__ __forceinline__ int get_j(const int l) {

|

||||

return tile<I_, J_, float, DATA_LAYOUT_I_MAJOR_MIRRORED>::get_j(l);

|

||||

}

|

||||

};

|

||||

|

||||

template <int I_, int J_>

|

||||

|

|

@ -528,42 +603,6 @@ namespace ggml_cuda_mma {

|

|||

}

|

||||

};

|

||||

|

||||

template <int I_, int J_, typename T>

|

||||

struct tile<I_, J_, T, DATA_LAYOUT_I_MAJOR_DUAL> {

|

||||

static constexpr int I = I_;

|

||||

static constexpr int J = J_;

|

||||

static constexpr data_layout dl = DATA_LAYOUT_I_MAJOR_DUAL;

|

||||

|

||||

static constexpr int ne = I * J / 32 * 2;

|

||||

|

||||

T x[ne] = {0};

|

||||

|

||||

static constexpr __device__ bool supported() {

|

||||

if (I == 16 && J == 16) return true;

|

||||

if (I == 16 && J == 8) return true;

|

||||

if (I == 16 && J == 4) return true;

|

||||

return false;

|

||||

}

|

||||

|

||||

static __device__ __forceinline__ int get_i(const int l) {

|

||||

if constexpr (supported()) {

|

||||

return threadIdx.x % 16;

|

||||

} else {

|

||||

NO_DEVICE_CODE;

|

||||

return -1;

|

||||

}

|

||||

}

|

||||

|

||||

static __device__ __forceinline__ int get_j(const int l) {

|

||||

if constexpr (supported()) {

|

||||

return l;

|

||||

} else {

|

||||

NO_DEVICE_CODE;

|

||||

return -1;

|

||||

}

|

||||

}

|

||||

};

|

||||

|

||||

#if defined(TURING_MMA_AVAILABLE)

|

||||

template <int I, int J>

|

||||

static __device__ __forceinline__ tile<I, J/2, half2> get_half2(const tile<I, J, float> & tile_float) {

|

||||

|

|

|

|||

|

|
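Note on the `ggml_cuda_mma` hunks above: after this change `DATA_LAYOUT_I_MAJOR_DUAL` is gone and RDNA3 inputs use `DATA_LAYOUT_I_MAJOR_MIRRORED`, the same layout as Volta. The sketch below restates the enum, the predicate and the tile size formula as host-only C++ so they can be checked at compile time. It is an illustration under assumptions: the `__device__` qualifiers are dropped, the architecture macros are replaced by a hypothetical `mirrored_inputs` template parameter, and `mirrored_tile_shape` is a hypothetical reduced form of the `tile` specialization.

```cpp
// Enumerators and values as they appear in the hunk after the change.
enum data_layout {
    DATA_LAYOUT_I_MAJOR          =  0,
    DATA_LAYOUT_J_MAJOR          = 10,
    DATA_LAYOUT_I_MAJOR_MIRRORED = 20,
    DATA_LAYOUT_J_MAJOR_MIRRORED = 30,
};

static constexpr bool is_i_major(const data_layout dl) {
    return dl == DATA_LAYOUT_I_MAJOR ||
           dl == DATA_LAYOUT_I_MAJOR_MIRRORED;
}

// Assumption: `mirrored_inputs` stands in for
// `defined(RDNA3) || __CUDA_ARCH__ == GGML_CUDA_CC_VOLTA`.
template <bool mirrored_inputs>
static constexpr data_layout get_input_data_layout() {
    return mirrored_inputs ? DATA_LAYOUT_I_MAJOR_MIRRORED : DATA_LAYOUT_I_MAJOR;
}

// Hypothetical reduced form of the RDNA3 mirrored tile: shape, element count, support check.
template <int I_, int J_>
struct mirrored_tile_shape {
    static constexpr int I  = I_;
    static constexpr int J  = J_;
    static constexpr int ne = I * J / 32 * 2; // formula copied from the hunk

    static constexpr bool supported() {
        return I == 16 && (J == 16 || J == 8 || J == 4);
    }
};

static_assert(is_i_major(get_input_data_layout<true>()),  "mirrored inputs are I-major");
static_assert(is_i_major(get_input_data_layout<false>()), "default inputs are I-major");
static_assert(!is_i_major(DATA_LAYOUT_J_MAJOR),           "J-major is not I-major");
```

For the three supported shapes the formula gives 16, 8 and 4 elements per thread for 16x16, 16x8 and 16x4 tiles respectively.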

@ -102,31 +102,25 @@ static void ssm_conv_f32_cuda(const float * src0, const float * src1, const int

|

|||

const int threads = 128;

|

||||

GGML_ASSERT(nr % threads == 0);

|

||||

|

||||

if (n_t <= 32) {

|

||||

const dim3 blocks(n_s, (nr + threads - 1) / threads, 1);

|

||||

if (nc == 4) {

|

||||

ssm_conv_f32<threads, 4><<<blocks, threads, 0, stream>>>(src0, src1, src0_nb0, src0_nb1, src0_nb2, src1_nb1,

|

||||

dst, dst_nb0, dst_nb1, dst_nb2, n_t);

|

||||

} else if (nc == 3) {

|

||||

ssm_conv_f32<threads, 3><<<blocks, threads, 0, stream>>>(src0, src1, src0_nb0, src0_nb1, src0_nb2, src1_nb1,

|

||||

dst, dst_nb0, dst_nb1, dst_nb2, n_t);

|

||||

auto launch_kernel = [&](auto NC) {

|

||||

constexpr int kNC = decltype(NC)::value;

|

||||

if (n_t <= 32) {

|

||||

const dim3 blocks(n_s, (nr + threads - 1) / threads, 1);

|

||||

ssm_conv_f32<threads, kNC><<<blocks, threads, 0, stream>>>(src0, src1, src0_nb0, src0_nb1, src0_nb2, src1_nb1,

|

||||

dst, dst_nb0, dst_nb1, dst_nb2, n_t);

|

||||

} else {

|

||||

GGML_ABORT("Only support kernel size = 3 or size = 4 right now.");

|

||||

}

|

||||

} else {

|

||||

if (nc == 4) {

|

||||

const int64_t split_n_t = 32;

|

||||

dim3 blocks(n_s, (nr + threads - 1) / threads, (n_t + split_n_t - 1) / split_n_t);

|

||||

ssm_conv_long_token_f32<threads, 4, split_n_t><<<blocks, threads, 0, stream>>>(

|

||||

ssm_conv_long_token_f32<threads, kNC, split_n_t><<<blocks, threads, 0, stream>>>(

|

||||

src0, src1, src0_nb0, src0_nb1, src0_nb2, src1_nb1, dst, dst_nb0, dst_nb1, dst_nb2, n_t);

|

||||

} else if (nc == 3) {

|

||||

const int64_t split_n_t = 32;

|

||||

dim3 blocks(n_s, (nr + threads - 1) / threads, (n_t + split_n_t - 1) / split_n_t);

|

||||

ssm_conv_long_token_f32<threads, 3, split_n_t><<<blocks, threads, 0, stream>>>(

|

||||

src0, src1, src0_nb0, src0_nb1, src0_nb2, src1_nb1, dst, dst_nb0, dst_nb1, dst_nb2, n_t);

|

||||

} else {

|

||||

GGML_ABORT("Only support kernel size = 3 or size = 4 right now.");

|

||||

}

|

||||

};

|

||||

|

||||

switch (nc) {

|

||||

case 3: launch_kernel(std::integral_constant<int, 3>{}); break;

|

||||

case 4: launch_kernel(std::integral_constant<int, 4>{}); break;

|

||||

case 9: launch_kernel(std::integral_constant<int, 9>{}); break;

|

||||

default: GGML_ABORT("Only support kernel sizes 3, 4, 9 right now.");

|

||||

}

|

||||

}

|

||||

|

||||

|

|

|

|||

|

|
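Note on the hunk above: the duplicated `if (nc == 4) ... else if (nc == 3)` branches are replaced by a generic lambda that receives the kernel width as a `std::integral_constant`, so one body serves every supported width and adding width 9 is a single `case`. The sketch below shows the same dispatch pattern in plain C++14 without CUDA. `short_kernel`, `long_kernel` and `dispatch_conv_kernel` are hypothetical stand-ins that return a value instead of launching a kernel, and an exception replaces `GGML_ABORT`.

```cpp
#include <stdexcept>
#include <type_traits>

// Hypothetical stand-ins for the two kernel templates; they only report their parameters.
template <int NC>
static int short_kernel() { return NC; }

template <int NC, int SPLIT>
static int long_kernel() { return NC * 100 + SPLIT; }

// Maps the runtime width `nc` to a compile-time template argument.
static int dispatch_conv_kernel(int nc, int n_t) {
    int result = 0;

    auto launch_kernel = [&](auto NC) {
        // The value travels in the argument's type, so it is usable as a template argument.
        constexpr int kNC = decltype(NC)::value;
        if (n_t <= 32) {
            result = short_kernel<kNC>();
        } else {
            constexpr int split_n_t = 32;
            result = long_kernel<kNC, split_n_t>();
        }
    };

    switch (nc) {
        case 3: launch_kernel(std::integral_constant<int, 3>{}); break;
        case 4: launch_kernel(std::integral_constant<int, 4>{}); break;
        case 9: launch_kernel(std::integral_constant<int, 9>{}); break;
        default: throw std::invalid_argument("Only kernel sizes 3, 4, 9 are supported.");
    }
    return result;
}
```

Each `case` instantiates the lambda body once for that width, so the set of compiled kernels is exactly the set of listed cases.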

@ -1527,6 +1527,8 @@ private:

|

|||

#endif // GGML_VULKAN_MEMORY_DEBUG

|

||||

|

||||

static bool vk_perf_logger_enabled = false;

|

||||

static bool vk_perf_logger_concurrent = false;

|

||||

static bool vk_enable_sync_logger = false;

|

||||

// number of calls between perf logger prints

|

||||

static uint32_t vk_perf_logger_frequency = 1;

|

||||

|

||||

|

|

@ -1577,14 +1579,14 @@ class vk_perf_logger {

|

|||

flops.clear();

|

||||

}

|

||||

|

||||

void log_timing(const ggml_tensor * node, const char *fusion_name, uint64_t time) {

|

||||

std::string get_node_fusion_name(const ggml_tensor * node, const char *fusion_name, uint64_t *n_flops) {

|

||||

*n_flops = 0;

|

||||

std::string fusion_str;

|

||||

if (fusion_name) {

|

||||

fusion_str = fusion_name + std::string(" ");

|

||||

}

|

||||

if (node->op == GGML_OP_UNARY) {

|

||||

timings[fusion_str + ggml_unary_op_name(ggml_get_unary_op(node))].push_back(time);

|

||||

return;

|

||||

return fusion_str + ggml_unary_op_name(ggml_get_unary_op(node));

|

||||

}

|

||||

if (node->op == GGML_OP_MUL_MAT || node->op == GGML_OP_MUL_MAT_ID) {

|

||||

const uint64_t m = node->ne[0];

|

||||

|

|

@ -1606,9 +1608,8 @@ class vk_perf_logger {

|

|||

name += " batch=" + std::to_string(batch);

|

||||

}

|

||||

name = fusion_str + name;

|

||||

timings[name].push_back(time);

|

||||

flops[name].push_back(m * n * (k + (k - 1)) * batch);

|

||||

return;

|

||||

*n_flops = m * n * (k + (k - 1)) * batch;

|

||||

return name;

|

||||

}

|

||||

if (node->op == GGML_OP_CONV_2D || node->op == GGML_OP_CONV_TRANSPOSE_2D) {

|

||||

std::string name = ggml_op_name(node->op);

|

||||

|

|

@ -1624,20 +1625,17 @@ class vk_perf_logger {

|

|||

uint64_t size_M = Cout;

|

||||

uint64_t size_K = Cin * KW * KH;

|

||||

uint64_t size_N = N * OW * OH;

|

||||

uint64_t n_flops = size_M * size_N * (size_K + (size_K - 1));

|

||||

*n_flops = size_M * size_N * (size_K + (size_K - 1));

|

||||

name += " M=Cout=" + std::to_string(size_M) + ", K=Cin*KW*KH=" + std::to_string(size_K) +

|

||||

", N=N*OW*OH=" + std::to_string(size_N);

|

||||

name = fusion_str + name;

|

||||

flops[name].push_back(n_flops);

|

||||

timings[name].push_back(time);

|

||||

return;

|

||||

return name;

|

||||

}

|

||||

if (node->op == GGML_OP_RMS_NORM) {

|

||||

std::string name = ggml_op_name(node->op);

|

||||

name += "(" + std::to_string(node->ne[0]) + "," + std::to_string(node->ne[1]) + "," + std::to_string(node->ne[2]) + "," + std::to_string(node->ne[3]) + ")";

|

||||

name = fusion_str + name;

|

||||

timings[name].push_back(time);

|

||||

return;

|

||||

return name;

|

||||

}

|

||||

if (node->op == GGML_OP_FLASH_ATTN_EXT) {

|

||||

const ggml_tensor * dst = node;

|

||||

|

|

@ -1653,8 +1651,7 @@ class vk_perf_logger {

|

|||

" k(" << k->ne[0] << "," << k->ne[1] << "," << k->ne[2] << "," << k->ne[3] << "), " <<

|

||||

" v(" << v->ne[0] << "," << v->ne[1] << "," << v->ne[2] << "," << v->ne[3] << "), " <<

|

||||

" m(" << (m?m->ne[0]:0) << "," << (m?m->ne[1]:0) << "," << (m?m->ne[2]:0) << "," << (m?m->ne[3]:0) << ")";

|

||||

timings[name.str()].push_back(time);

|

||||

return;

|

||||

return name.str();

|

||||

}

|

||||

if (node->op == GGML_OP_TOP_K) {

|

||||

std::stringstream name;

|

||||

|

|

@ -1662,11 +1659,38 @@ class vk_perf_logger {

|

|||

name << ggml_op_name(node->op) <<

|

||||

" K=" << node->ne[0] <<

|

||||

" (" << node->src[0]->ne[0] << "," << node->src[0]->ne[1] << "," << node->src[0]->ne[2] << "," << node->src[0]->ne[3] << ")";

|

||||

timings[name.str()].push_back(time);

|

||||

return;

|

||||

return name.str();

|

||||

}

|

||||

timings[fusion_str + ggml_op_name(node->op)].push_back(time);

|

||||

return fusion_str + ggml_op_name(node->op);

|

||||

}

|

||||

|

||||

void log_timing(const ggml_tensor * node, const char *fusion_name, uint64_t time) {

|

||||

uint64_t n_flops;

|

||||

std::string name = get_node_fusion_name(node, fusion_name, &n_flops);

|

||||

if (n_flops) {

|

||||

flops[name].push_back(n_flops);

|

||||

}

|

||||

timings[name].push_back(time);

|

||||

}

|

||||

|

||||

void log_timing(const std::vector<ggml_tensor *> &nodes, const std::vector<const char *> &names, uint64_t time) {

|

||||

uint64_t total_flops = 0;

|

||||

std::string name;

|

||||

for (size_t n = 0; n < nodes.size(); ++n) {

|

||||

uint64_t n_flops = 0;

|

||||

name += get_node_fusion_name(nodes[n], names[n], &n_flops);

|

||||

total_flops += n_flops;

|

||||

|

||||

if (n != nodes.size() - 1) {

|

||||

name += ", ";

|

||||

}

|

||||

}

|

||||

if (total_flops) {

|

||||

flops[name].push_back(total_flops);

|

||||

}

|

||||

timings[name].push_back(time);

|

||||

}

|

||||

|

||||

private:

|

||||

std::map<std::string, std::vector<uint64_t>> timings;

|

||||

std::map<std::string, std::vector<uint64_t>> flops;

|

||||

|

|
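Note on the `vk_perf_logger` hunks above: naming a node and estimating its FLOPs is split out of `log_timing` into `get_node_fusion_name`, which returns the name and reports FLOPs through an out-parameter. A second `log_timing` overload then joins the names of several nodes with ", " and sums their FLOPs under one key. The sketch below reproduces that shape with simplified types. `node_desc`, `describe_node` and `perf_log` are hypothetical, and only the matrix-multiply FLOP estimate from the hunk is modeled: each of the `m * n` outputs needs `k` multiplies and `k - 1` adds.

```cpp
#include <cstddef>
#include <cstdint>
#include <map>
#include <string>
#include <vector>

// Hypothetical, heavily reduced stand-in for ggml_tensor.
struct node_desc {
    std::string op;
    uint64_t    m, n, k, batch;
    bool        is_matmul;
};

// Returns the display name; writes the FLOP estimate (0 if unknown) to *n_flops.
static std::string describe_node(const node_desc & node, const char * fusion_name, uint64_t * n_flops) {
    *n_flops = 0;
    std::string name;
    if (fusion_name) {
        name = fusion_name + std::string(" ");
    }
    name += node.op;
    if (node.is_matmul) {
        *n_flops = node.m * node.n * (node.k + (node.k - 1)) * node.batch;
    }
    return name;
}

struct perf_log {
    std::map<std::string, std::vector<uint64_t>> timings;
    std::map<std::string, std::vector<uint64_t>> flops;

    // single node
    void log_timing(const node_desc & node, const char * fusion_name, uint64_t time) {
        uint64_t n_flops;
        const std::string name = describe_node(node, fusion_name, &n_flops);
        if (n_flops) {
            flops[name].push_back(n_flops);
        }
        timings[name].push_back(time);
    }

    // several nodes measured by one timestamp pair: one combined key
    void log_timing(const std::vector<node_desc> & nodes, const std::vector<const char *> & names, uint64_t time) {
        uint64_t    total_flops = 0;
        std::string name;
        for (std::size_t i = 0; i < nodes.size(); ++i) {
            uint64_t n_flops = 0;
            name += describe_node(nodes[i], names[i], &n_flops);
            total_flops += n_flops;
            if (i != nodes.size() - 1) {
                name += ", ";
            }
        }
        if (total_flops) {
            flops[name].push_back(total_flops);
        }
        timings[name].push_back(time);
    }
};
```

A 2x3 output with `k = 4` and `batch = 1` is estimated at 2 * 3 * (4 + 3) = 42 FLOPs; a node without an estimate adds a timing entry but no FLOP entry.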

@ -1729,7 +1753,9 @@ struct ggml_backend_vk_context {

|

|||

std::unique_ptr<vk_perf_logger> perf_logger;

|

||||

vk::QueryPool query_pool;

|

||||

std::vector<const char *> query_fusion_names;

|

||||

std::vector<int> query_fusion_node_count;

|

||||

std::vector<ggml_tensor *> query_nodes;

|

||||

std::vector<int> query_node_idx;

|

||||

int32_t num_queries {};

|

||||

int32_t query_idx {};

|

||||

};

|

||||

|

|

@ -5194,6 +5220,8 @@ static void ggml_vk_instance_init() {

|

|||

}

|

||||

|

||||

vk_perf_logger_enabled = getenv("GGML_VK_PERF_LOGGER") != nullptr;

|

||||

vk_perf_logger_concurrent = getenv("GGML_VK_PERF_LOGGER_CONCURRENT") != nullptr;

|

||||

vk_enable_sync_logger = getenv("GGML_VK_SYNC_LOGGER") != nullptr;

|

||||

const char* GGML_VK_PERF_LOGGER_FREQUENCY = getenv("GGML_VK_PERF_LOGGER_FREQUENCY");

|

||||

|

||||

if (GGML_VK_PERF_LOGGER_FREQUENCY != nullptr) {

|

||||

|

|
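Note on the hunk above: the new `GGML_VK_PERF_LOGGER_CONCURRENT` and `GGML_VK_SYNC_LOGGER` switches follow the existing pattern of treating an environment variable as enabled whenever it is present. A minimal sketch of that check follows; `env_flag_set` is a hypothetical helper name, not a function from the source.

```cpp
#include <cstdlib>

// A flag counts as enabled when the variable exists, whatever its value.
// Note that this makes `NAME=0` and an empty `NAME=` both count as enabled.
static bool env_flag_set(const char * name) {
    return std::getenv(name) != nullptr;
}
```

Under this convention the only way to disable a flag is to leave the variable unset.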

@ -11820,15 +11848,18 @@ static bool ggml_vk_build_graph(ggml_backend_vk_context * ctx, ggml_cgraph * cgr

|

|||

}

|

||||

}

|

||||

|

||||

#define ENABLE_SYNC_LOGGING 0

|

||||

|

||||

if (need_sync) {

|

||||

#if ENABLE_SYNC_LOGGING

|

||||

std::cerr << "sync" << std::endl;

|

||||

#endif

|

||||

if (vk_enable_sync_logger) {

|

||||

std::cerr << "sync" << std::endl;

|

||||

}

|

||||

ctx->unsynced_nodes_written.clear();

|

||||

ctx->unsynced_nodes_read.clear();

|

||||

ggml_vk_sync_buffers(ctx, compute_ctx);

|

||||

|

||||

if (vk_perf_logger_enabled && vk_perf_logger_concurrent) {

|

||||

ctx->query_node_idx[ctx->query_idx] = node_idx;

|

||||

compute_ctx->s->buffer.writeTimestamp(vk::PipelineStageFlagBits::eAllCommands, ctx->query_pool, ctx->query_idx++);

|

||||

}

|

||||

}

|

||||